If you’ve ever tried to scrape data from a website, you’ve likely encountered a CAPTCHA. Those commonly seen "I’m not a robot" checkboxes or image puzzles can stop web scraping efforts in their tracks. For businesses and developers relying on automated data collection via scraping, CAPTCHAs pose a significant barrier.

CAPTCHAs are specifically designed to block automated systems from accessing data on websites. While this is a valid way to prevent spam and protect against malicious activity, it also creates challenges for legitimate scraping, such as gathering insights from search engine results or competitor analysis. Let's dive into what CAPTCHAs mean, and how CAPTCHA solvers work to help overcome these challenges.

What is a CAPTCHA?

CAPTCHA is an acronym that stands for "Completely Automated Public Turing test to tell Computers and Humans Apart." A CAPTCHA test is designed to determine if an online user is really a human (and not a bot). Users often encounter CAPTCHA and reCAPTCHA tests on the Internet when browsing websites, or search engines, especially if the browser suspects bot-like activity.

Although CAPTCHAs are designed to block automated bots, CAPTCHAs are themselves automated. They're programmed to pop up in certain places on a website, and they automatically pass or fail users. This typically includes a quick step that requires users to enter a code or words, click images, or complete a small test to gain access to the website.

What is a Turing test? How are CAPTCHAs related to the Turing test?

A Turing test measures a computer's ability to mimic human behavior. Proposed by Alan Turing in 1950, a computer passes if its responses are indistinguishable from a human’s, regardless of whether they are correct or not.

In contrast, a CAPTCHA (often called a "Public Turing test") is designed to do the opposite: it determines whether a user is human or a bot by giving tasks that humans can easily perform but machines struggle with, such as identifying text or images.

How does a CAPTCHA work?

A CAPTCHA works by presenting a test or puzzle that is easy for humans to solve but difficult for bots to solve. A website presents a CAPTCHA test to the user in the form of an image, audio file, or a simple question that requires a response. The user completes the test by providing the correct response. This response is then sent back to the website for verification using advanced algorithms to determine whether the response is likely to have been provided by a human or a bot. If the response is deemed to be from a bot, the user is denied access to the website or service.

There are many different types of CAPTCHAs:

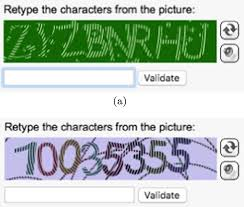

- Text Based CAPTCHA

This type of CAPTCHA presents a sequence of distorted letters or numbers that users must enter into a text box. The characters are intentionally altered to make them challenging for computers to interpret, yet recognizable for humans. Common examples include Google's reCAPTCHA, which features distorted text, and Cloudflare's CAPTCHA, which presents basic arithmetic problems.

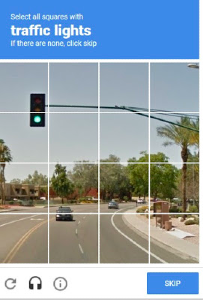

- Image Based CAPTCHA

An image-based CAPTCHA presents an image containing a specific object or shape, and the user must identify or select that object within the image. These CAPTCHAs are challenging for bots to solve because they require sophisticated image recognition capabilities. Variations of image-based CAPTCHAs may include tasks such as selecting images that feature certain objects from a grid, rearranging jigsaw-like pieces to form the original picture, clicking on images that must be rotated to a correct orientation, or other similar interactive challenges.

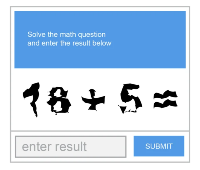

- Math or Word Problems Based CAPTCHA

These CAPTCHAs require users to solve a simple math problem or answer a trivia question to prove that they are human.

What triggers CAPTCHAs?

Some websites have CAPTCHAs in place as a preventive measure against bots. In other cases, a CAPTCHA may be triggered if the user's behavior appears to mimic that of a bot—such as making an unusually high number of page requests or clicking links at a rate that exceeds typical user patterns.

Here are suspicious examples of behaviours that might trigger a CAPTCHA test:

- IP Tracking: A user’s IP has been identified as a bot.

- Resource Loading: A user doesn’t load styles, banners, or images.

- Sign in: The user isn’t signed in to any account when accessing the site.

- Bot-Like Behavior: Weird clicking patterns, little mouse movement, and perfectly-centered checkbox clicking can all trigger a CAPTCHA test.

- No Browsing History: Real humans do more than try to log in to the same page over and over again.

What is reCAPTCHA?

reCAPTCHA is a free service Google offers in place of traditional CAPTCHAs. reCAPTCHA technology was developed by researchers at Carnegie Mellon University, then acquired by Google in 2009.

reCAPTCHA is advanced compared to the typical CAPTCHA tests. Like CAPTCHA, some reCAPTCHAs require users to enter images of text that computers have trouble deciphering. Unlike regular CAPTCHAs, reCAPTCHA gets data from real-world artifacts: pictures of streets, text from books or newspapers, etc.

It uses multiple other signals to distinguish bot behavior from human behaviour, such as:

- Image recognition

- Checkbox

- General user behavior assessment (no user interaction at all)

Many reCAPTCHA tests simply prompt the user to check a box next to the statement, "I'm not a robot." However, the test is not the actual action of clicking the checkbox – it's everything leading up to the checkbox click.

How do CAPTCHA solvers work?

For text-based CAPTCHAs, solvers use Optional Character Recognition (OCR) technology to extract characters from the distorted image. OCR works by identifying the shapes of letters and numbers, comparing them against pre-defined character sets, and filtering out noise and distortion to recognize the correct text.

For image recognition CAPTCHAs (like "select all images with traffic lights"), solvers often rely on Convolutional Neural Networks (CNNs), a type of deep learning model that excels at image classification. These models can be trained on large datasets of images to recognize and classify objects in pictures. There are multiple pre trained models available via APIs such as TensorFlow, PyTorch, or Google Vision which can be help with image recognition.

Solving Google's reCAPTCHA can look a bit different. Since it looks for human-like behaviour in the background, solvers need to simulate human mouse movements and clicks to trigger the checkbox. In this case, solvers leverage techniques like simulating user behavior through scripts. The checkbox itself may not require solving a puzzle, but interactions are designed to be human-like to pass Google's bot detection.

Solving an image-based reCAPTCHA is more challenging and requires more sophisticated techniques, often using a combination of automated image recognition models and human labor in the background. In some cases, solvers may use a mix of automated systems and crowdsourced labor where the system presents the challenge to humans who solve it in exchange for payment, in addition some automated steps. This process is known as Human-in-the-loop CAPTCHA solving.

Impact of CAPTCHAs on Web Scraping

Web scraping involves collecting large volumes of information from websites and search engines, which often triggers CAPTCHAs because of the usage of proxies and automated processes. While web scraping can be a valuable technique for gathering insights, it often faces obstacles such as CAPTCHAs, which are designed to ensure only humans can interact with a site. This creates a challenge for legitimate scraping efforts that aim to gather data without violating terms of service or overloading servers. So, often, web scrapers need to find ways to circumvent these protections through techniques such as using CAPTCHA-solving services, employing machine learning algorithms to recognize CAPTCHA patterns, or utilizing browser automation tools that simulate human behavior. This ongoing struggle between CAPTCHA systems and web scraping highlights the constant battle between security measures and the need for data extraction.

How SerpApi handles CAPTCHA challenges

SerpApi uses reliable, CAPTCHA-solving mechanisms, allowing businesses to efficiently scrape search engine results while bypassing CAPTCHAs in a compliant and ethical manner. We prioritize adhering to legal and ethical guidelines in our operations, ensuring that we use industry standard CAPTCHA-solving methods and best practices. SerpApi offers a legitimate, hassle-free solution for companies that need accurate, real-time data without the risks associated with manual scraping methods or unreliable third-party services.

Many third party CAPTCHA solving services exist which support multiple languages. Using them allows you to bypass all possible CAPTCHAs depending on the target website. These can be used along with proxies to avoid most or all blocks.

By taking the hassle of CAPTCHA solving off your hands, SerpApi lets you focus on what matters: gathering valuable, structured data from search engines.

Why Use SerpApi?

Here at SerpApi, we only perform web scraping on search engines providing publicly available data. The API calls replicate real-time searches with no login or authorization required. We are committed to maintaining legal and ethical web scraping practices and providing our customers with peace of mind.

SerpApi streamlines the process of web-scraping for you. We take care of proxies and any CAPTCHAs that may be encountered, so that you don't have to worry about your searches being blocked. If you were to implement your own scraper using tools like BeautifulSoup and Requests, you'd need to determine your own solution for this. SerpApi manages the intricacies of scraping and returns structured JSON results. This allows you to save time and effort by avoiding the need to build your own Google scraper or rely on other web scraping tools.

We also do all the work to maintain all of our parsers and adapt them to respond to changes on Google's side. This is important, as Google is constantly experimenting with new layouts, new elements, and other changes. By taking care of this for you on our side, we eliminate a lot of time and complexity from your workflow. We are dedicated to providing the most performant APIs, a legal shield protecting your right to scrape public data, and premium customer service.

To begin using SerpApi, create a free account on serpapi.com. You'll receive one hundred free search credits each month to explore the API. For usage requirements beyond that, you can view all our plans here after logging in: https://serpapi.com/change-plan.

Related Posts

You may interested in reading more about the complexities of web scraping:

Thank you for reading this blog post. If you have any questions, please feel free to reach out to us.