SerpApi provides a fast and reliable way to access search results data programmatically. Besides having the fastest response times and highest success rates of any search results scraping service available in the current market, SerpApi provides a number of helpful features that add versatility and enable you to maximize efficiency for your use case.

Some of these features may be easily overlooked, including new additions existing customers might not have heard about. Here are some tips for getting the maximum benefit from your SerpApi subscription.

Use Location Parameters

It can't be said enough that for maximum benefit, you need to take advantage of the location parameters SerpApi offers. Using location parameters allows you to accurately replicate a real user's search, and get SERP results reflecting a specific point of origin.
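As a minimal sketch of what this looks like in practice, the snippet below builds a search URL that includes a location value. The `build_search_url` helper is hypothetical, and the endpoint and parameter names (`engine`, `q`, `location`, `api_key`) are assumptions to verify against the SerpApi documentation for the engine you use.

```python
from urllib.parse import urlencode

# Assumed JSON search endpoint; confirm against the SerpApi docs.
SEARCH_ENDPOINT = "https://serpapi.com/search.json"


def build_search_url(query, location, api_key, engine="google"):
    """Return a search URL that pins the search to a point of origin."""
    params = {
        "engine": engine,
        "q": query,
        "location": location,  # e.g. a canonical name such as "Austin,Texas,United States"
        "api_key": api_key,
    }
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


url = build_search_url("coffee", "Austin,Texas,United States", "YOUR_API_KEY")
print(url)
```

Building the URL separately from sending the request makes it easy to log or inspect exactly which location was used for a given search.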

Location parameters also ensure a deeper level of consistency and reliability. You can read more about the location parameters SerpApi supports here:

Extra APIs

In addition to the many APIs SerpApi sports for scraping search engine results pages, there are a number of free APIs that serve as useful tools to support your process.

Account API

The Account API allows you to track your usage programmatically, so you can ensure you don't run out of searches or exceed your hourly throughput limit.
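One way to act on this is a small check that runs before a batch of searches. The sketch below is illustrative only: the endpoint URL and the field names `total_searches_left`, `account_rate_limit_per_hour`, and `this_hour_searches` are assumptions about the response shape, and `usage_summary` is a hypothetical helper. Check a real response from your account before relying on it.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed Account API endpoint; confirm against the SerpApi docs.
ACCOUNT_ENDPOINT = "https://serpapi.com/account.json"


def fetch_account(api_key):
    """Fetch account details as a dict (this makes a network request)."""
    url = f"{ACCOUNT_ENDPOINT}?{urlencode({'api_key': api_key})}"
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


def usage_summary(account, low_water_mark=100):
    """Summarize remaining capacity from an account payload.

    The field names read here are assumptions; missing fields count as 0.
    """
    searches_left = account.get("total_searches_left", 0)
    hourly_limit = account.get("account_rate_limit_per_hour", 0)
    used_this_hour = account.get("this_hour_searches", 0)
    return {
        "searches_left": searches_left,
        "hourly_headroom": max(hourly_limit - used_this_hour, 0),
        "running_low": searches_left < low_water_mark,
    }


# Hand-written sample payload, not real account data.
sample = {
    "total_searches_left": 42,
    "account_rate_limit_per_hour": 1000,
    "this_hour_searches": 250,
}
print(usage_summary(sample))
```

In a real script you would pass the result of `fetch_account(api_key)` to `usage_summary` and pause or alert when `running_low` is true.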

Locations API

The Locations API allows you to search our supported locations. These are based on Google Ads locations. You can use this API to get the location canonical name (e.g., "Austin,Texas,United States") or the location id (e.g., "585069efee19ad271e9c9b36") and pass either one as the value of the location parameter when using our Google Search API or our Bing Search API. Using one of these values may return more precise results.

You can also get the GPS coordinates of a named location, which may be useful for the Google Maps API.

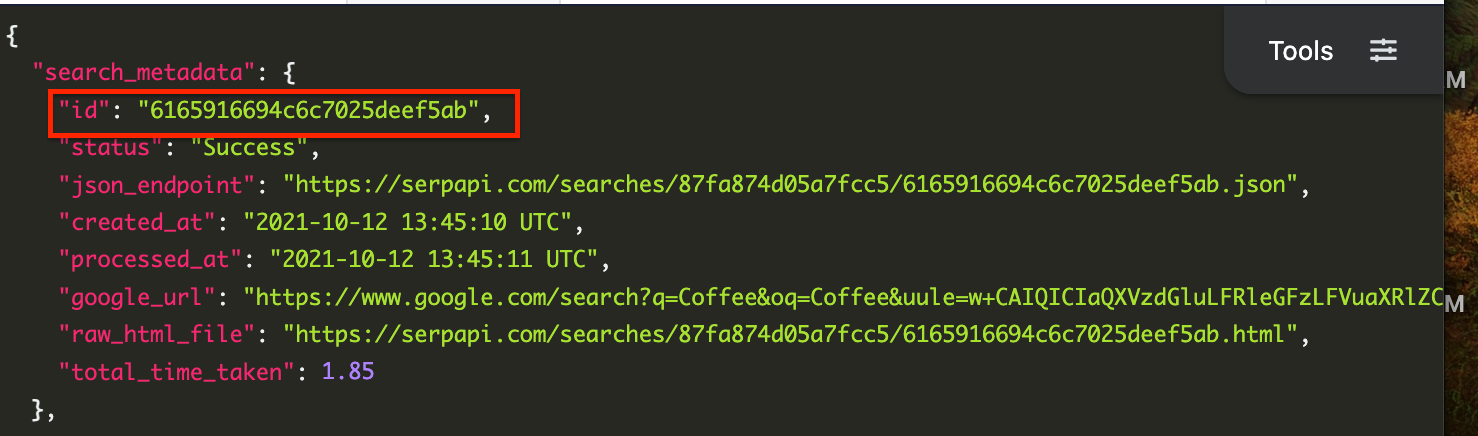
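A rough sketch of looking up a canonical name is shown below. The endpoint, the `q` and `limit` query parameters, and the `canonical_name` field are assumptions about the Locations API, and the helper names are hypothetical. The sample entry simply reuses the example values quoted above.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed Locations API endpoint; confirm against the SerpApi docs.
LOCATIONS_ENDPOINT = "https://serpapi.com/locations.json"


def build_locations_url(query, limit=5):
    """Return a URL that searches supported locations by name."""
    return f"{LOCATIONS_ENDPOINT}?{urlencode({'q': query, 'limit': limit})}"


def fetch_locations(query, limit=5):
    """Fetch matching locations as a list of dicts (network request)."""
    with urlopen(build_locations_url(query, limit), timeout=30) as resp:
        return json.load(resp)


def first_canonical_name(locations):
    """Return the canonical name of the first match, or None if empty."""
    return locations[0]["canonical_name"] if locations else None


# Hand-written sample in the assumed response shape.
sample = [
    {"id": "585069efee19ad271e9c9b36", "canonical_name": "Austin,Texas,United States"}
]
print(first_canonical_name(sample))
```

The returned canonical name can then be passed as the location value in a search request.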

Search Archive API

All of your searches are stored for 30 days. During this time, you can access them in My Searches, or by using the Search Archive API. The Search Archive API is particularly useful, because it allows you to programmatically retrieve a specific search.

This is helpful for a few situations:

- You performed searches that weren't stored on your side, and you want to retrieve the information without spending more search credits.

- Something went wrong and you need to debug.

- You are using our async parameter to perform a large number of searches in bulk.

The only prerequisite to using this API is that you need to have the Search ID of the search you want to retrieve. For this reason, it's recommended to store the Search ID for every search you perform.

The Search ID is included in the search_metadata object in the JSON response for each search:
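A minimal sketch of reading that ID and turning it into a Search Archive request follows. The archive URL pattern is an assumption to confirm in the Search Archive API documentation, the helper names are hypothetical, and `EXAMPLE_SEARCH_ID` is a placeholder rather than a real ID.

```python
from urllib.parse import urlencode


def extract_search_id(response):
    """Pull the Search ID out of a parsed JSON search response."""
    return response["search_metadata"]["id"]


def build_archive_url(search_id, api_key):
    """Return a Search Archive URL for a stored search (assumed URL pattern)."""
    query = urlencode({"api_key": api_key})
    return f"https://serpapi.com/searches/{search_id}.json?{query}"


# Hand-written sample response; only the part used here is shown.
sample_response = {"search_metadata": {"id": "EXAMPLE_SEARCH_ID"}}

search_id = extract_search_id(sample_response)
print(build_archive_url(search_id, "YOUR_API_KEY"))
```

Storing the value returned by `extract_search_id` alongside your own records is enough to retrieve the search again later.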

Async Flow

Another often overlooked feature of SerpApi is the option to perform searches asynchronously. This is useful when you have a large number of searches to process, and you don't need an immediate response.

You can process searches asynchronously by setting the async parameter to true.

The basic flow is like this:

- You send a request to SerpApi with async=true.

- SerpApi returns immediately with a JSON response that contains only the search_metadata.

- You extract the search_metadata.id.

- Do this for a large number of searches.

- Use the Search IDs you've collected to query the Search Archive API for the full response.
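The steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not a definitive implementation: the endpoint URLs, the `"Queued"` and `"Processing"` status values, and the helper names (`submit_async`, `collect_results`, `http_get_json`) are all assumptions or hypothetical. The HTTP call is passed in as `get_json` so the flow can be exercised without network access.

```python
import json
import time
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoints; confirm against the SerpApi docs.
SEARCH_ENDPOINT = "https://serpapi.com/search.json"
ARCHIVE_TEMPLATE = "https://serpapi.com/searches/{search_id}.json"


def http_get_json(url):
    """Default fetcher: GET a URL and parse the JSON body."""
    with urlopen(url, timeout=30) as resp:
        return json.load(resp)


def submit_async(queries, api_key, get_json=http_get_json):
    """Submit each query with async=true and collect the Search IDs."""
    search_ids = []
    for query in queries:
        params = {"engine": "google", "q": query, "async": "true", "api_key": api_key}
        response = get_json(f"{SEARCH_ENDPOINT}?{urlencode(params)}")
        search_ids.append(response["search_metadata"]["id"])
    return search_ids


def collect_results(search_ids, api_key, get_json=http_get_json,
                    poll_seconds=2.0, max_attempts=10):
    """Poll the Search Archive until each search is finished or attempts run out."""
    pending = list(search_ids)
    results = {}
    for _ in range(max_attempts):
        still_pending = []
        for search_id in pending:
            url = ARCHIVE_TEMPLATE.format(search_id=search_id)
            response = get_json(f"{url}?{urlencode({'api_key': api_key})}")
            status = response.get("search_metadata", {}).get("status")
            if status in ("Queued", "Processing"):  # assumed in-progress statuses
                still_pending.append(search_id)
            else:
                results[search_id] = response
        pending = still_pending
        if not pending:
            break
        time.sleep(poll_seconds)
    return results
```

Calling `submit_async(queries, api_key)` and then `collect_results(ids, api_key)` covers the whole flow. Any Search IDs missing from the returned dict were still in progress when polling stopped.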

This process saves you from having to wait for each search to complete before starting the next one. For a very large number of searches, it can drastically reduce the time it takes to complete them all.

You can learn more about performing async searches with Python here:

Or Node.js here:

At the time of writing, SerpApi also has a feature in development that will further improve this flow:

Playground

If you're a SerpApi user and you haven't used the Playground, you should give it a try. It is a convenient user interface for trying different searches and parameters with any of the APIs (except the "extras" mentioned above) and seeing the JSON response.

Even if you're an experienced developer and you're already comfortable writing code to access the APIs, it can be helpful to quickly and clearly see which parameters are available, how they work, and what types of data are returned in the response, or how subtle changes to your query might affect the results.

You can then use the "Export to Code" function to immediately get starter code for your query in your chosen programming language.

Renew Early / Auto Renew Early

SerpApi has a subscription-based pricing model, meaning you can't purchase a fixed number of searches to use at any time. However, SerpApi allows you to upgrade, downgrade, cancel, resubscribe, or renew at any time, which provides enough flexibility for most use cases.

If your usage is inconsistent and you have concerns about using up the last of your available searches and experiencing an interruption of service, you can enable the Auto Renew Early feature. This means if you deplete your searches, your plan will automatically renew early so that you can continue using SerpApi without any interruption.

You can renew early or turn on Auto Renew Early by signing in to your account and navigating to https://serpapi.com/plan.

"Team" Feature

SerpApi currently supports a "Team" feature, allowing users to invite other users to join them on their existing plan. Each user who joins a team will have their own API key, and their searches will be tracked separately. This can be extremely helpful if you are using SerpApi for multiple features or environments, and want to keep track of the search volumes required for each. It can also be helpful for allowing another member of your team to log in and manage billing concerns without having to share login information. You can try the team feature here:

Google Light API

SerpApi recently introduced the Google Light API, a faster way to get organic results, knowledge graphs, answer boxes, and other information from Google Search results. To prioritize speed, this API skips some of the special result types typically found in a Google Search results page (which you can still get with SerpApi's Google Search API).

This is helpful if you don't need all of the feature-rich results returned by the Google Search API, and just need to get organic results quickly and consistently.
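If your existing code already builds parameters for the Google Search API, switching may be as small as changing the engine value. The sketch below assumes the engine name is `google_light` and that the other parameters carry over unchanged. Both points should be verified against the Google Light API documentation, and `to_light_params` is a hypothetical helper.

```python
def to_light_params(params):
    """Return a copy of search params that targets the lighter engine."""
    light = dict(params)  # copy so the original params stay untouched
    light["engine"] = "google_light"  # assumed engine name; verify in the docs
    return light


full = {"engine": "google", "q": "coffee", "api_key": "YOUR_API_KEY"}
light = to_light_params(full)
print(light["engine"])
```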

Conclusion

We've covered some of the newest and most commonly overlooked SerpApi features, all with the potential to drastically improve your experience scraping search results.

I hope you have found this article informative and easy to follow. If you have any questions, feel free to contact me at ryan@serpapi.com.