Welcome back my fellow gravy-drinker! Last time we saw how to use the Google Maps API to scrape Google Maps for a single location in a city, and pull in a collection of businesses, based on a simple query.

In this instalment, we will continue our quest to find the best Sunday Roast dinner London has to offer, using SerpApi's powerful tools to scrape Google Maps Reviews and find out what folks are saying about the city's numerous pubs.

But before we can scrape those reviews, we first need to find the pubs themselves. And while we know how to gather all the results for a single set of coordinates, covering the breadth of an entire city is a different challenge altogether.

We're going to take a deep dive into the process of slicing a large area into a grid, and running a search over each section of that grid. This article is going to be quite image-heavy, so good news if you're a visual learner!

Breaking a City into a Grid

Wouldn't it be great if we could just pick a zoom/elevation level that covers the entire city and scoop up all of the pubs in one fell swoop? Sadly that's not the Google Maps way.

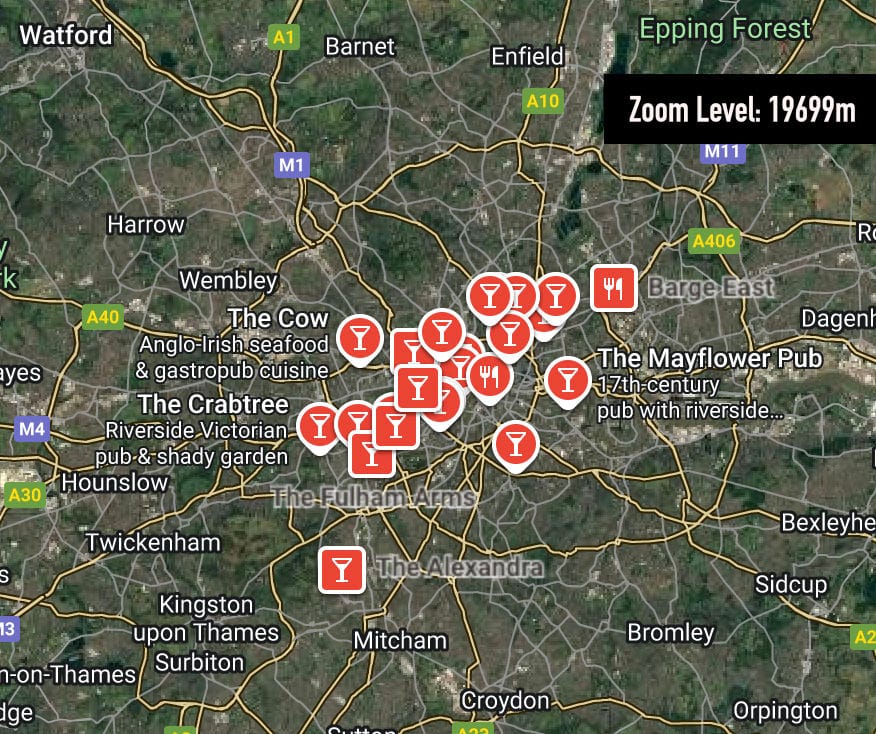

Here are a couple of screenshots at very different elevations/zoom levels - both the result of performing a single query directly on Google Maps:

We can see that in both views, we still only get around 20 results: this tells us that the zoom level dictates how granular our results will be. Therefore, we need to settle on a zoom level close enough to give us a decent number of results per 'view' (or Google Maps search).

The size of the city you wish to traverse will affect which zoom level you opt for. Once we've settled on this zoom level, we need to divide the map into a grid, then travel across the grid, pulling in pub listings for each section. We'll do this as follows:

Define a bounding box for London

Where does London begin and end? For the sake of this example we'll pick two sets of latitude/longitude values to represent the top left, and bottom right corners of our grid:

- NW corner: 51.70, -0.50

- SE corner: 51.28, 0.30

Let's see that in context, on the actual map:

Now I don't want to start any turf wars - this is just an example. If you want to try this exercise yourself, you are of course welcome to define your own city limits.

Slicing that box into a grid

If we want to scrape as many Google Maps reviews as possible across the city, we'll need to break our bounding box down into many smaller subsections of the city, and run an API query on each of them. This is because of the way Google Maps chooses to serve results based on zoom level and map position.

If that's not intuitive, think back to the screenshots of Google Maps we looked at earlier: how far you're zoomed in, and where the centre of the map sits on your screen, determines which results Google Maps will serve you.

So in order to get as many results as possible, we need several different 'views' to scrape from. To accomplish this, we can break the bounding box we declared in the previous step into a grid of smaller 'views', scrape each of them, and combine the results to cover the whole city.

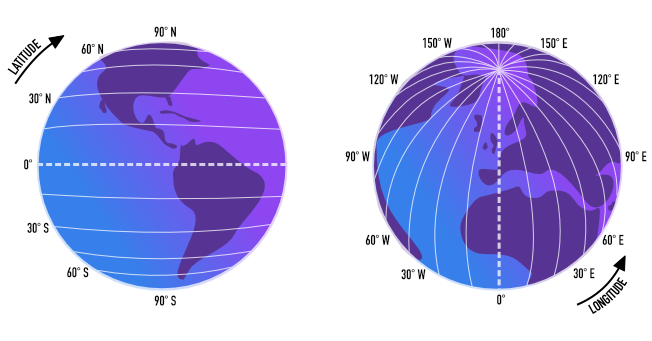

Latitude/Longitude

Let's take a quick trip back to Geography class and remind ourselves what latitude and longitude actually look like:

When looking at a pair of coordinates, latitude is the first number and longitude is the second. When we increase latitude, we head North. When we decrease it, we head South.

Similarly, when we increase longitude we head East - and therefore decreasing steers us toward the West.

If you tend to mix up latitude and longitude, don't worry - you're not the only one! Personally I think of it this way:

"Latitude looks kind of like a ladder, on which you climb up (N) or down (S)"

This little mnemonic helps me keep a strong visual in my mental model. OK, now we've revisited the basics of lat & long, we can continue.

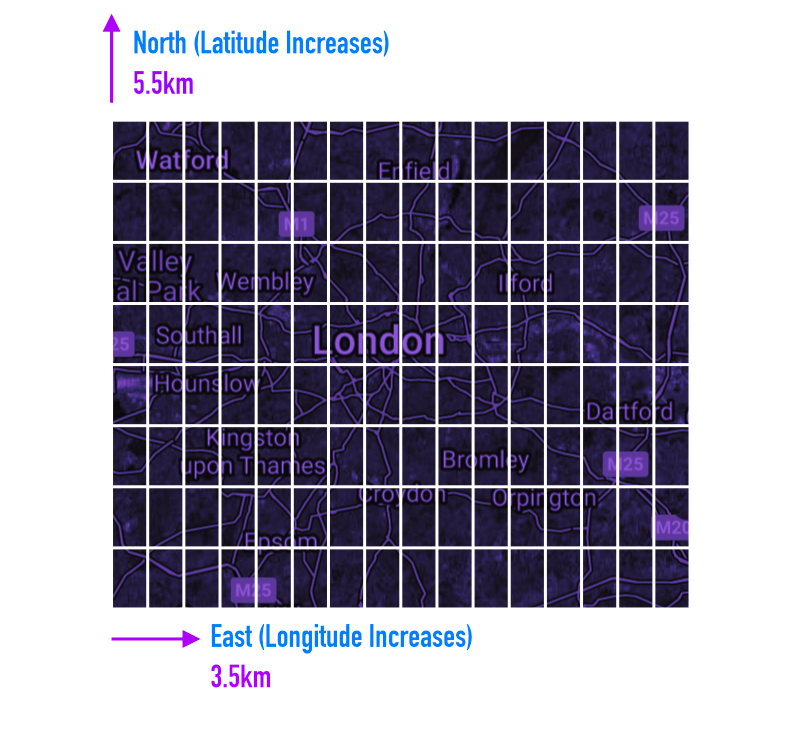

We have an area we will consider 'London' for the scope of this project, reflected in latitude/longitude points. Let's consider those for a moment:

- Latitude range = 51.28 → 51.70 → difference = 0.42°

- Longitude range = -0.50 → 0.30 → difference = 0.80°

We know that the distance of 1 degree of latitude remains relatively constant at roughly 111 kilometres (69 miles) anywhere on Earth because lines of latitude are parallel to each other.

However, longitude works slightly differently. The distance of 1 degree of longitude is not a constant value; it varies based on your location on Earth, specifically your latitude. This is because lines of longitude, known as meridians, are farthest apart at the equator and converge at the poles.
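To put a rough number on that, here is a minimal sketch that estimates the length of one degree of longitude at a given latitude. It assumes a perfectly spherical Earth and a value of 111.32 km per degree of latitude, and km_per_degree_lng is a hypothetical helper name rather than part of the project code, so treat the output as a ballpark figure only.

```ruby
# Rough length of one degree of latitude in km (assumed constant; spherical Earth).
KM_PER_DEGREE_LAT = 111.32

# Hypothetical helper: one degree of longitude shrinks with the cosine of the latitude.
def km_per_degree_lng(latitude_deg)
  KM_PER_DEGREE_LAT * Math.cos(latitude_deg * Math::PI / 180)
end

puts format('Equator: %.1f km per degree of longitude', km_per_degree_lng(0))
puts format('London:  %.1f km per degree of longitude', km_per_degree_lng(51.5))
```

At London's latitude this comes out at roughly 69 km per degree, compared with about 111 km at the equator.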

What this means for us is that the simplest approach is to slice our bounding box into a grid of rectangles (instead of squares) using a single number - or 'step size':

GRID_STEP = 0.05

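This will carve our bounding box into grid sections of roughly 5.5 km in latitude (north to south) and 3.5 km in longitude (east to west). Our latitude range of 0.42° isn't an exact multiple of the step (0.42 / 0.05 = 8.4), so we get 8 full rows with a thin strip left over at the northern edge, while the longitude range divides evenly into 16 columns (0.80 / 0.05 = 16). With the measurements we're currently working with, we end up slicing London into a grid of 8 rows by 16 columns.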

Let's try and visualise that:

While it's possible to instead make the grid cells square, this is the simplest approach mathematically, so we'll go with it to keep the focus on scraping.

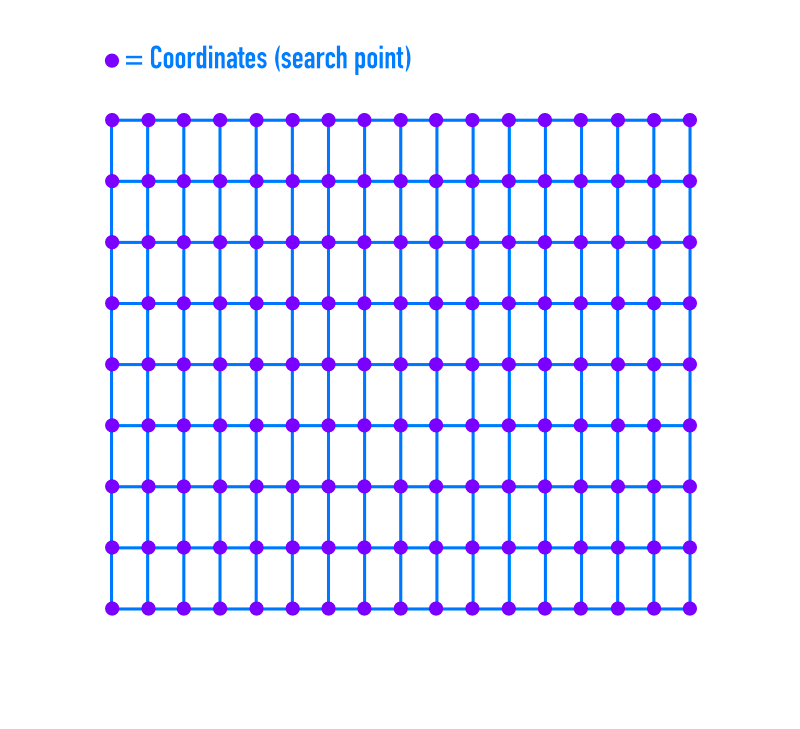

Search Points - Where Are They?

Each dot in the diagram below marks a set of coordinates, and therefore shows us where on the map our script will trigger a search to scrape business information from Google Maps. As we can see, this is a lot of searches:

Our grid is 8 rows × 16 columns (128 cells), which means there are 9 × 17 = 153 grid intersections. So our script will make 153 API calls in total, one per intersection point.
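If you'd like to check that arithmetic for your own bounding box, a small sketch along these lines should do it. grid_dimensions and GRID_EPSILON are hypothetical names introduced purely for illustration; the tiny epsilon is an assumption to stop floating-point division from reporting 15.999... columns instead of 16.

```ruby
# Tolerance for floating-point division (an assumption for this sketch).
GRID_EPSILON = 1e-9

# Hypothetical helper: counts whole cells and grid intersections for a bounding box.
def grid_dimensions(lat_min:, lat_max:, lng_min:, lng_max:, grid_step:)
  rows = ((lat_max - lat_min) / grid_step + GRID_EPSILON).floor
  cols = ((lng_max - lng_min) / grid_step + GRID_EPSILON).floor

  {
    rows: rows,
    cols: cols,
    cells: rows * cols,
    points: (rows + 1) * (cols + 1) # one search per intersection
  }
end

dims = grid_dimensions(lat_min: 51.28, lat_max: 51.70,
                       lng_min: -0.50, lng_max: 0.30, grid_step: 0.05)
puts dims
```

The points value is the number of API calls to budget for before running the full search.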

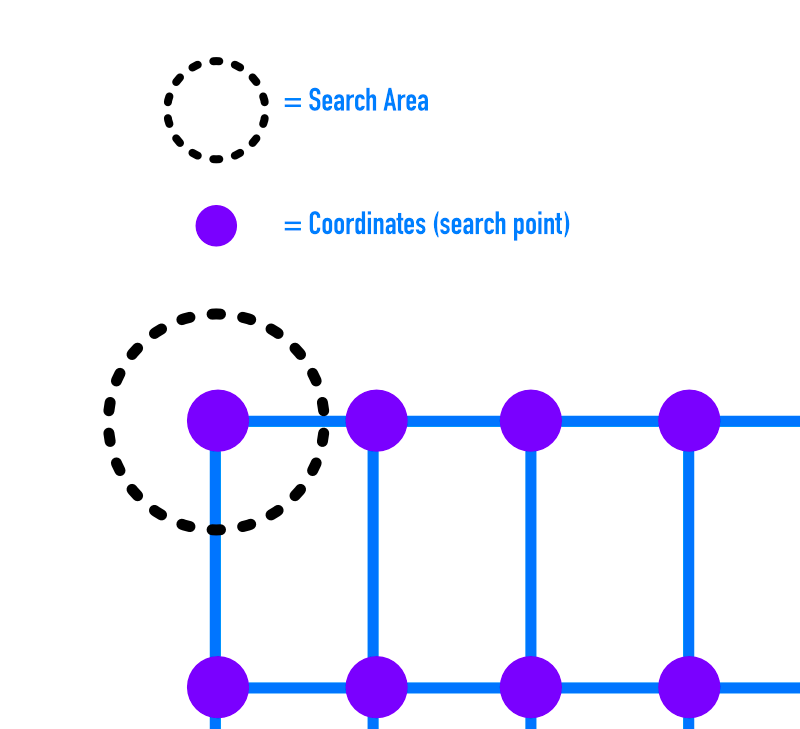

While a Google Maps search doesn't literally cover a circle, it's a useful approximation: Google's ranking tends to prioritise results closest to the map centre, so we can picture each search as a roughly circular area centred on that coordinate.

Let's take a look at the grid, with a single search area visualised:

You might be wondering: "wouldn't we get fewer results outside of our boundary if we searched from the centre of each cell?" And it's true, there would be less overspill. You would also perform fewer searches. However, for the grid we have, and with the intention of being as thorough as possible, this approach will net us more results.
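For comparison, the cell-centre alternative could be sketched like this. cell_centres is a hypothetical helper and not part of the project code; it assumes the same bounding box and step, and rounds each coordinate to six decimal places to keep floating-point noise out of the results.

```ruby
# Hypothetical alternative: one search point at the centre of each whole cell.
def cell_centres(lat_min:, lat_max:, lng_min:, lng_max:, grid_step:)
  # Small tolerance so floating-point division doesn't lose a row or column.
  rows = ((lat_max - lat_min) / grid_step + 1e-9).floor
  cols = ((lng_max - lng_min) / grid_step + 1e-9).floor

  (0...rows).flat_map do |row|
    (0...cols).map do |col|
      [
        (lat_min + (row + 0.5) * grid_step).round(6),
        (lng_min + (col + 0.5) * grid_step).round(6)
      ]
    end
  end
end

centres = cell_centres(lat_min: 51.28, lat_max: 51.70,
                       lng_min: -0.50, lng_max: 0.30, grid_step: 0.05)
puts "#{centres.size} searches (one per cell)"
puts "First centre: #{centres.first.inspect}"
```

With our bounding box that would mean one search per cell, so 128 searches rather than 153, at the cost of thinner coverage along the edges.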

Overlapping Results

Let's take a quick look at the whole grid with search areas visualised so that we can see the overlap:

One of the first things we notice is that, because the coordinates on the edges of our grid are actually the centre of a search, we end up reaching outside of our grid for those particular searches. This could be remedied by making our grid smaller, if we were concerned with reducing our search area.

As we can see from those overlapping dotted circles, many of our searches will cover the same ground, so once we've pulled in results from Google Maps we'll need to remove the duplicates.

As mentioned in the first part of this series, there are many pubs in the UK that share the same name, even though they are completely unrelated. So when we identify a result as a duplicate, we need to use a unique identifier to do so.

Fortunately, each result in SerpApi's Google Maps API JSON response includes a data_id, which we will also need when we want to scrape review information from the Google Maps Reviews API.
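To see why the identifier matters, here is a tiny sketch using made-up data. The titles, addresses and data_id values below are placeholders invented for illustration, not real API output: two unrelated pubs share a name, and one of them shows up twice because two overlapping searches both returned it.

```ruby
# Placeholder results (hypothetical values, not real SerpApi output).
results = [
  { title: 'The Red Lion', address: '1 Example Street', data_id: 'id-aaa' },
  { title: 'The Red Lion', address: '99 Sample Road',   data_id: 'id-bbb' },
  { title: 'The Red Lion', address: '1 Example Street', data_id: 'id-aaa' }
]

# Deduplicating by name wrongly merges two different pubs.
by_name = results.uniq { |pub| pub[:title] }

# Deduplicating by data_id only removes the genuine repeat.
by_data_id = results.uniq { |pub| pub[:data_id] }

puts "Unique by name:    #{by_name.size}"
puts "Unique by data_id: #{by_data_id.size}"
```

Deduplicating by name leaves a single pub, while deduplicating by data_id correctly keeps both.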

Adapting the Code

We've taken a really deep dive into the ideas behind performing a grid search, so if you're still with us: well done!

Now we're going to adapt the code from last time (you can find the files here).

In the last article, we searched for pubs at one set of coordinates. Now we're going to extend our application to iterate through the grid we defined above, to pull in a list of pubs from across the city.

Once we have a list of candidate pubs, we'll be ready for part 3 of the series where we'll use SerpApi to scrape Google Maps reviews to figure out where the fluffiest Yorkshire Puddings are hiding out!

LONDON_GRID Constant

This constant contains a Ruby Hash object, with the boundary coordinates we've chosen, along with the grid_step, which dictates the size of our grid cells. For a smaller city we'd want a smaller grid step.

    # Bounding box and grid step for London
    LONDON_GRID = {
      lat_min: 51.28,
      lat_max: 51.70,
      lng_min: -0.50,
      lng_max: 0.30,
      grid_step: 0.05
    }.freeze

We'll pass the LONDON_GRID hash into our CityGrid when we initialise it. Let's look at that next:

CityGrid Class

For the code relating to breaking the city into a grid, let's group this related logic into a CityGrid class:

    # Handles dividing a city bounding box into a grid of points
    class CityGrid
      # Define getter methods for the following properties
      attr_reader :lat_min, :lat_max, :lng_min, :lng_max, :grid_step

      def initialize(grid_config)
        @lat_min = grid_config[:lat_min]
        @lat_max = grid_config[:lat_max]
        @lng_min = grid_config[:lng_min]
        @lng_max = grid_config[:lng_max]
        @grid_step = grid_config[:grid_step]
      end

      # Generate all grid points (lat, lng) within the bounding box
      def points
        points = []
        lat = lat_min
        while lat <= lat_max
          lng = lng_min
          while lng <= lng_max
            points << [lat, lng]
            lng += grid_step
          end
          lat += grid_step
        end
        points
      end
    end

CityGrid is set up to accept a hash object which dictates all the boundaries and grid step size. With this information, the points method can be called to generate an array of grid points, for our RoastFinder class to iterate through and call the Google Maps API for each pair of coordinates.
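One thing to be aware of: the points method adds grid_step to a running total, and with floating-point numbers small rounding errors can build up along the way. Depending on your bounds, that could in principle nudge the final value just past the maximum and drop the last row or column. As a hedged alternative, here is a sketch that computes each coordinate from its index instead. grid_points_by_index is a hypothetical standalone method, not part of the CityGrid class above, and the rounding to six decimal places is an assumption about how much precision we need.

```ruby
# Sketch of an index-based alternative to accumulating grid_step in a loop.
# Each coordinate is computed as min + index * step, so rounding error
# does not carry over from one iteration to the next.
def grid_points_by_index(lat_min:, lat_max:, lng_min:, lng_max:, grid_step:)
  # Number of whole steps that fit in each direction (with a small tolerance).
  lat_steps = ((lat_max - lat_min) / grid_step + 1e-9).floor
  lng_steps = ((lng_max - lng_min) / grid_step + 1e-9).floor

  (0..lat_steps).flat_map do |i|
    (0..lng_steps).map do |j|
      [(lat_min + i * grid_step).round(6), (lng_min + j * grid_step).round(6)]
    end
  end
end

points = grid_points_by_index(lat_min: 51.28, lat_max: 51.70,
                              lng_min: -0.50, lng_max: 0.30, grid_step: 0.05)
puts "#{points.size} grid points"
puts "First: #{points.first.inspect}"
puts "Last:  #{points.last.inspect}"
```

Because the keyword arguments match the keys of the LONDON_GRID hash, it could also be called as grid_points_by_index(**LONDON_GRID).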

RoastFinder Class (Updated)

OK time to upgrade our RoastFinder to include the power of CityGrid:

    require 'serpapi'
    require 'json'
    require_relative 'pub'
    # Require our city_grid.rb file (to have access to the CityGrid class)
    require_relative 'city_grid'

    LONDON_GRID = {
      lat_min: 51.28,
      lat_max: 51.70,
      lng_min: -0.50,
      lng_max: 0.30,
      grid_step: 0.05
    }.freeze

    class RoastFinder
      # adds a getter method for city_grid
      attr_reader :pubs, :city_grid

      API_KEY = "your api key"

      # Instantiates a CityGrid object and assigns it to @city_grid
      def initialize
        @city_grid = CityGrid.new(LONDON_GRID)
      end

      # Adds call to grid_search
      def run
        pub_data = grid_search
        @pubs = build_pubs(pub_data)
        output_pubs
      end

      # Generate all grid points for the bounding box using CityGrid
      def grid_points
        city_grid.points
      end

      # Perform a grid search over the bounding box
      # and deduplicate pubs by data_id
      def grid_search
        seen = {}
        all_pubs = []
        grid_points.each do |lat, lng|
          pubs = fetch_pubs_at(lat, lng)
          pubs.each do |pub|
            next if seen[pub[:data_id]]

            seen[pub[:data_id]] = true
            all_pubs << pub
          end
        end
        all_pubs
      end

      # Search Google Maps for pubs around a single grid point
      def fetch_pubs_at(lat, lng)
        ll = format('@%.4f,%.4f,13z', lat, lng)
        puts "Fetching pubs at #{ll}"
        maps_params = {
          api_key: API_KEY,
          engine: 'google_maps',
          q: 'pub sunday roast',
          google_domain: 'google.co.uk',
          gl: 'uk',
          ll: ll,
          type: 'search',
          hl: 'en',
        }
        client = SerpApi::Client.new(maps_params)
        client.search[:local_results] || []
      end

      def build_pubs(pub_data)
        pub_data.map do |pub_hash|
          Pub.new(
            name: pub_hash[:title],
            address: pub_hash[:address],
            rating: pub_hash[:rating],
            place_id: pub_hash[:data_id]
          )
        end
      end

      # Outputs count of pubs scraped and first 5 in collection
      def output_pubs(n = 5)
        puts "#{pubs.count} Pubs Found"
        puts "First #{n} examples:"
        puts JSON.pretty_generate(pubs.first(n).map(&:to_h))
      end
    end

    if __FILE__ == $PROGRAM_NAME
      RoastFinder.new.run
    end

Since the RoastFinder class was started in the last part of this series, we'll just concentrate on what has changed since it was introduced:

- Adds the LONDON_GRID hash to the file

- Adds a getter method for city_grid (the instance variable which contains our CityGrid object)

- initialize now creates a CityGrid object (passing in the LONDON_GRID hash)

- run calls the new grid_search method

- grid_points wraps the points method of CityGrid (which generates a list of coordinates)

- grid_search gets the array of grid coordinates from CityGrid, calls fetch_pubs_at to scrape pubs at each location, and removes duplicates by data_id; run then builds an array of Pub objects in the @pubs instance variable

- output_pubs has now been updated to display the first 5 items in @pubs

As before, you can run this app by running the following from inside the directory containing your code:

    # install dependencies:
    gem install "serpapi"

    # run script:
    ruby roast_finder.rb

You can find the code for this section on our GitHub Tutorials repo here.

Conclusion

That's it for part two of our gastronomic quest! Not only did we extend our script to scrape Google Maps over a large area and pull in information on nearly 1,000 businesses, but we also went into thorough detail on the theory behind the technique, giving you the tools to adapt the code for your own purposes.

In the next part of this series, we'll make use of the place_id of each Pub object (which holds the data_id from the search results) to head to SerpApi's Google Maps Reviews API and start scraping customer reviews.

Once we have those reviews, we'll feed the results into sentiment analysis tools. We'll take a look at techniques we can use to weigh and score the various opinions on each establishment so our code can confidently identify the roast with the most. See you next time!