LLMs are extremely effective at summarizing content. While hallucination remains a critical problem, OpenAI’s GPT-4 in its current state is already useful for many tasks. One improvement I wish Google would implement is the ability to ask follow-up questions. This would be useful when quickly scanning through restaurant reviews. For example, we could ask a follow-up question like, “What’s good and bad about restaurant A?” and it would then extract the important pieces of information based on our question. Another example could be, “I just want to grab something quick; which restaurants should I choose?” Many use cases could benefit from this improvement, and I believe it could significantly enhance everyone’s lives.

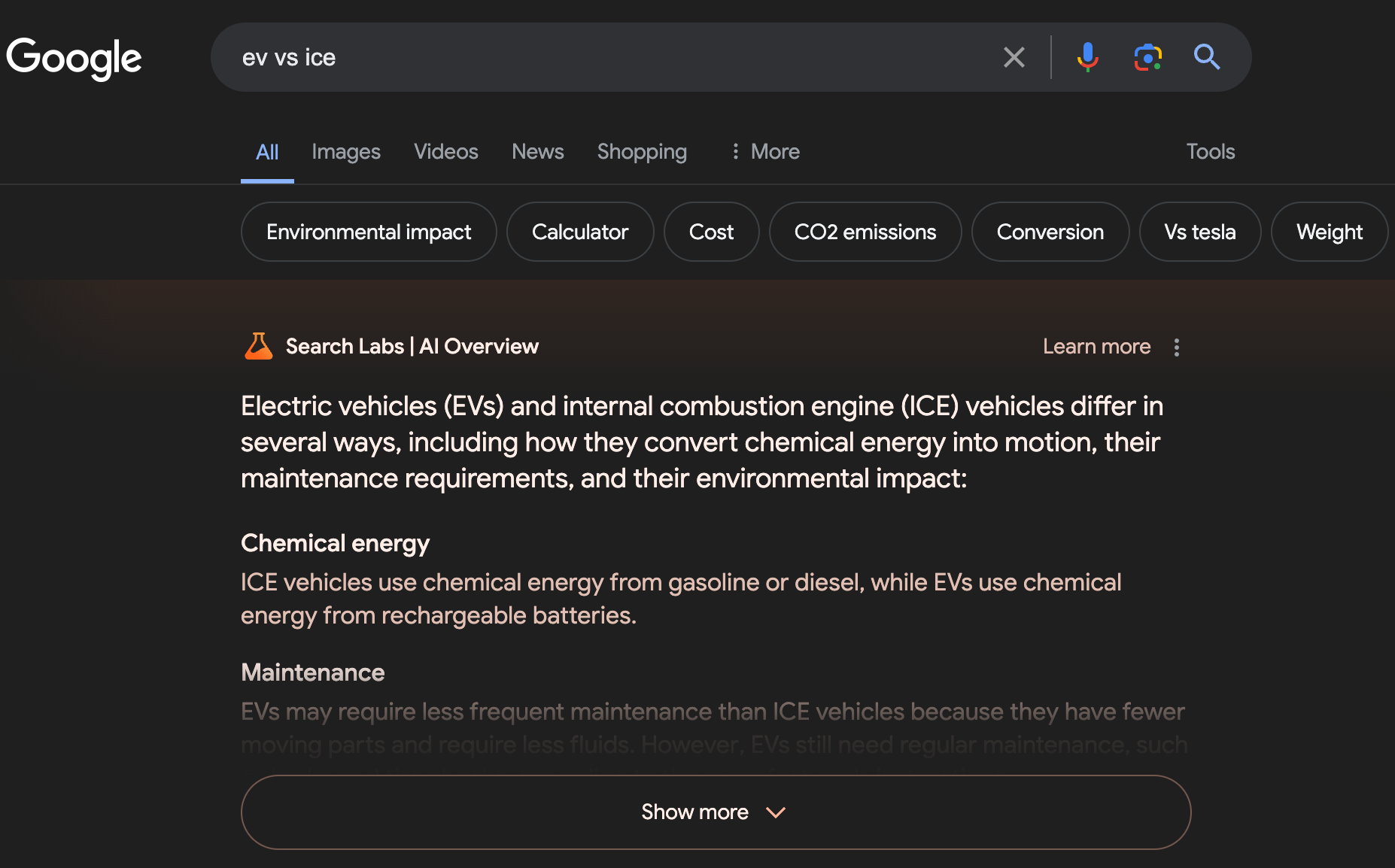

Google’s current AI implementation mostly works only with factual information, for example, when asked about the difference between an EV and an ICE vehicle.

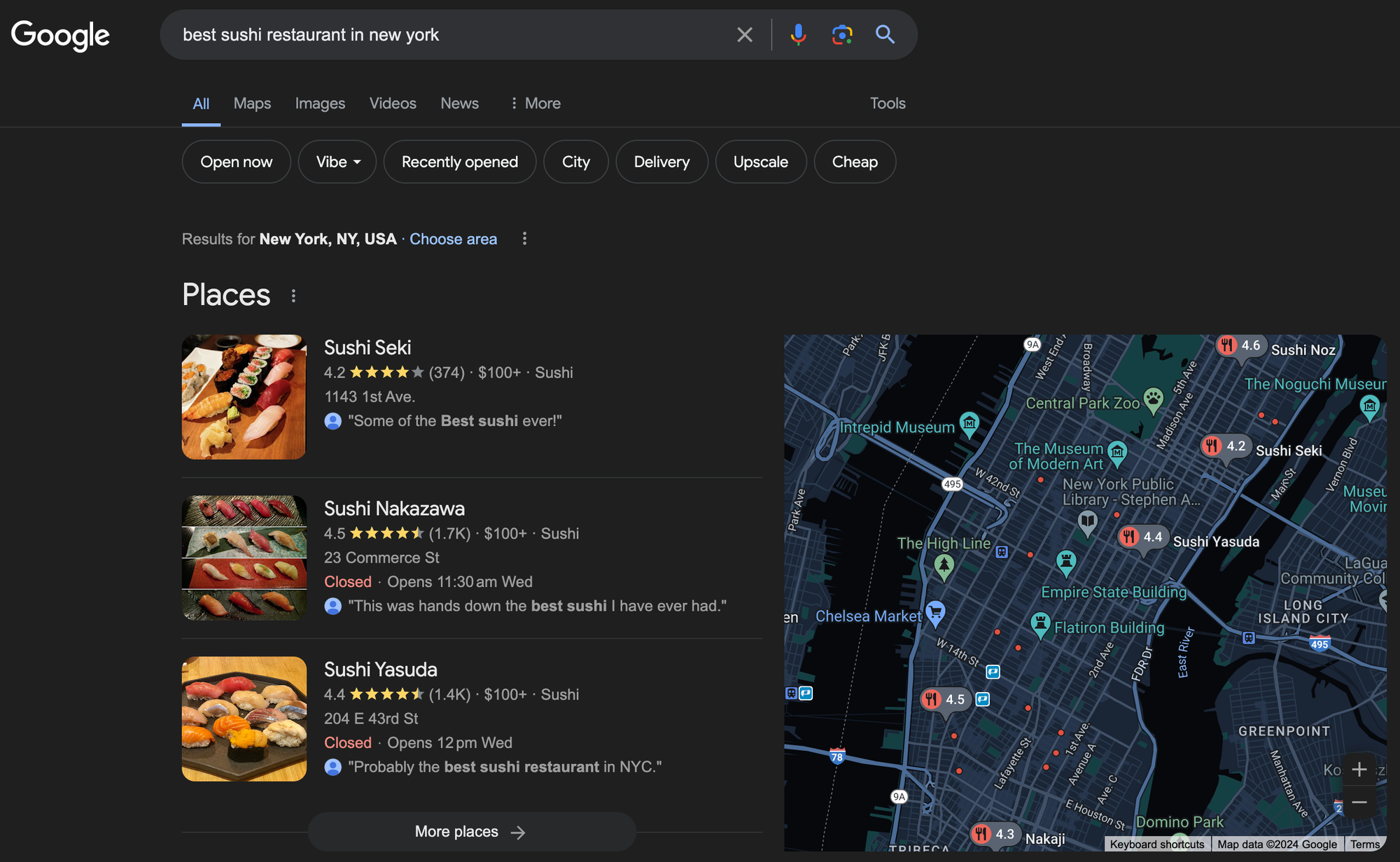

When asked about the best sushi restaurant to dine at, the results are filtered based on user comments mentioning ‘best sushi’. That is fine, but such a comment appears for every restaurant shown, so it does not help tell them apart. It is therefore still necessary to go through each restaurant’s reviews to accurately gauge the aspects you probably care about, such as service quality, cleanliness, and freshness.

A DIY Solution

At SerpApi, we help users scrape Google and other search engines. We have good coverage of Google data, including Google Search, Google Maps, Google Maps Reviews, Google Trends, Google Flights, Google Hotels, and many others. This gave me the idea that I could build a simple DIY solution using the data we can access.

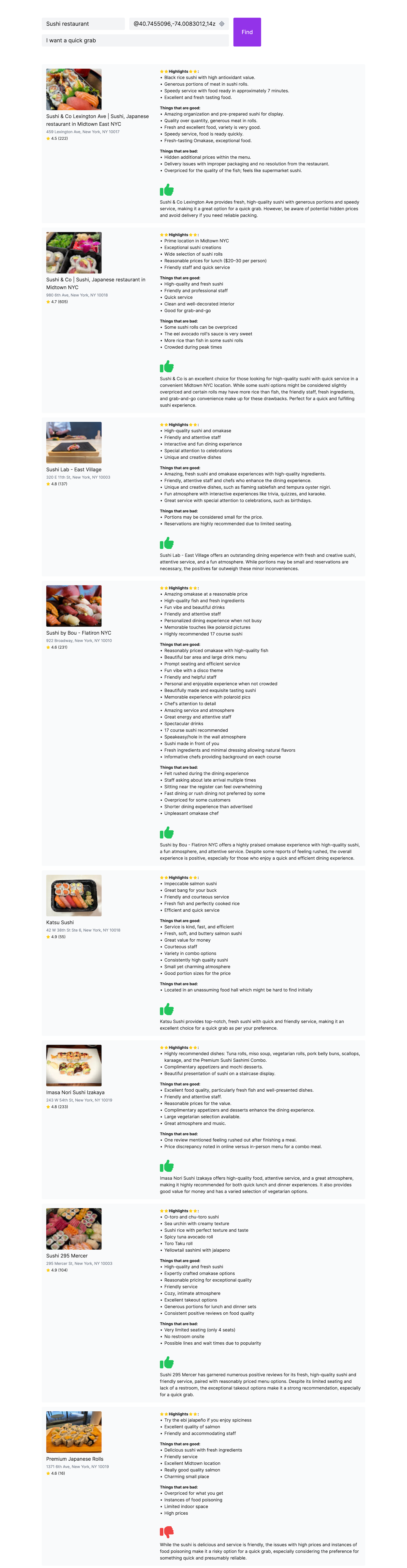

This DIY solution is made possible with OpenAI’s GPT-4 and SerpApi’s Google Maps and Google Maps Reviews APIs. We first retrieve the places and reviews using SerpApi’s API and then pass each place’s data to OpenAI’s GPT-4 for summarization.
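The retrieval step described above can be sketched roughly as follows. This is a minimal sketch under assumptions, not the code from the repository: the function names `fetch_json` and `collect_places`, the `max_places` cap, and the shape of the dict handed to the LLM are hypothetical, and the response fields used (`local_results`, `data_id`, `title`, `rating`, `reviews`, `snippet`) are assumed to match what SerpApi's Google Maps and Google Maps Reviews APIs return.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, List

SERPAPI_URL = "https://serpapi.com/search.json"


def fetch_json(params: dict) -> dict:
    """GET a SerpApi endpoint and decode the JSON body."""
    url = f"{SERPAPI_URL}?{urllib.parse.urlencode(params)}"
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def collect_places(query: str, ll: str, api_key: str,
                   fetch: Callable[[dict], dict] = fetch_json,
                   max_places: int = 5) -> List[dict]:
    """Return one compact dict per place, ready to pass to the LLM."""
    # Step 1: search Google Maps for places matching the keyword.
    search = fetch({"engine": "google_maps", "type": "search",
                    "q": query, "ll": ll, "api_key": api_key})
    places = []
    for place in search.get("local_results", [])[:max_places]:
        # Step 2: fetch the reviews for each place by its data_id.
        reviews = fetch({"engine": "google_maps_reviews",
                         "data_id": place["data_id"], "api_key": api_key})
        places.append({
            "title": place.get("title"),
            "rating": place.get("rating"),
            # Keep only reviews that actually contain text.
            "reviews": [r.get("snippet") for r in reviews.get("reviews", [])
                        if r.get("snippet")],
        })
    return places
```

Passing `fetch` as a parameter keeps the network call swappable, so the pipeline can be exercised with canned responses before spending API credits.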

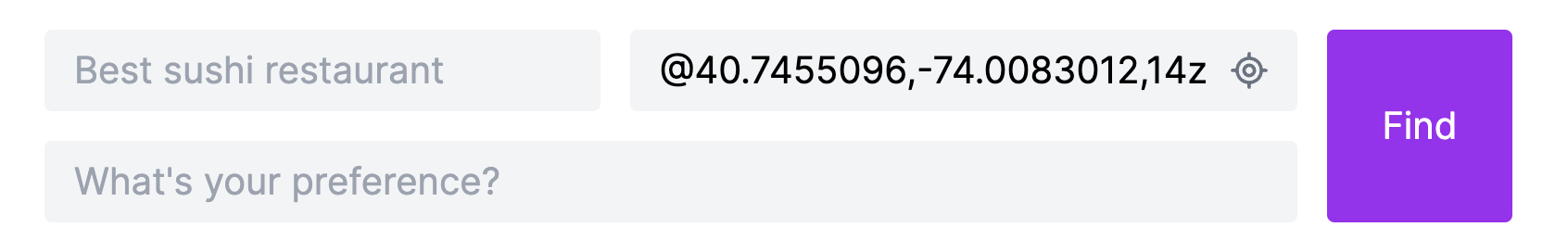

The inputs

Building a follow-up question UI could take some time to tweak and get working well. This UI serves as a proof of concept, mainly aiming to have the LLM perform the task for us. We have to provide the keyword we want to search for and the location as GPS coordinates. You could search for "Sushi restaurant", "Car Servicing", etc. The preference is optional, but it can improve the final result.
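The three inputs could be modelled like this. It is a minimal sketch: the `SearchInput` class, its field names, and the default zoom of 14 are assumptions for illustration, not taken from the repository. The `ll()` method formats the coordinates as `@latitude,longitude,zoom`, which is the form SerpApi's Google Maps API expects for its `ll` parameter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SearchInput:
    keyword: str                      # e.g. "Sushi restaurant"
    latitude: float
    longitude: float
    preference: Optional[str] = None  # optional free-text preference
    zoom: int = 14                    # assumed default map zoom

    def __post_init__(self) -> None:
        # Reject obviously invalid input before any API call is made.
        if not self.keyword.strip():
            raise ValueError("keyword must not be empty")
        if not -90 <= self.latitude <= 90:
            raise ValueError("latitude must be in [-90, 90]")
        if not -180 <= self.longitude <= 180:
            raise ValueError("longitude must be in [-180, 180]")

    def ll(self) -> str:
        """Format the coordinates for the Google Maps `ll` parameter."""
        return f"@{self.latitude},{self.longitude},{self.zoom}z"
```

For example, `SearchInput("Sushi restaurant", 40.7128, -74.006, "quick bite").ll()` produces a string that can be sent as `ll` in the search request.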

The results

Looking for a quick grab at a sushi restaurant in New York

Honest and on-time car servicing workshop in New York

This is great because I can now read through all the good and bad reviews at a glance for each place. An obvious improvement I should make is to attach a reference link to each line.

This is the prompt given to the LLM:

You’re an expert reviewer. You are great at looking at the reviews for a given place and summarizing the good and bad aspects. It is also great to show a highlights section to the user. Take user preference (if present) into consideration when deciding if you recommend or not. When a JSON is provided by the user, look for the good and bad points, then return the result in JSON format with new keys ‘good’ and ‘bad’.

Response format:

{ good: [string], bad: [string], highlights: [string], recommended: true/false, reason: string }

GitHub repository: https://github.com/tanys123/thegoto
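Since the model is asked to reply in the response format given in the prompt, it helps to check each reply before displaying it. The following is a minimal sketch under assumptions: `build_messages` and `parse_summary` are hypothetical helpers, the `user_preference` key is an illustrative way to pass the preference along, and the system/user message layout is the usual chat format rather than something confirmed by the repository.

```python
import json
from typing import Optional

LIST_KEYS = ("good", "bad", "highlights")


def build_messages(system_prompt: str, place: dict,
                   preference: Optional[str] = None) -> list:
    """Chat-style messages: the prompt as system, the place JSON as user."""
    payload = dict(place)
    if preference:
        payload["user_preference"] = preference  # hypothetical key name
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": json.dumps(payload, ensure_ascii=False)},
    ]


def parse_summary(raw: str) -> dict:
    """Parse the model reply and check it against the response format."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for key in LIST_KEYS:
        value = data.get(key)
        if not isinstance(value, list) or not all(isinstance(v, str) for v in value):
            raise ValueError(f"'{key}' must be a list of strings")
    if not isinstance(data.get("recommended"), bool):
        raise ValueError("'recommended' must be true or false")
    if not isinstance(data.get("reason"), str):
        raise ValueError("'reason' must be a string")
    return data
```

The list returned by `build_messages` is meant to be passed as the messages of a chat completion request, and `parse_summary` would then be applied to the text of the reply. A reply that fails validation could simply be retried or skipped.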

Conclusion

LLMs are a great piece of technology that can help improve everyone’s lives when implemented well. Google is most probably developing a ChatGPT-style version of Google Search, and hopefully it is going to be good. For now, I will rely on Perplexity.