For academic researchers and students, Google Scholar is a goldmine of scholarly articles, research papers, academic publications, journal articles, theses, and conference proceedings.

Scraping Google Scholar can provide data for research projects, citation analysis (identifying influential works and understanding the impact of research), literature reviews, bibliographic management, keyword and topic analysis, and more.

With SerpApi, you'll have every tool to harness the power of Google Scholar for your academic needs. Let's embark on this scholarly journey!

Setting up a SerpApi account

SerpApi offers a free plan for newly created accounts. Head to the sign-up page to register an account and complete your first search with our interactive playground. When you want to do more searches with us, please visit the pricing page.

Once you are familiar with the results, you can call SerpApi from your own code using your API key.
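Rather than hard-coding the key in your scripts, you may prefer to read it from an environment variable. The sketch below assumes a variable named `SERPAPI_API_KEY`; that name is our own hypothetical choice, not something SerpApi requires.

```python
import os


def get_api_key(var_name: str = "SERPAPI_API_KEY") -> str:
    """Return the SerpApi key stored in an environment variable.

    SERPAPI_API_KEY is a hypothetical variable name chosen for this sketch.
    """
    key = os.environ.get(var_name)
    if not key:
        # Fail early with a clear message instead of sending an empty key
        raise RuntimeError(f"Set the {var_name} environment variable to your SerpApi API key")
    return key
```

You could then write `params['api_key'] = get_api_key()` instead of pasting the key into the code.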

Scrape your first Google Scholar results with SerpApi

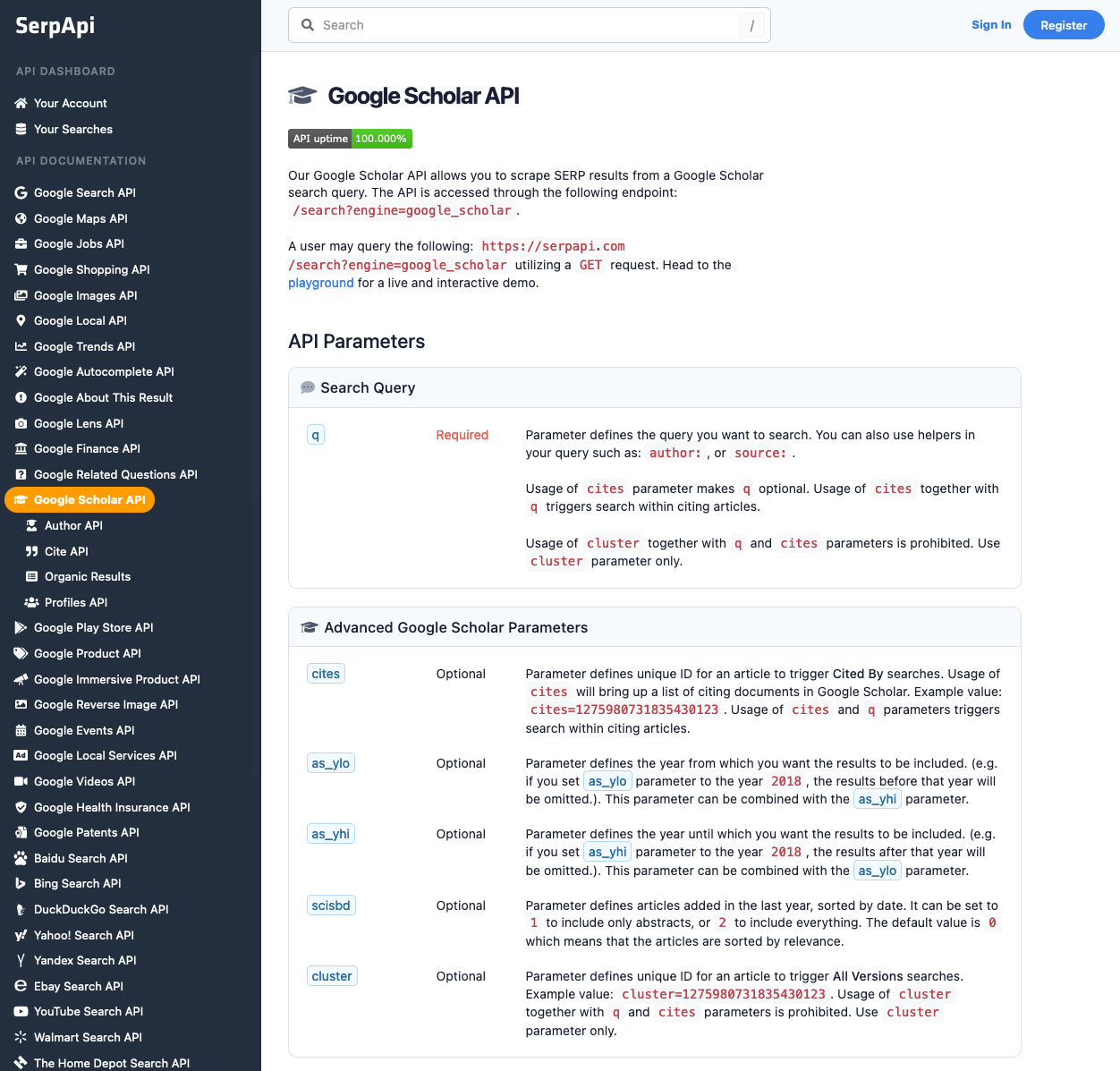

For details, see the Google Scholar Results page in the SerpApi documentation.

In this tutorial, we will scrape the organic results for the keyword "bacteria" and keep each result's position, title, link, description (returned by SerpApi as `snippet`), and author information (found under `publication_info`). Each result contains more fields than these, so you can extract additional information as needed.
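To make that field mapping concrete, here is a minimal sketch that flattens one organic result into a row. It assumes the SerpApi field names `snippet`, `publication_info.summary`, and `publication_info.authors` (a list of objects with a `name` key that, as we understand it, only appears for authors who have a Scholar profile). The helper name `to_row` is hypothetical.

```python
def to_row(item: dict) -> list:
    """Flatten one Google Scholar organic result into [position, title, link, description, author].

    Assumes SerpApi's field names: 'snippet' holds the description, and
    'publication_info' holds 'summary' and (sometimes) an 'authors' list.
    """
    pub = item.get("publication_info", {})
    # Join profiled author names; fall back to the free-text summary when the list is absent
    author_names = ", ".join(a.get("name", "") for a in pub.get("authors", []))
    return [
        item.get("position"),
        item.get("title"),
        item.get("link"),
        item.get("snippet"),
        author_names or pub.get("summary"),
    ]
```

Missing keys simply become `None`, so a sparse result never raises an exception.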

First, you need to install the SerpApi client library.

```bash
pip install google-search-results
```

Next, set up your SerpApi credentials and the search parameters.

```python
from serpapi import GoogleSearch

params = {
    'api_key': 'YOUR_API_KEY',  # your SerpApi API key
    'engine': 'google_scholar',  # SerpApi search engine
    'q': 'bacteria',  # search query
}
```
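If you need more than the first page of results, the same `params` dict can be extended once per page. This sketch assumes the Google Scholar engine's `start` (result offset) and `num` (results per page, capped at 20) parameters; the helper `paginated_params` is hypothetical.

```python
def paginated_params(base: dict, pages: int, page_size: int = 10) -> list:
    """Return one copy of `base` per results page, adding 'start' and 'num'.

    Assumes SerpApi's Google Scholar engine accepts 'start' as a result
    offset and 'num' as the page size (at most 20).
    """
    if not 1 <= page_size <= 20:
        raise ValueError("page_size must be between 1 and 20")
    # Copy the base dict so the caller's params are left unchanged
    return [{**base, "start": page * page_size, "num": page_size} for page in range(pages)]
```

Each dict could then be passed to `GoogleSearch(...)` in turn, concatenating the `organic_results` lists from every page.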

To retrieve Google Scholar Results for a given search query, you can use the following code:

```python
results = GoogleSearch(params).get_dict()['organic_results']
```

You can store the Scholar results JSON data in a database or export it to a CSV file.

```python
import csv

header = ['position', 'title', 'link', 'description', 'author']

with open('google_scholar.csv', 'w', encoding='utf-8', newline='') as f:
    writer = csv.writer(f)
    writer.writerow(header)

    for item in results:
        print(item)  # inspect each raw result
        writer.writerow([
            item.get('position'),
            item.get('title'),
            item.get('link'),
            item.get('snippet'),  # SerpApi returns the description as 'snippet'
            item.get('publication_info', {}).get('summary'),  # authors, venue, and year
        ])
```
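For the database option, a minimal sketch using Python's built-in `sqlite3` module might look like the following. The table name `scholar_results`, its columns, and the helper `save_to_sqlite` are hypothetical choices for illustration, not a SerpApi convention.

```python
import sqlite3


def save_to_sqlite(conn: sqlite3.Connection, results: list) -> int:
    """Insert Google Scholar organic results into a hypothetical 'scholar_results' table.

    Returns the number of rows inserted.
    """
    rows = [
        (
            item.get("position"),
            item.get("title"),
            item.get("link"),
            item.get("snippet"),
            item.get("publication_info", {}).get("summary"),
        )
        for item in results
    ]
    with conn:  # commits the transaction on success, rolls back on error
        conn.execute(
            "CREATE TABLE IF NOT EXISTS scholar_results ("
            "position INTEGER, title TEXT, link TEXT, snippet TEXT, publication_summary TEXT)"
        )
        conn.executemany("INSERT INTO scholar_results VALUES (?, ?, ?, ?, ?)", rows)
    return len(rows)
```

With the `results` list from above, you could call `save_to_sqlite(sqlite3.connect('google_scholar.db'), results)`. Passing the connection in keeps the function easy to test against an in-memory database.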

This example uses Python, but you can also use your favorite programming language, such as Ruby, Node.js, Java, or PHP.

If you have any questions, please feel free to contact me.