Bing Maps, similar to Google Maps, lets you browse local places all around the world. It is rich with data such as reviews, operating hours, business phone numbers, business websites, and much more. This article walks through the steps to scrape Bing Maps data.

Get the endpoint that loads the data

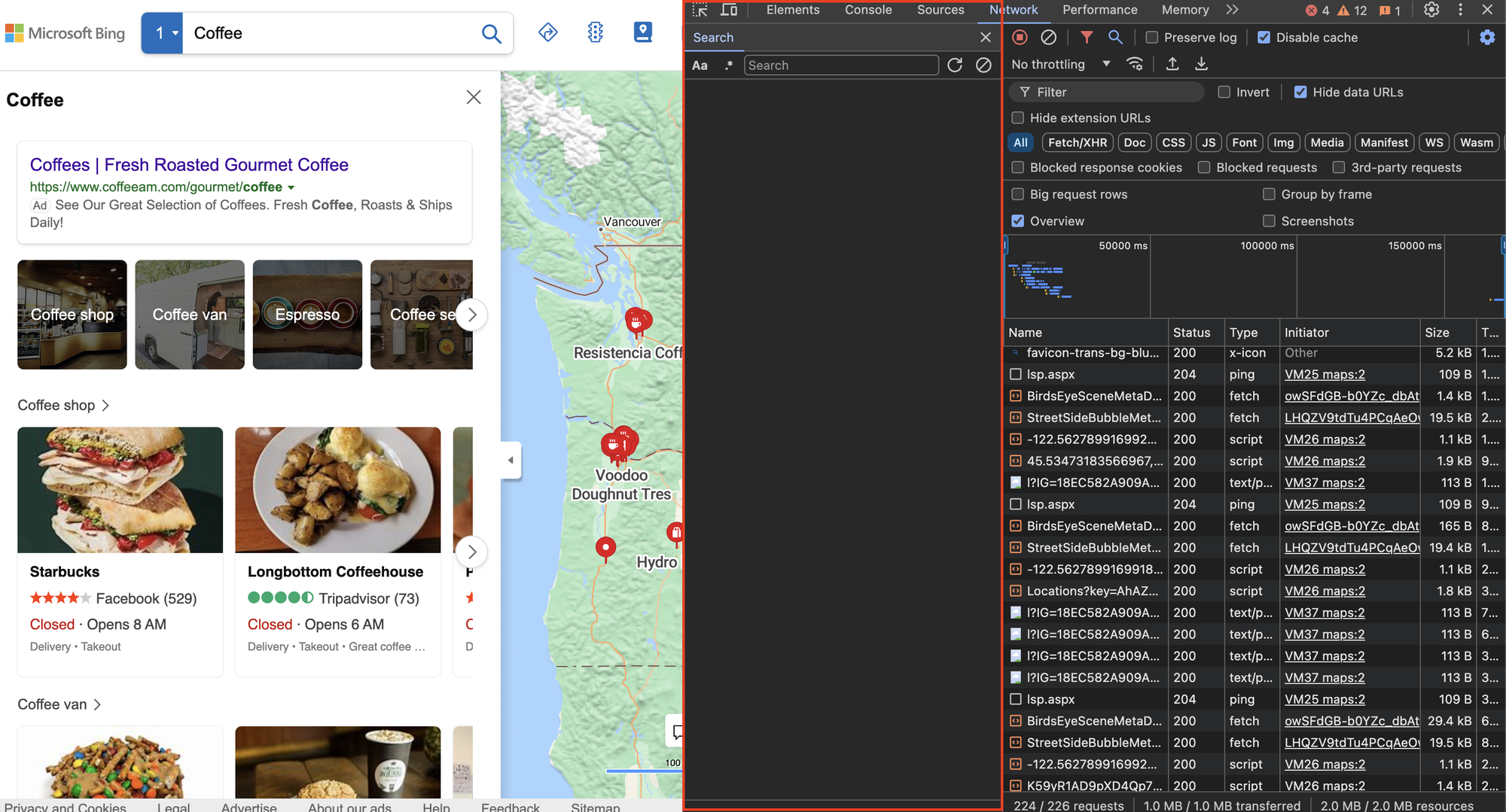

Bing Maps allows you to search using any keywords. We’re interested in the results that appear when you use specific keywords. To do this, search for places as you normally would, but keep the Chrome DevTools Network tab open.

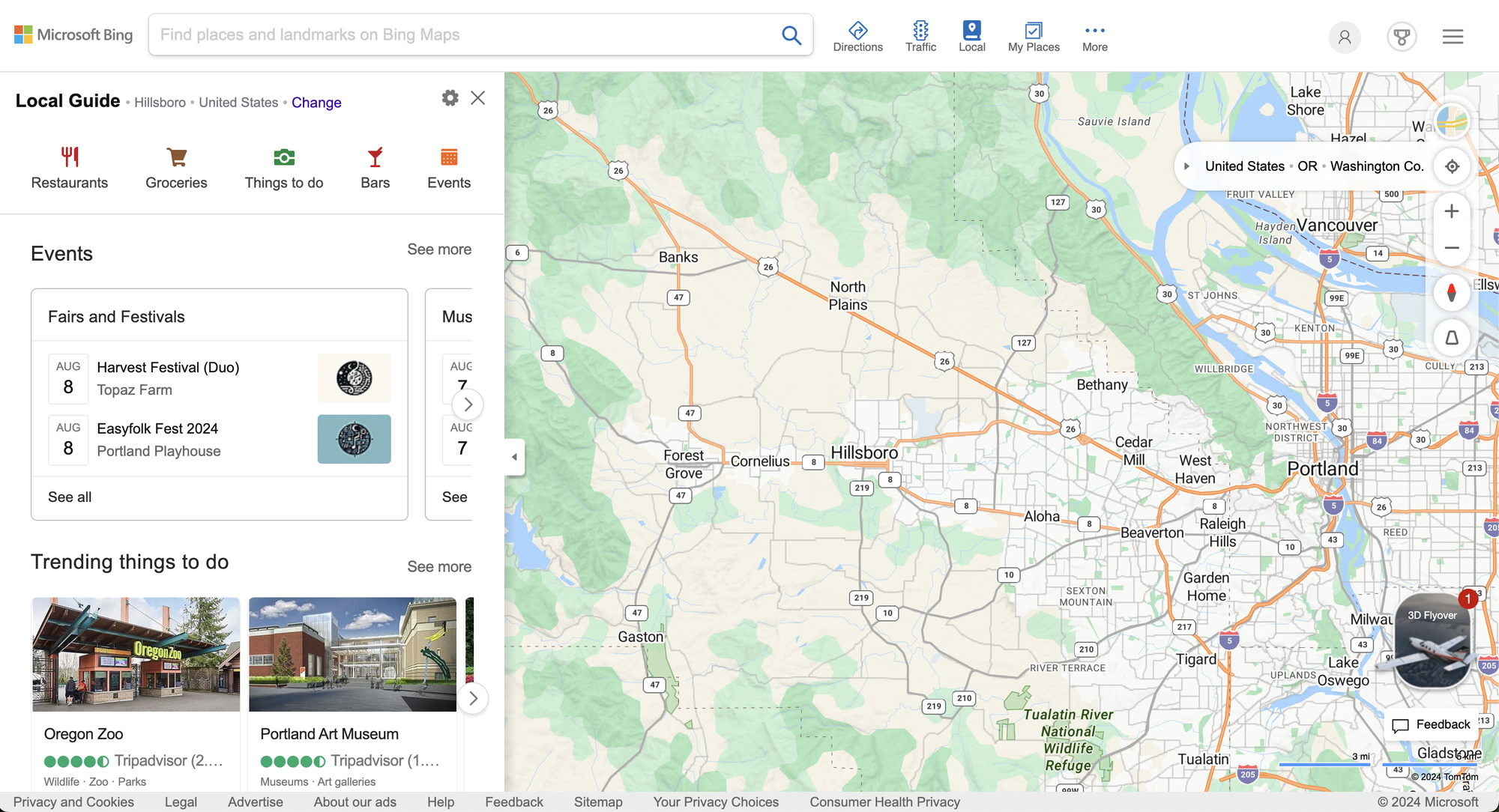

Visit https://www.bing.com/maps. It is the entry point that loads everything else.

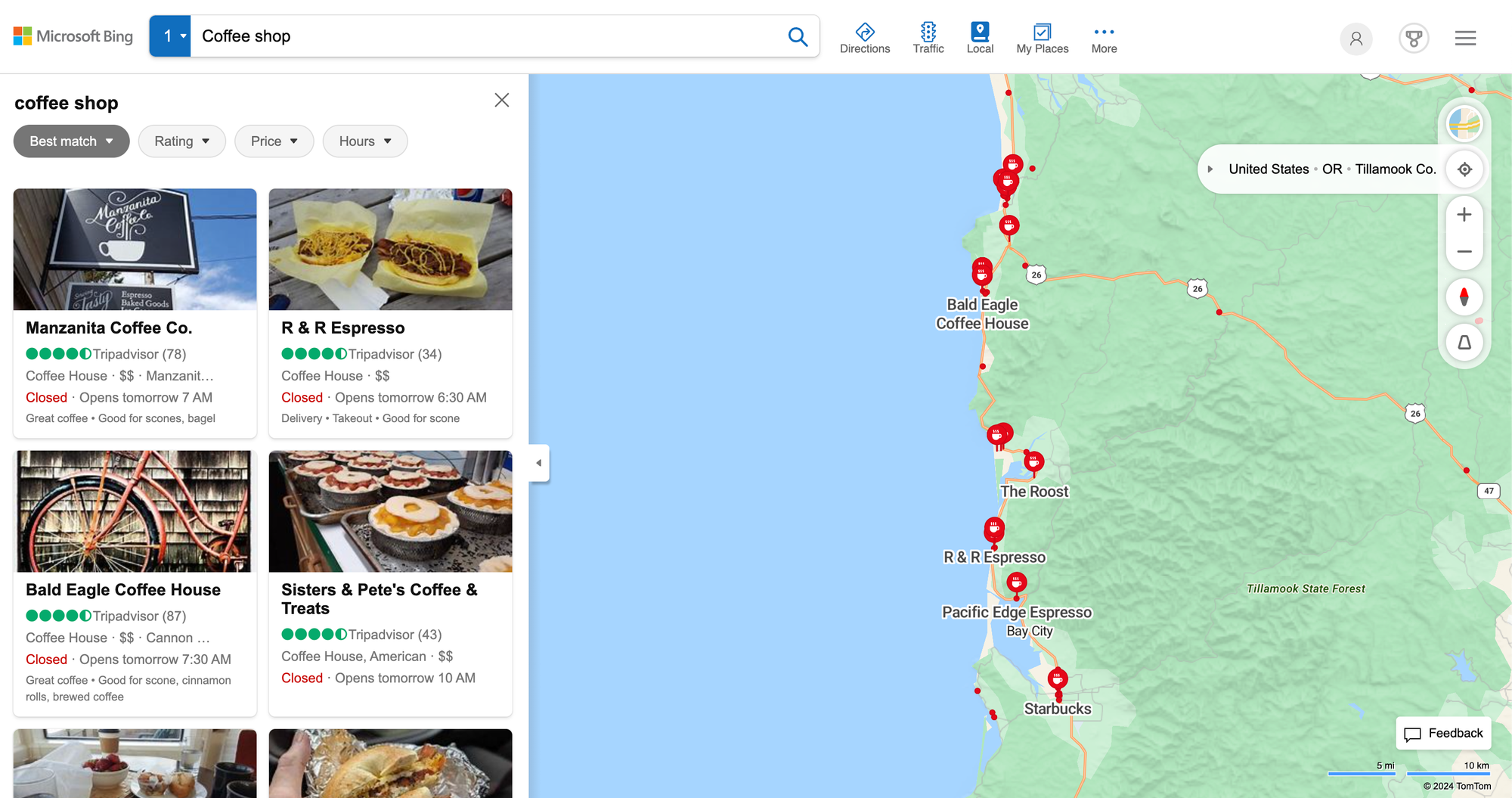

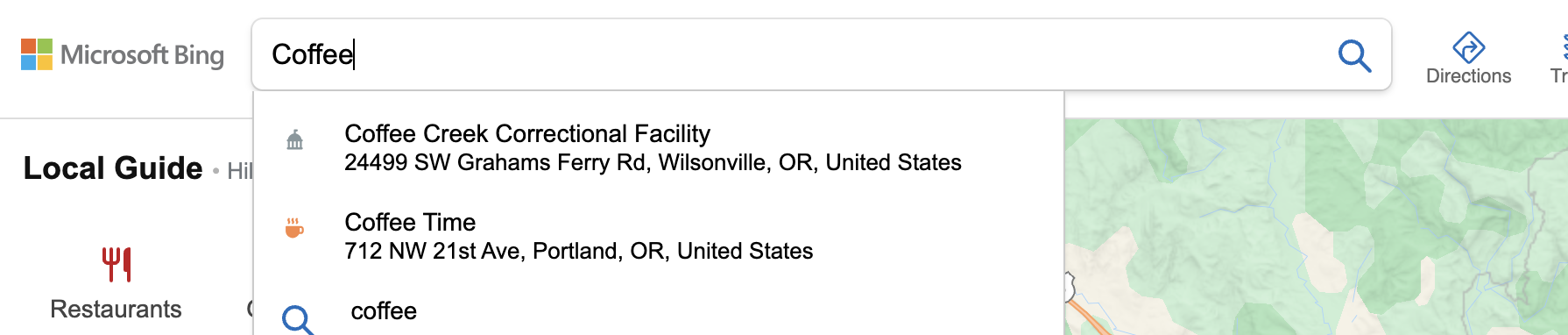

Then, in the search box, type in Coffee (or any term you prefer) and press Enter.

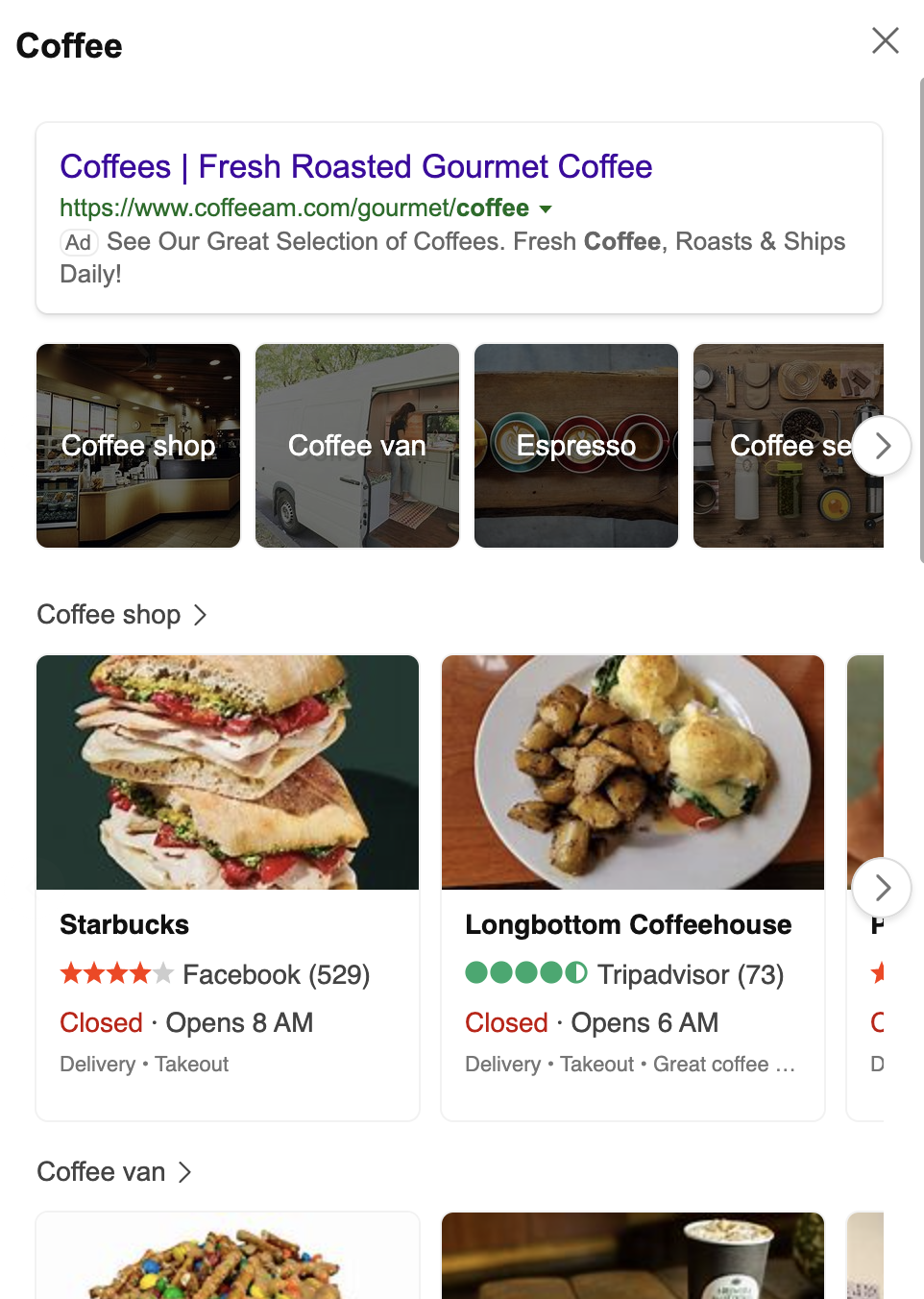

Here are the results I got for the search keyword Coffee; these are the results we want to scrape.

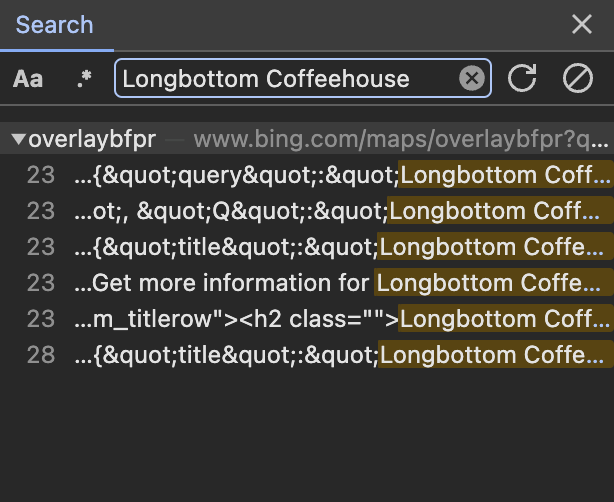

Since we have the Network tab open, it will record every request. Now, we need to find the request that loaded the results. Chrome DevTools is very effective in the search department. Visit the Network tab and press CMD/CTRL + F to bring up the search panel. This panel will go through each of the requests and find the keyword we provide. It checks the request’s URL, headers, parameters, response—basically everything.

With this, we can narrow down the request that returns the data. I like to use uncommon keywords, as they help identify the specific request quickly. We found Longbottom Coffeehouse in the response body of the overlaybfpr request, so this looks to be the endpoint we want.

Nice! We now know that the endpoint to retrieve the results is https://www.bing.com/maps/overlaybfpr.

Once we know the endpoint, I like to copy it into Postman to experiment with it. The goal is to understand all the parameters and identify which ones are unnecessary for a successful request and should be discarded. It is good practice to reduce the parameters to the minimum necessary.
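The trial-and-error reduction described above can also be sketched in code. The following is a minimal sketch, not the exact procedure I followed in Postman: `minimize_params` is a hypothetical helper that removes one parameter at a time and keeps the removal only if the supplied block still reports success. The `STAND_IN_CHECK` lambda is an assumption that stands in for a real request; in practice the block would send the request and check that result cards come back.

```ruby
# Greedy parameter reduction (hypothetical helper): try removing each
# parameter in turn and keep the removal only if the block says the
# request still works without it.
def minimize_params(params)
  kept = params.dup
  params.each_key do |key|
    candidate = kept.reject { |k, _| k == key }
    kept = candidate if yield(candidate)
  end
  kept
end

# A few of the parameters captured from the browser request.
CAPTURED_PARAMS = {
  'q' => 'Coffee',
  'filters' => 'direction_partner:"maps"',
  'appid' => 'E18E19EF-764F-41A9-B53E-6E98AE519695',
  'count' => '18',
  'first' => '0',
  'FORM' => 'MPSRBX',
  'ads' => '1',
  'cp' => '45.53473183566967~-122.89718627929688'
}.freeze

# Stand-in for a real request (assumption): pretend the request succeeds
# only while these five parameters are all present.
NEEDED = %w[q filters count first cp].freeze
STAND_IN_CHECK = ->(candidate) { NEEDED.all? { |k| candidate.key?(k) } }

minimal = minimize_params(CAPTURED_PARAMS) { |candidate| STAND_IN_CHECK.call(candidate) }
puts minimal.keys.join(', ')
# prints "q, filters, count, first, cp"
```

With a real check, keep the number of requests small and add a pause between them, since every candidate costs one request.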

Not every site is as straightforward as this. Some websites implement security mechanisms to prevent scraping. However, always remember that if the data can be seen on your screen in your browser, there must be a way to access it, no matter how difficult it is.

After rounds of experiments in Postman, I was able to reduce the request parameters from:

curl 'https://www.bing.com/maps/overlaybfpr?q=Coffee&filters=MapCardType%3A%22unknown%22%20direction_partner%3A%22maps%22%20tid%3A%2218EC582A909A4FE587F1F58CA085D666%22&mapcardtitle=&appid=E18E19EF-764F-41A9-B53E-6E98AE519695&p1=&count=18&ecount=18&first=0&efirst=1&localMapView=45.62388270686944,-123.23158264160168,45.44543940974867,-122.56278991699222&cardtype=unknown&FORM=MPSRBX&ads=1&cp=45.53473183566967~-122.89718627929688&MCIG=18EC582A909A4FE587F1F58CA085D666'

down to:

curl --location 'https://www.bing.com/maps/overlaybfpr?q=Coffee&filters=direction_partner%3A%22maps%22&count=18&first=0&cp=45.53473183566967~-122.89718627929688'

The parameters we need are:

{
  "q": "String - Search query",
  "filters": "String (URL encoded) - Useful to find specific place",
  "count": "Integer - number of results to return per page",
  "first": "Integer - Results offset",
  "cp": "String - GPS coordinates"
}

Very cool 😃
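To double-check which parameters were dropped, the two URLs can be decoded and compared. This is a small sketch using only Ruby's standard library; the helper names `query_params` and `dropped_keys` are mine and purely illustrative.

```ruby
require 'uri'

# The request captured from the browser and the reduced request.
FULL_URL = 'https://www.bing.com/maps/overlaybfpr?q=Coffee&filters=MapCardType%3A%22unknown%22%20direction_partner%3A%22maps%22%20tid%3A%2218EC582A909A4FE587F1F58CA085D666%22&mapcardtitle=&appid=E18E19EF-764F-41A9-B53E-6E98AE519695&p1=&count=18&ecount=18&first=0&efirst=1&localMapView=45.62388270686944,-123.23158264160168,45.44543940974867,-122.56278991699222&cardtype=unknown&FORM=MPSRBX&ads=1&cp=45.53473183566967~-122.89718627929688&MCIG=18EC582A909A4FE587F1F58CA085D666'
REDUCED_URL = 'https://www.bing.com/maps/overlaybfpr?q=Coffee&filters=direction_partner%3A%22maps%22&count=18&first=0&cp=45.53473183566967~-122.89718627929688'

# Return the query parameters of a URL as a Hash of decoded strings.
def query_params(url)
  URI.decode_www_form(URI(url).query.to_s).to_h
end

# Names of the parameters present in the full URL but not in the reduced one.
def dropped_keys(full_url, reduced_url)
  query_params(full_url).keys - query_params(reduced_url).keys
end

puts dropped_keys(FULL_URL, REDUCED_URL).join(', ')
# prints "mapcardtitle, appid, p1, ecount, efirst, localMapView, cardtype, FORM, ads, MCIG"

puts query_params(REDUCED_URL)['filters']
# prints direction_partner:"maps"
```

Note that `filters` survives the reduction but its value shrinks: only the `direction_partner` part is kept.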

The same endpoint can also be used to find a specific place; that's where the filters parameter is used. Here is the request that retrieves Longbottom Coffeehouse details:

curl --location 'https://www.bing.com/maps/overlaybfpr?filters=direction_partner%3A%22maps%22%20local_ypid%3A%22YN719x12441851%22&count=18&first=0&cp=45.53473183566967~-122.89718627929688'

The filters parameter before encoding:

'direction_partner:"maps" local_ypid:"YN719x12441851"'

Parsing the data

The response is in HTML format, so we need to parse it with an HTML parser library. In this tutorial, I will showcase the code in Ruby; feel free to ping me if you want to see the code in another programming language. We will be using Nokolexbor as the HTML parser library; you can find the install instructions in its repository.

First, we import the libraries. Then we define the parameters.

require 'net/http'
require 'uri'
require 'nokolexbor'

params = {
  q: 'Coffee',
  # Pass the raw value: URI.encode_www_form (used below) percent-encodes it,
  # so escaping it here as well would double-encode it.
  filters: 'direction_partner:"maps"',
  count: 18, # `count` does not apply in every case; it depends on how Bing Maps renders the layout
  first: 0,
  cp: '45.53473183566967~-122.89718627929688'
}

Next, make the request to the endpoint we identified, using the parameters.

uri = URI('https://www.bing.com/maps/overlaybfpr')

uri.query = URI.encode_www_form(params)

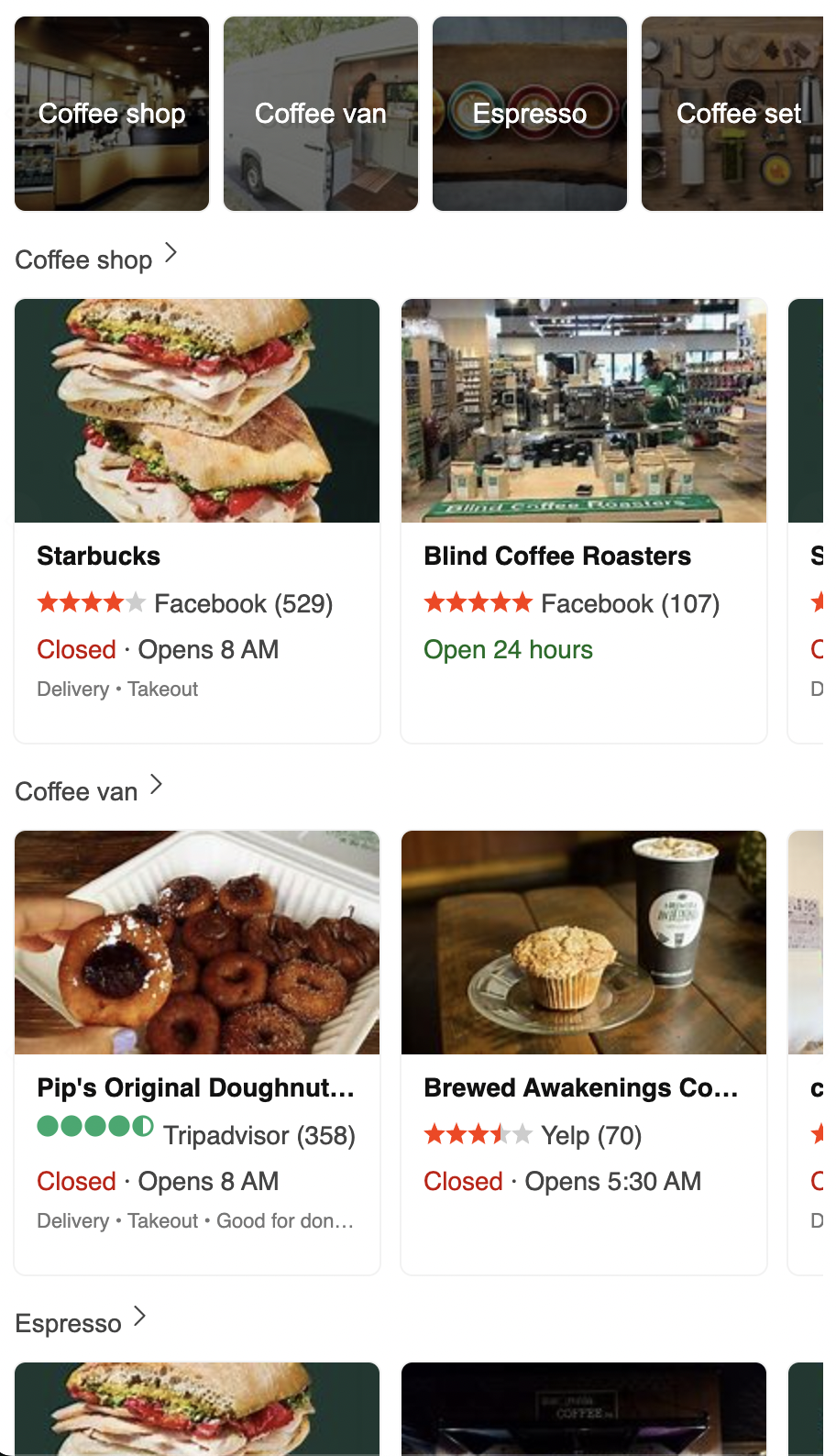

response = Net::HTTP.get_response(uri)The result that I got from the above operations.
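The request-building step can be wrapped in a small helper that also covers the specific-place lookup and paging. This is a sketch under a few assumptions: the names `search_uri`, `page_uris`, and `fetch_body` are hypothetical, the place lookup omits `q` as in the curl example earlier, and paging assumes that `first` advances by `count` from one page to the next, which I have not verified for every layout. The filter value is passed raw because `URI.encode_www_form` already percent-encodes each value.

```ruby
require 'net/http'
require 'uri'

ENDPOINT = 'https://www.bing.com/maps/overlaybfpr'

# Build the request URI for a keyword search, or for one place when `ypid`
# is given (hypothetical helper).
def search_uri(cp:, query: nil, ypid: nil, first: 0, count: 18)
  filters = ['direction_partner:"maps"']
  filters << %(local_ypid:"#{ypid}") if ypid

  params = {}
  params[:q] = query if query
  params.merge!(filters: filters.join(' '), count: count, first: first, cp: cp)

  uri = URI(ENDPOINT)
  uri.query = URI.encode_www_form(params) # encodes each value exactly once
  uri
end

# One URI per page, assuming the offset `first` advances by `count`.
def page_uris(pages:, count: 18, **opts)
  (0...pages).map { |page| search_uri(first: page * count, count: count, **opts) }
end

# Fetch the HTML body and fail loudly on a non-2xx response.
def fetch_body(uri)
  response = Net::HTTP.get_response(uri)
  raise "Unexpected status #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  response.body
end

# Usage (performs network requests, so it is left commented out):
# cp = '45.53473183566967~-122.89718627929688'
# html = fetch_body(search_uri(cp: cp, query: 'Coffee'))
# place_html = fetch_body(search_uri(cp: cp, ypid: 'YN719x12441851'))
```

`URI.encode_www_form` writes a space as `+` rather than `%20`; both are standard form encodings of a space in a query string.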

Finally, we convert the HTML body into a Nokolexbor document, which allows us to find elements using CSS selectors. The code below retrieves the title, operating hours, and rating of each place.

doc = Nokolexbor::HTML(response.body)
places = doc.css('.lMCard')

places.each do |place|
  title = place.at_css('.lm_titlerow')&.text
  hours = place.at_css('.opHours')&.text
  rating = place.at_css('.csrc[aria-label]')&.[]('aria-label')
  rating = rating[/\d+(\.\d+)?/]&.to_f if rating

  puts "#{title} (#{rating || 'N/A'}) \n\s\s #{hours}\n\n"
end

Output:

Starbucks (4.0)

Closed · Opens 8 AM

Blind Coffee Roasters (5.0)

Open 24 hours

Starbucks (4.0)

Closed · Opens 5 AM

Peet's Coffee (4.5)

Closed · Opens 5 AM

Starbucks (4.5)

Closed · Opens 4:30 AM

Pip's Original Doughnuts & Chai (4.5)

Closed · Opens 8 AM

Brewed Awakenings Coffee Roasters (3.5)

Closed · Opens 5:30 AM

compass coffee (4.0)

Closed · Opens 7 AM

Brewed Cafe & Pub (4.5)

Closed · Opens 7 AM

Caravan Coffee (5.0)

Closed · Opens 7 AM

Starbucks (4.0)

Closed · Opens 5 AM

Insomnia Coffee Co., Beaverton (4.5)

Closed · Opens 6 AM

Starbucks (4.0)

Closed · Opens 6 AM

Starbucks (4.5)

Closed · Opens 4:30 AM

Espresso Me Service (N/A)

Closed · Opens 7 AM

Target (3.0)

Closed · Opens 8 AM

Walmart Supercenter (2.0)

Closed · Opens 6 AM

Sunrise Bagels Beaverton (4.5)

Closed · Opens 7 AM

The Tao of Tea (4.5)

Closed · Opens 11 AM

Cost Plus World Market (4.0)

Closed · Opens 10 AM

Hydro Flask (1.5)

Closed · Opens 8 AM

Crate & Barrel (3.0)

Closed · Opens 10 AM

Walmart Supercenter (2.5)

Closed · Opens 6 AM

Walmart (N/A)

Closed · Opens 6 AM

Full code

require 'net/http'
require 'uri'
require 'nokolexbor'

params = {
  q: 'Coffee',
  # Raw value: URI.encode_www_form percent-encodes it, so it must not be escaped twice
  filters: 'direction_partner:"maps"',
  count: 18,
  first: 0,
  cp: '45.53473183566967~-122.89718627929688'
}

uri = URI('https://www.bing.com/maps/overlaybfpr')
uri.query = URI.encode_www_form(params)
response = Net::HTTP.get_response(uri)

doc = Nokolexbor::HTML(response.body)
places = doc.css('.lMCard')

places.each do |place|
  title = place.at_css('.lm_titlerow')&.text
  hours = place.at_css('.opHours')&.text
  rating = place.at_css('.csrc[aria-label]')&.[]('aria-label')
  rating = rating[/\d+(\.\d+)?/]&.to_f if rating

  puts "#{title} (#{rating || 'N/A'}) \n\s\s #{hours}\n\n"
end

Thank you for following along; I hope you found it useful. If you have any questions, feel free to reach out to me. Happy scraping.
