Introduction

Coffee shops are booming in my area. Every month, I hear about a new one opening, which makes me wonder:

Will these businesses last long? Or are they just part of a seasonal hype?

This curiosity inspired me to create an AI agent that can explore the coffee shop market and predict its potential success. While I'm focusing on coffee shops in this project, the same framework can be applied to other businesses, such as restaurants, salons, gyms, and retail stores. However, because LLMs are limited by their knowledge cutoff dates, having access to real-time data is crucial for obtaining the most up-to-date insights.

Traditionally, entrepreneurs relied on guesswork, small surveys, or costly market research. Now, thanks to tools like Google Maps and Google Reviews, we can access real-time information to guide better decisions.

In this project, we will use these two API solutions from SerpApi to help us gather data easily:

Google Maps API: To scrape business listings and locations.

Google Maps Reviews API: To extract detailed customer reviews and ratings.

Check out the tutorial below on how you can get started using SerpApi's Google Maps API and Google Maps Reviews API using Python.

Comprehensive Getting Started Tutorial

Please keep in mind that this AI agent is intended for educational purposes only. While it provides useful insights, predictions may not always be entirely accurate. To get the best results, it's a good idea to combine AI insights with your own careful market research.

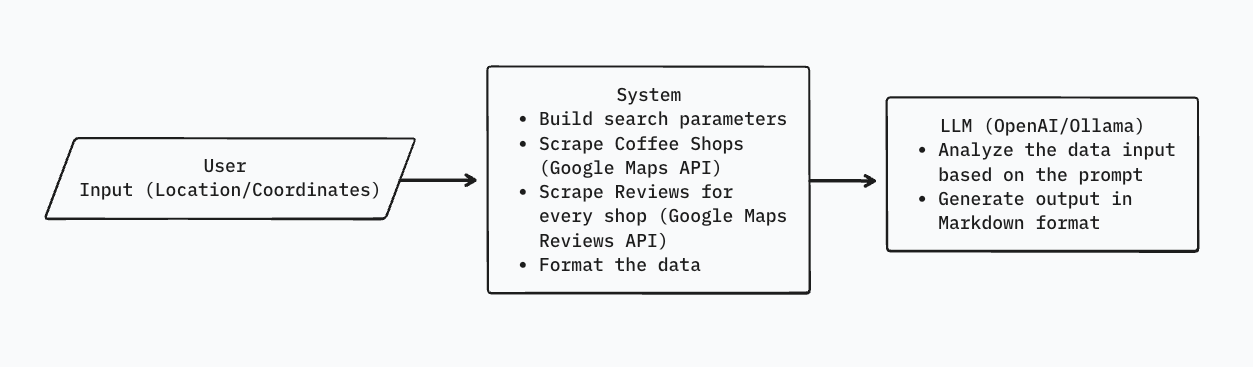

How the AI Agent Works

- Search for coffee shops using SerpApi's Google Maps API

  - Input either a location (e.g., "Austin, TX") or GPS coordinates.

  - Scrape all the local coffee shops with metadata.

- Pull reviews with SerpApi's Google Maps Reviews API

  - Extract customer opinions, ratings, and timestamps for each competitor.

- AI-Powered Analysis

  - Summarize competitor density and pricing tiers.

  - Perform sentiment analysis on customer reviews.

  - Predict the success probability of a new coffee shop in the area.

  - Output structured tables and an action plan in a clean Markdown file.

Using SerpApi to scrape data from Google Maps

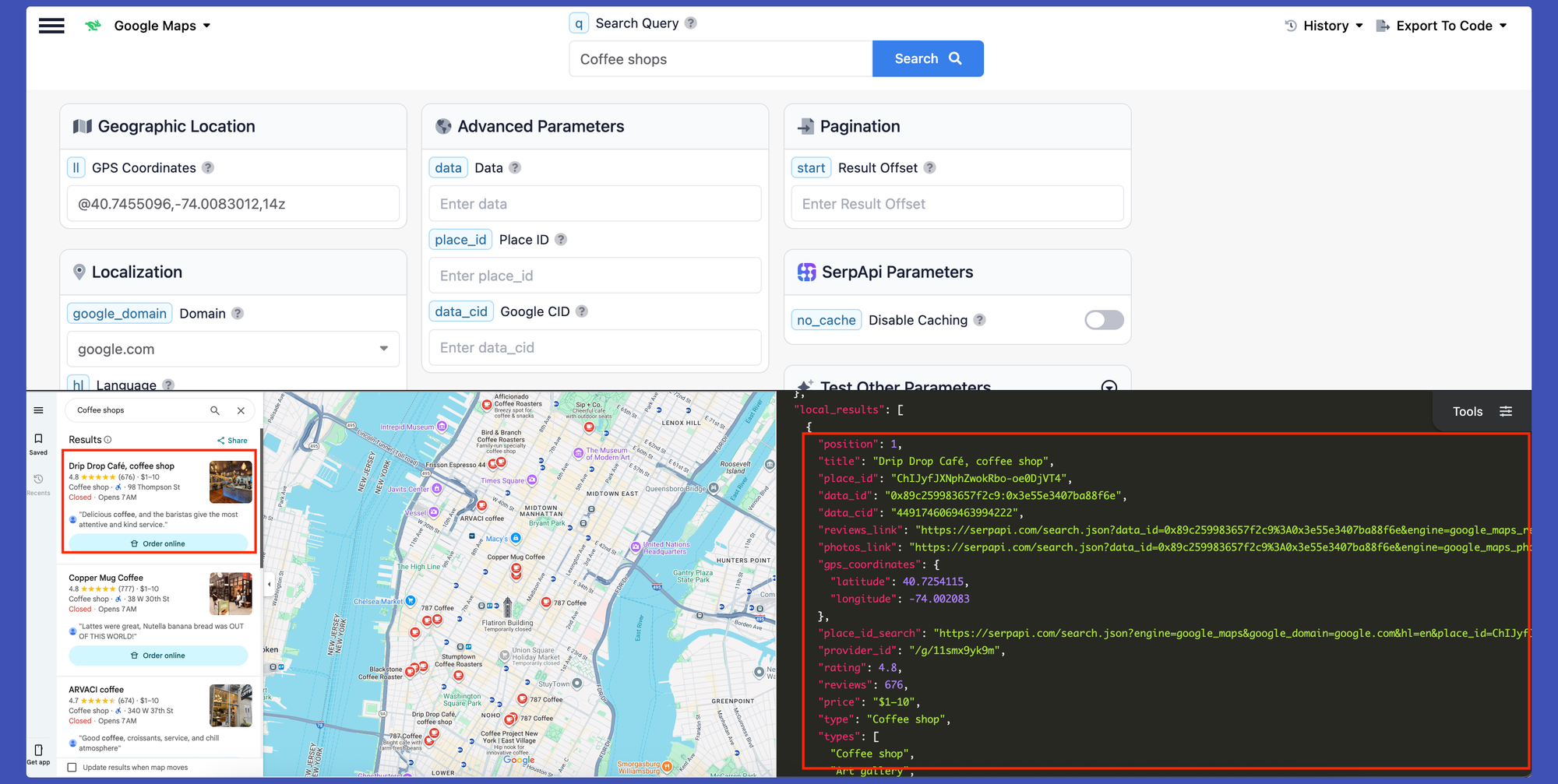

SerpApi's Google Maps API allows us to gather structured data from Google Maps quickly and easily, without the hassle of CAPTCHAs, proxies, or manual scraping.

With just one API request, you can access a wealth of information. Feel free to explore our Google Maps API playground to see what data you can retrieve. Below, there's a sample screenshot from our playground to give you an idea.

However, this is the data we need:

- title

- data_id (We need this information to be able to retrieve the reviews later)

- gps_coordinates

- address

- rating

- reviews

- price

- operating_hours

All data will be passed to the AI model for analysis, except for the data_id, which is required to scrape Google Maps Reviews.

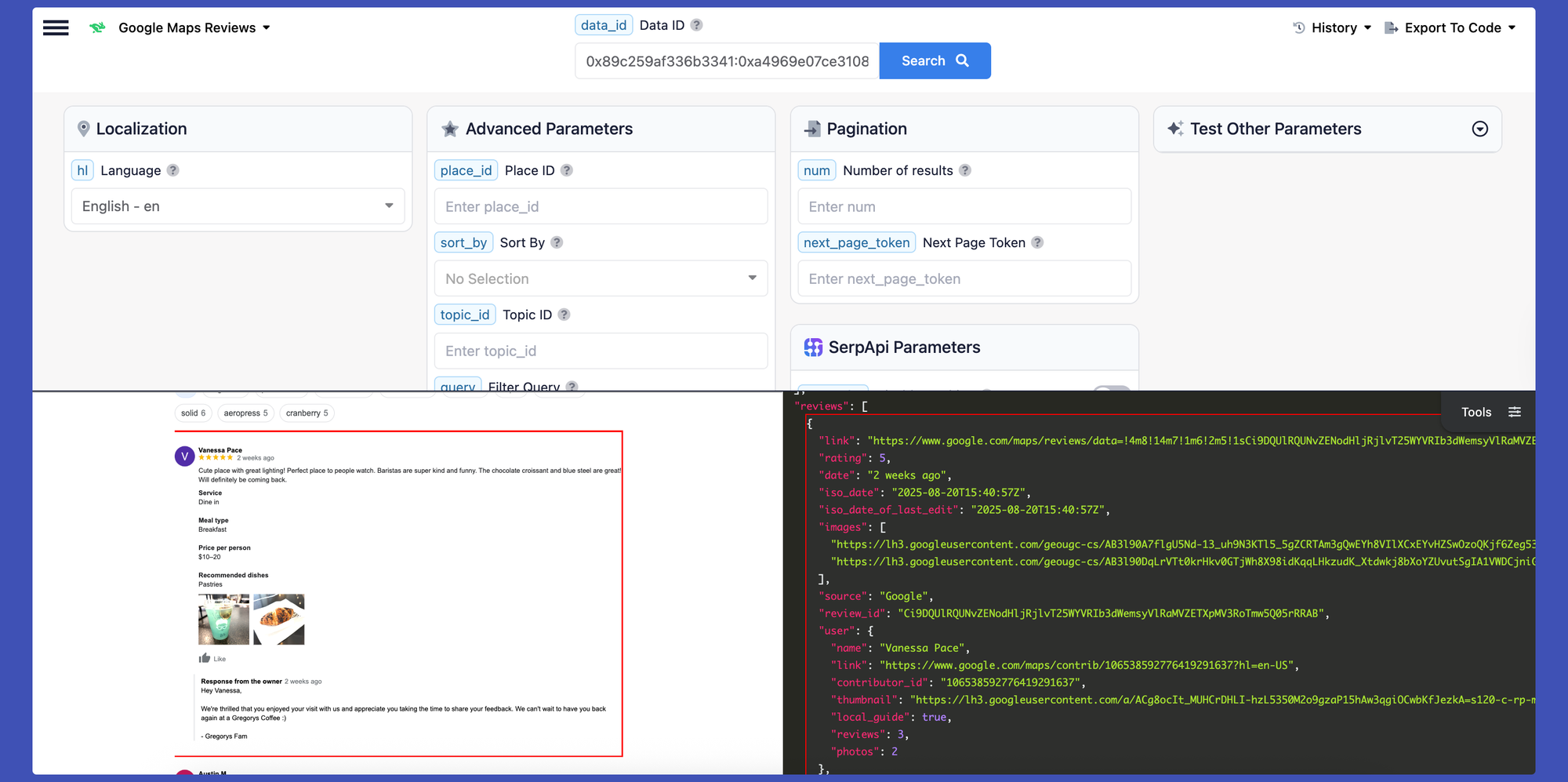

Beyond ratings, reviews tell the real story. Using SerpApi's Google Maps Reviews API, we can pull recent customer feedback for each competitor. You can visit our Google Maps Reviews API playground to explore the additional information we can collect.

The data that we're interested in collecting:

- snippet, which is the customer's review

- rating

- date

Set up the scraper

Prerequisites

- Python 3.9+

- Install dependencies:

    pip install google-search-results openai python-dotenv requests

- A SerpApi API key (sign up here to get 250 free searches/month).

- An OpenAI API key, or the gpt-oss model downloaded locally via Ollama.

- Create a .env file with your API keys:

    SERPAPI_API_KEY=your_serpapi_key_here
    OPENAI_API_KEY=your_openai_key_here # If you're using OpenAI

Import the necessary libraries

Before we begin, let's import the necessary libraries for making API calls, logging, managing environment variables, and handling dates and times.

    import os
    import json
    import time
    import logging
    from datetime import datetime
    from dotenv import load_dotenv
    from serpapi import GoogleSearch
    from openai import OpenAI
    import requests

For our convenience, we will create a log to record debug information. It will be saved in the debug.log file.

    logger = logging.getLogger("coffee_agent")
    logger.setLevel(logging.DEBUG)

    file_handler = logging.FileHandler('debug.log')
    file_handler.setFormatter(logging.Formatter('%(asctime)s %(levelname)s: %(message)s', datefmt='%Y-%m-%d %H:%M:%S'))
    logger.addHandler(file_handler)

    stream_handler = logging.StreamHandler()
    stream_handler.setFormatter(logging.Formatter('%(asctime)s %(levelname)s: %(message)s', datefmt='%Y-%m-%d %H:%M:%S'))
    logger.addHandler(stream_handler)

Scrape data from Google Maps

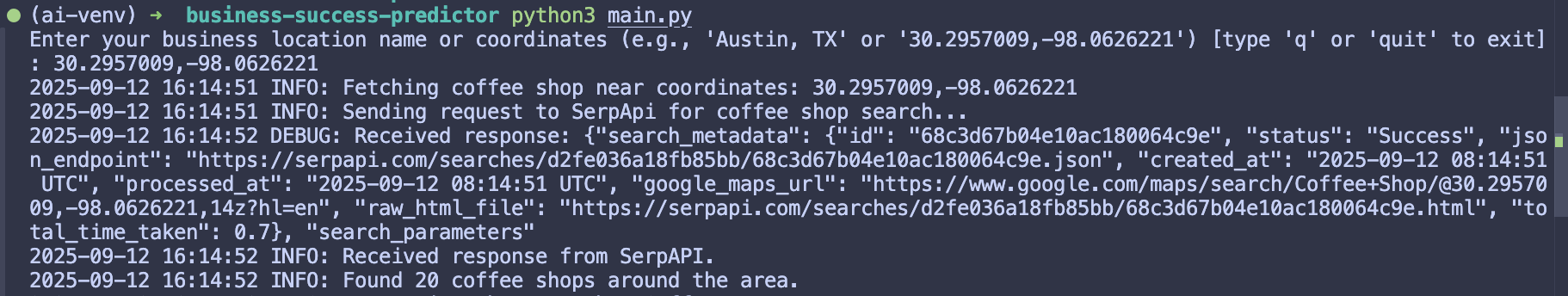

The first step is to query Google Maps for coffee shops around a given location or coordinates.

We define a helper function to build the search parameters:

    BUSINESS_TYPE = "Coffee Shop"

    def get_search_params(user_input, api_key):
        if "," in user_input and all(part.replace('.', '', 1).replace('-', '', 1).isdigit() for part in user_input.split(',')):
            logger.info(f"Fetching {BUSINESS_TYPE.lower()} near coordinates: {user_input}")
            return {
                "engine": "google_maps",
                "q": BUSINESS_TYPE,
                "ll": f"@{user_input},14z",
                "api_key": api_key
            }
        else:
            logger.info(f"Fetching {BUSINESS_TYPE.lower()} in location: {user_input}")
            return {
                "engine": "google_maps",
                "q": f"{BUSINESS_TYPE} in {user_input}",
                "api_key": api_key
            }

This function builds the right parameters depending on whether the user types a city name or latitude/longitude coordinates.

You can change the value for BUSINESS_TYPE based on your business of interest.
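The coordinate check in `get_search_params` is string-based, so an input such as `30.29, -98.06` (with a space after the comma) is treated as a place name. As a hedged alternative, here is a minimal sketch that parses the pair with `float()` and checks the valid latitude/longitude ranges. The helper names `parse_coordinates` and `build_ll` are hypothetical and not part of the original script.

```python
from typing import Optional, Tuple


def parse_coordinates(user_input: str) -> Optional[Tuple[float, float]]:
    """Return (latitude, longitude) if the input looks like a coordinate pair, else None."""
    parts = [part.strip() for part in user_input.split(",")]
    if len(parts) != 2:
        return None
    try:
        lat, lng = float(parts[0]), float(parts[1])
    except ValueError:
        # Not numeric, e.g. "Austin, TX": treat it as a place name.
        return None
    # Reject values outside the valid ranges (this also filters "nan" and "inf").
    if not (-90.0 <= lat <= 90.0 and -180.0 <= lng <= 180.0):
        return None
    return lat, lng


def build_ll(lat: float, lng: float, zoom: int = 14) -> str:
    """Format the `ll` value the same way get_search_params does: "@lat,lng,14z"."""
    return f"@{lat},{lng},{zoom}z"
```

If `parse_coordinates` returns a pair, you could pass `build_ll(*pair)` as the `ll` parameter; otherwise fall back to the "`{BUSINESS_TYPE} in {user_input}`" query.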

Now let's use it to scrape nearby coffee shops:

    def fetch_shops_details(search_params):
        logger.info(f"Sending request to SerpApi for {BUSINESS_TYPE.lower()} search...")
        response = requests.get("https://serpapi.com/search", params=search_params)
        data = response.json()
        logger.debug(f"Received response: {json.dumps(data)[:500]}")
        logger.info("Received response from SerpApi.")
        local_results = data.get("local_results", [])
        logger.info(f"Found {len(local_results)} {BUSINESS_TYPE.lower()}s around the area.")
        return local_results

This part of the script sends a request to SerpApi's Google Maps API to retrieve local business results based on the area specified by the user's input.

Visit our Google Maps API documentation for more information.
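`fetch_shops_details` returns an empty list both when the area has no coffee shops and when the request itself failed (for example, because of an invalid API key). Here is a minimal sketch of a stricter parsing step, assuming that a failed search carries a top-level `error` message in the JSON body. The `SerpApiError` class and `extract_local_results` helper are hypothetical names used for illustration only.

```python
from typing import Any, Dict, List


class SerpApiError(RuntimeError):
    """Raised when the response body reports an error (hypothetical helper class)."""


def extract_local_results(data: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Return usable shop entries from a parsed response, or raise on a reported error."""
    # Assumption: failed searches carry a top-level "error" message.
    error = data.get("error")
    if error:
        raise SerpApiError(str(error))
    results = data.get("local_results", [])
    # Keep only entries that have a data_id, since reviews cannot be fetched without one.
    return [shop for shop in results if shop.get("data_id")]
```

You could call this on the `data` dictionary right after `response.json()`, so that a bad key fails loudly instead of producing an empty analysis.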

Scrape data from Google Maps Reviews

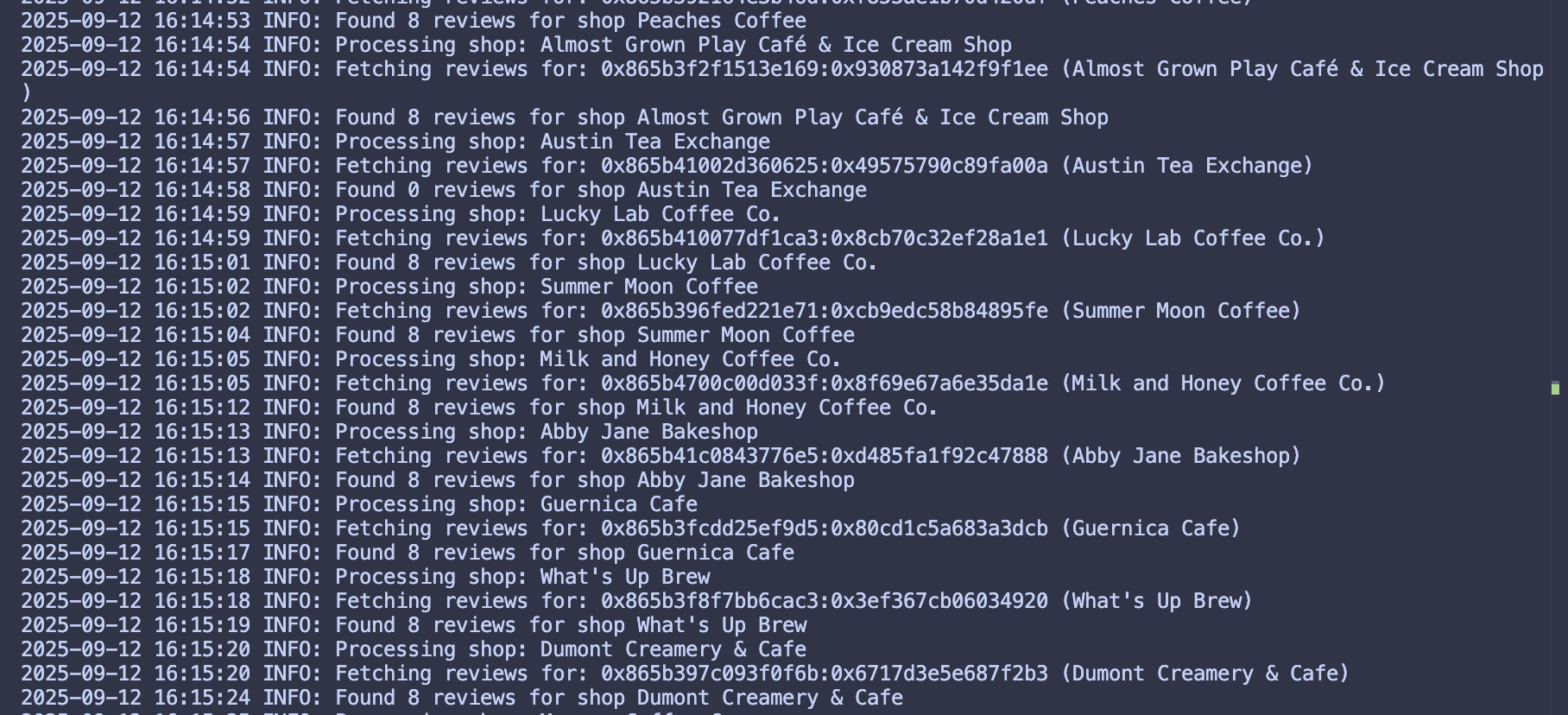

Each coffee shop result has a unique data_id, which we get from the previous API call. We can use this data_id to retrieve customer reviews.

    def fetch_reviews(data_id, shop_title):
        logger.info(f"Fetching reviews for: {data_id} ({shop_title})")
        review_params = {
            "api_key": os.getenv("SERPAPI_API_KEY"),
            "engine": "google_maps_reviews",
            "data_id": data_id,
            "hl": "en"
        }
        review_search = GoogleSearch(review_params)
        review_results = review_search.get_dict()
        reviews = review_results.get("reviews", [])
        logger.info(f"Found {len(reviews)} reviews for shop {shop_title}")
        time.sleep(1)
        return [
            {
                "review_text": review.get("snippet"),
                "review_star_rating": review.get("rating"),
                "timestamp": review.get("date")
            }
            for review in reviews
        ]

This function sends a request to SerpApi's Google Maps Reviews API for a single shop and returns its reviews as a list of dictionaries, which we then attach to that coffee shop's data.

In our example, 20 coffee shops were found in the area from the previous search, resulting in 20 requests for Google Maps Reviews. Check out your search history here.

Visit our Google Maps Reviews documentation for detailed information.
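Because every shop costs one Reviews request, re-running the script for the same area repeats all of those searches. Here is a minimal sketch of a file-based cache keyed by `data_id`, assuming you are fine with slightly stale reviews while experimenting. The `cached_reviews` helper and the cache directory are hypothetical additions, not part of the original script.

```python
import json
from pathlib import Path
from typing import Callable, Dict, List

Review = Dict[str, object]


def cached_reviews(
    data_id: str,
    fetch: Callable[[str], List[Review]],
    cache_dir: Path,
) -> List[Review]:
    """Return reviews for data_id, calling fetch only when no cached copy exists."""
    cache_dir.mkdir(parents=True, exist_ok=True)
    # A data_id may contain characters such as ":" that are awkward in file names,
    # so keep only alphanumerics for the cache file name.
    safe_name = "".join(ch for ch in data_id if ch.isalnum())
    cache_file = cache_dir / f"{safe_name}.json"
    if cache_file.exists():
        return json.loads(cache_file.read_text(encoding="utf-8"))
    reviews = fetch(data_id)
    cache_file.write_text(json.dumps(reviews), encoding="utf-8")
    return reviews
```

To plug in the function from this section, you could pass something like `lambda d: fetch_reviews(d, shop_title)` as `fetch`. Delete the cache directory whenever you want fresh reviews.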

Build Competitor Dataset

Finally, let's put everything together into a string format for the AI to analyze.
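The `main()` function later calls `build_competitor_data`, which is not shown in this article. Here is a minimal sketch of what it might look like, assuming it simply maps the Google Maps fields listed earlier onto the keys that `format_competitor_data` reads; the version in the repository may differ. The reviews fetcher is passed in as a second argument only so that the sketch runs on its own, whereas `main()` calls the function with a single argument.

```python
from typing import Any, Callable, Dict, List


def build_competitor_data(
    local_results: List[Dict[str, Any]],
    fetch_reviews: Callable[[str, str], List[Dict[str, Any]]],
) -> List[Dict[str, Any]]:
    """Combine each Google Maps listing with its reviews into one record per shop."""
    competitors = []
    for shop in local_results:
        data_id = shop.get("data_id")
        title = shop.get("title", "Unknown")
        competitors.append({
            "business_name": title,
            "address": shop.get("address"),
            "GPS_coordinates": shop.get("gps_coordinates"),
            "star_rating": shop.get("rating"),
            "review_count": shop.get("reviews"),
            "opening_hours": shop.get("operating_hours"),
            "price_level": shop.get("price"),
            # Skip the reviews request when a listing has no data_id.
            "customer_reviews": fetch_reviews(data_id, title) if data_id else [],
        })
    return competitors
```

Missing fields come through as `None`, so the formatted string will show `None` for shops without, say, a price level.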

    def format_competitor_data(competitors):
        competitor_data_str = ""
        for shop in competitors:
            competitor_data_str += f"\n- {shop['business_name']}\n - Address: {shop['address']}\n - GPS: {shop['GPS_coordinates']}\n - Star Rating: {shop['star_rating']}\n - Review Count: {shop['review_count']}\n - Opening Hours: {shop['opening_hours']}\n - Price Level: {shop['price_level']}\n - Customer Reviews:\n"
            for review in shop["customer_reviews"]:
                competitor_data_str += f" - \"{review['review_text']}\" | {review['review_star_rating']} stars | {review['timestamp']}\n"
        return competitor_data_str

Set up an AI agent

Now that we have the competitor data, let's build an AI agent to analyze it.

Design the AI prompt

LLMs perform best when provided with clear, structured instructions. Here's an example prompt.

def build_prompt(competitor_data_str):

return f"""

You are **{BUSINESS_TYPE} Success Forecaster**, an AI agent that predicts the potential success of a new {BUSINESS_TYPE.lower()} in a given location.

You will be fed structured data about competitor {BUSINESS_TYPE.lower()}s, including:

{competitor_data_str}

Your tasks are:

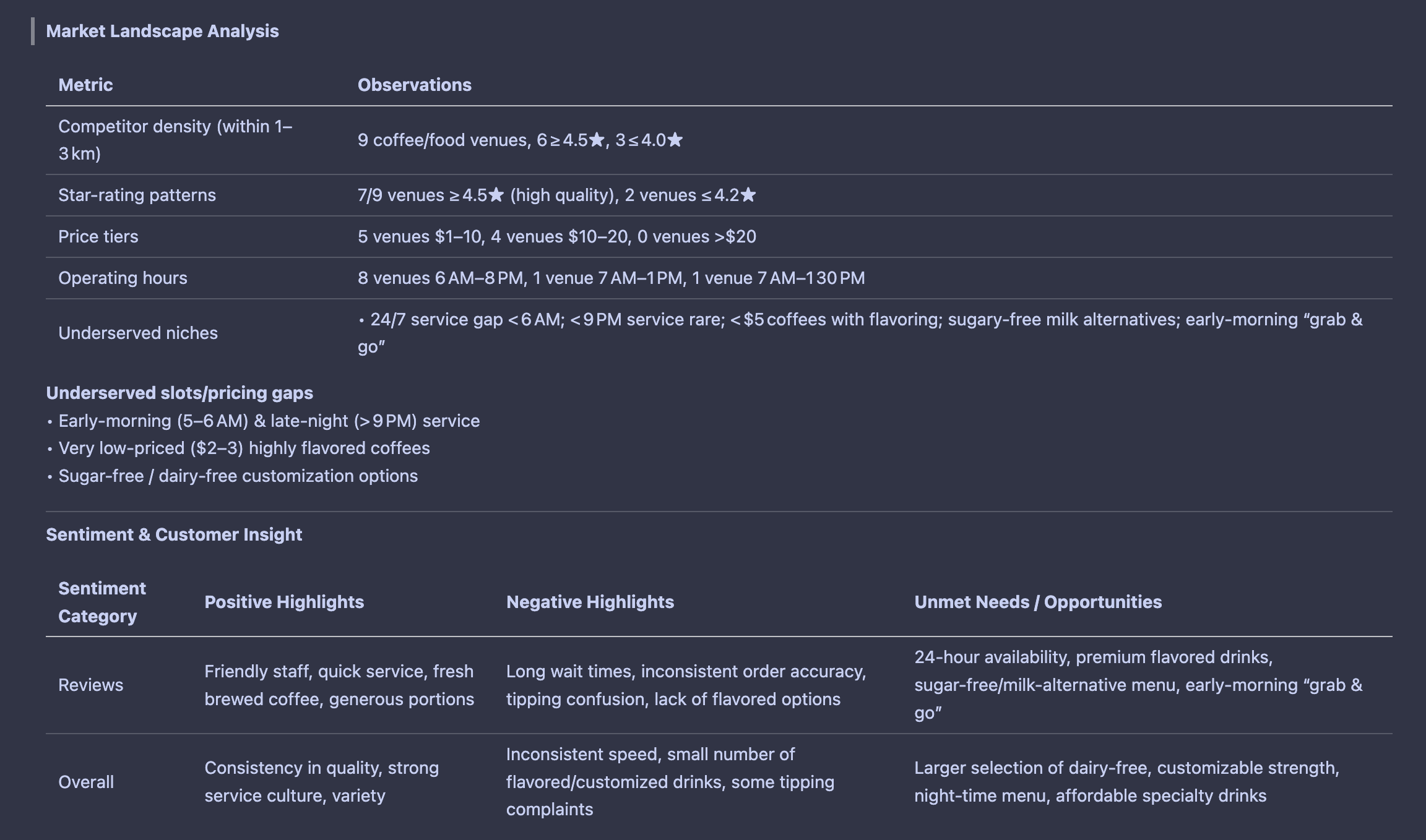

1. **Market Landscape Analysis**

- Summarize competitor density within 1–3 km.

- Identify star-rating patterns, price tiers, and operating hours.

- Highlight underserved niches (pricing gaps, late-night, early-morning).

- Output:

- A **2-column table** with | Metric | Observations |.

- A short **bullet list of underserved slots/pricing gaps**.

2. **Sentiment & Customer Insight**

- Perform sentiment analysis on reviews.

- Extract recurring positives, negatives, and unmet needs.

- Output:

| Sentiment Category | Positive Highlights | Negative Highlights | Unmet Needs / Opportunities |

|--------------------|---------------------|---------------------|-----------------------------|

- Conclude with exactly **3 key takeaways** in bullet points.

3. **Success Prediction**

- Estimate a **success probability score (0–100%)** in bold.

- Output:

- A **2-column table** with | Metric | Value |.

- A bullet list of the **Top 3 drivers of success/failure**.

4. **Recommendations**

- Output:

- A **2-column table** with | Differentiator | Rationale |.

- A **2-column Risks & Mitigations table**.

- End with an **Action Plan** that:

- Is written as **one concise paragraph (1–2 sentences, max 3 lines)**.

- Starts with: **Action plan:** (exact text, bolded).

- Specifies **location, operating hours, pricing, or promotions** directly tied to the analysis.

- Avoids bullet points, timelines (“next 2 weeks”), or generic advice.

⚠️ Rules for consistency:

- Always be clear, data-driven, and practical.

- Do not give generic answers; tailor insights directly to the provided data.

- Use tables where defined, and bullets only where instructed.

- Keep tone business-strategic, concise, and data-driven.

- Do not mix styles between sections.

"""

Notice how we use tables, bullet points, and structured outputs to make things clearer and easier to follow. You can experiment with different prompts to see what works best for you.
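Since the prompt asks for a fixed structure, it can be handy to check the model's response before saving it. Here is a minimal sketch, assuming the model echoes the four section titles from the prompt and writes the score in bold (for example `**72%**`). The `find_report_problems` helper is hypothetical, and a model that rephrases a title would be flagged even if its report is otherwise fine.

```python
import re
from typing import List

# Section titles copied from the prompt above.
REQUIRED_SECTIONS = [
    "Market Landscape Analysis",
    "Sentiment & Customer Insight",
    "Success Prediction",
    "Recommendations",
]


def find_report_problems(report: str) -> List[str]:
    """Return a list of structural problems found in the Markdown report."""
    problems = []
    lowered = report.lower()
    for section in REQUIRED_SECTIONS:
        if section.lower() not in lowered:
            problems.append(f"missing section: {section}")
    # The prompt requires this exact bolded text.
    if "**Action plan:**" not in report:
        problems.append("missing '**Action plan:**' paragraph")
    # Look for a percentage inside a bold span, e.g. **72%** or **Score: 72%**.
    if not re.search(r"\*\*[^*\n]*\b\d{1,3}\s?%[^*\n]*\*\*", report):
        problems.append("missing bolded success probability")
    return problems
```

An empty list means the response has the expected shape; otherwise you might log the problems or retry the request.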

Run the AI Agent

def main():

load_dotenv()

api_key = os.getenv("SERPAPI_API_KEY")

while True:

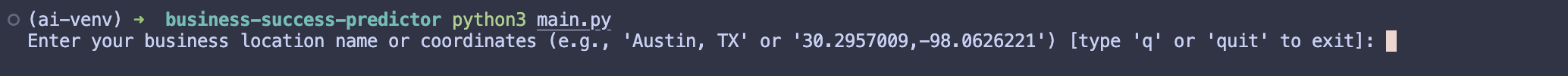

user_input = input("Enter your business location name or coordinates (e.g., 'Austin, TX' or '30.2957009,-98.0626221') [type 'q' or 'quit' to exit]: ").strip()

if user_input.lower() in ["q", "quit"]:

print("Exiting program.")

return

if not user_input:

print("[ERROR] Input cannot be empty. Please enter a valid location name or coordinates.")

continue

if not isinstance(user_input, str):

print("[ERROR] Input must be a string.")

continue

break

global now

now = lambda: datetime.now().strftime('%Y-%m-%d %H:%M:%S')

search_params = get_search_params(user_input, api_key)

local_results = fetch_shops_details(search_params)

competitors = build_competitor_data(local_results)

competitor_data_str = format_competitor_data(competitors)

prompt = build_prompt(competitor_data_str)

client = OpenAI(

base_url="http://localhost:11434/v1", # Local Ollama API

api_key="ollama" # Dummy key

)

response = client.chat.completions.create(

model="gpt-oss:20b",

messages=[

{"role": "user", "content": prompt}

]

)

ai_output = response.choices[0].message.content

logger.debug(f"AI response: {ai_output[:500]}")

# Save AI output to markdown file with timestamp

md_filename = f"ai_response_{datetime.now().strftime('%Y%m%d_%H%M%S')}.md"

with open(md_filename, "w", encoding="utf-8") as md_file:

md_file.write(ai_output)

print(ai_output)

if __name__ == "__main__":

main()When you run this script, the AI will output a market analysis, sentiment breakdown, prediction, score, and action plan in Markdown format.

The full source code is available in our GitHub repository
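The `main()` function above always talks to a local Ollama server, while the prerequisites also mention using an OpenAI API key. Here is a minimal sketch of how you might choose between the two from environment variables. The `llm_settings` helper and the `LLM_MODEL` variable are hypothetical names introduced for illustration, so you would need to set `LLM_MODEL` to a model your account can actually use.

```python
import os
from typing import Dict, Mapping


def llm_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Pick connection settings for the OpenAI client (hypothetical helper)."""
    api_key = env.get("OPENAI_API_KEY")
    if api_key:
        # LLM_MODEL is a hypothetical variable name; no default is guessed here.
        model = env.get("LLM_MODEL")
        if not model:
            raise ValueError("Set LLM_MODEL when using the hosted OpenAI API.")
        return {"api_key": api_key, "model": model}
    # No OpenAI key: fall back to the local Ollama endpoint used in main().
    return {
        "base_url": "http://localhost:11434/v1",
        "api_key": "ollama",
        "model": env.get("LLM_MODEL", "gpt-oss:20b"),
    }
```

In `main()`, you could then pop `model` from the returned dictionary, pass the remaining entries to `OpenAI(...)` as keyword arguments, and use the model name in `client.chat.completions.create(...)`.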

What's next?

While this prototype focuses on coffee shops, the same approach could easily apply to other businesses as well, such as:

- Restaurants

- Gyms

- Salons

- Co-working spaces

Some possible improvements that you can make:

- Add charts & visualizations (e.g., competitor density heatmaps)

- Deploy as a Flask/FastAPI web app so anyone can test it in their city.

- Turn into a dashboard tool for entrepreneurs scouting opportunities.

Conclusion

By combining real-time Google Maps data with AI analysis, we can quickly gain insights into competition, customer sentiment, and market gaps.

Of course, no AI can perfectly predict business success. However, tools like this can provide entrepreneurs with a faster and more data-driven way to explore opportunities.

Note:

If you'd like to explore the Google Maps API and Google Maps Reviews API for other use cases, be sure to check out our in-depth tutorial below.