After hearing so many great things about DeepSeek, the new competitor to OpenAI, I finally got the chance to try out their API. In this blog post, I'd like to explore the different capabilities of the DeepSeek API.

At the time of writing, the API is not very stable yet: responses can take a long time, some features are still in beta, and it may return unexpected results.

Getting started with the DeepSeek API

How do you get a DeepSeek API key? You can register for free at https://platform.deepseek.com/. However, you need to top up your balance before you can use the API.

Note: DeepSeek is currently under very high demand, so you might get a "503 Service Temporarily Unavailable" error when you try it.

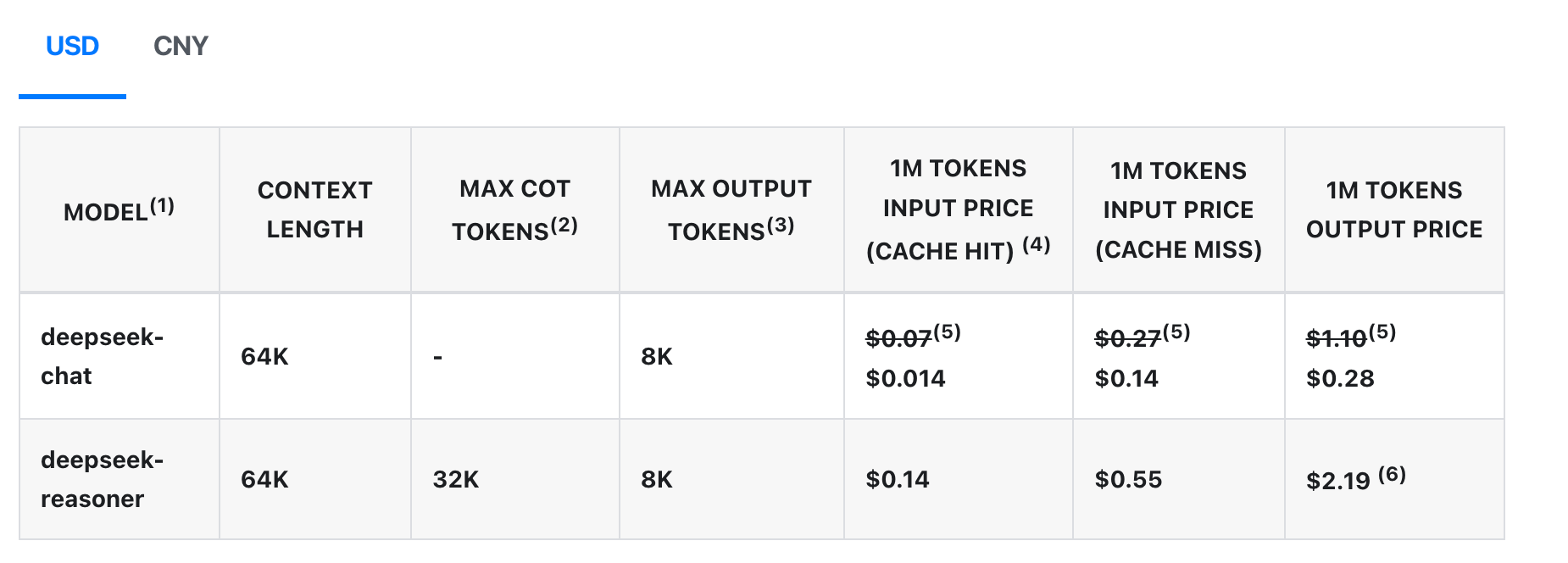
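If you keep hitting the 503 error mentioned above, it can help to retry with a growing delay instead of re-sending immediately. Below is a minimal, library-agnostic sketch of exponential backoff. `call_with_retry` and `is_503` are my own hypothetical names and are not part of the DeepSeek or OpenAI SDKs. The sketch assumes the error you want to retry exposes a `status_code` attribute, as the OpenAI Python SDK's `APIStatusError` does. Note that the OpenAI Python client already retries some failures on its own (see its `max_retries` option), so treat this as an extra layer.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_retry(
    fn: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying with exponential backoff while is_retryable(error) is True.

    Hypothetical helper, not part of any SDK.
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception as error:
            # Re-raise at once for other errors, or when we are out of attempts.
            if not is_retryable(error) or attempt == max_attempts - 1:
                raise
            # Wait 1s, 2s, 4s, ... (with the default base_delay) before retrying.
            sleep(base_delay * (2 ** attempt))
    raise ValueError("max_attempts must be at least 1")


def is_503(error: Exception) -> bool:
    """Assumes the exception carries an HTTP status_code, like openai.APIStatusError."""
    return getattr(error, "status_code", None) == 503
```

With the OpenAI client used in the examples below, a call could be wrapped as `call_with_retry(lambda: client.chat.completions.create(model="deepseek-chat", messages=messages), is_503)`.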

Here is the current pricing and models available:

deepseek-chat vs deepseek-reasoner models

Currently, DeepSeek offers two models. For basic chat completion, we can use deepseek-chat, which currently runs DeepSeek-V3. For tasks that need deeper reasoning, we can use deepseek-reasoner, which currently runs DeepSeek-R1.

The deepseek-reasoner model will generate a Chain of Thought (CoT) first to enhance the accuracy of its response.

Basic Chat Completion

The DeepSeek API uses a format compatible with OpenAI's, so when using Python or NodeJS we can install the OpenAI SDK and point it at DeepSeek's base URL.

Here is what a basic chat completion call looks like in NodeJS:

```javascript
// Install OpenAI SDK first: `npm install openai`
// (use a .mjs file or "type": "module" in package.json for the import syntax)
import OpenAI from "openai";

const openai = new OpenAI({
  baseURL: "https://api.deepseek.com",
  apiKey: "<DeepSeek API Key>",
});

async function main() {
  const completion = await openai.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: "Tell me about DeepSeek." },
    ],
    model: "deepseek-chat",
  });

  console.log(completion.choices[0].message.content);
}

main();
```

Python sample:

```python
# Install OpenAI SDK first: `pip3 install openai`
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

response = client.chat.completions.create(
    model="deepseek-chat",
    messages=[
        {"role": "system", "content": "You are a helpful assistant"},
        {"role": "user", "content": "Tell me about DeepSeek"},
    ],
    stream=False,
)

print(response.choices[0].message.content)
```

cURL sample:

```shell
curl https://api.deepseek.com/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer <DeepSeek API Key>" \
  -d '{
        "model": "deepseek-chat",
        "messages": [
          {"role": "system", "content": "You are a helpful assistant."},
          {"role": "user", "content": "Tell me about DeepSeek!"}
        ],
        "stream": false
      }'
```

Chat Prefix Completion (Beta)

We can give the assistant the beginning of its reply as a prefix and let the model complete the rest. This lets us control both the beginning of the response (with the prefix) and where it ends (with a stop sequence).

- The last message in the `messages` list must have the role `assistant`.
- Set the `prefix` parameter of that assistant message to `True`.
- Since the feature is still in beta, set `base_url="https://api.deepseek.com/beta"`.
- Optionally, add the `stop` parameter to control where the completion ends.

Code Example:

from openai import OpenAI

client = OpenAI(

api_key="<your api key>",

base_url="https://api.deepseek.com/beta",

)

messages = [

{"role": "user", "content": "Please write a code for binary search"},

{"role": "assistant", "content": "```python\n", "prefix": True}

]

response = client.chat.completions.create(

model="deepseek-chat",

messages=messages,

stop=["```"],

)

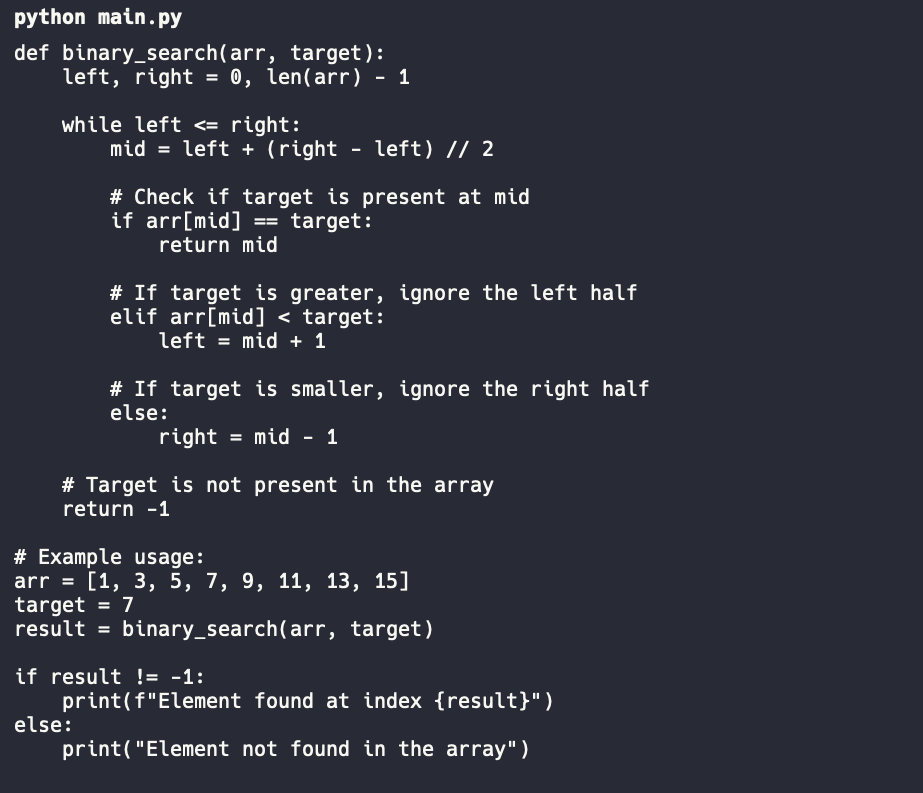

print(response.choices[0].message.content)Here is the result. There is only code, nothing else.
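The rules in the list above are easy to get wrong, so it can help to build and check the request in one place. This is a minimal sketch under my own assumptions. `build_prefix_messages` and `check_prefix_request` are hypothetical helpers, and the checks only mirror the rules listed in this post, not DeepSeek's full server-side validation.

```python
BETA_BASE_URL = "https://api.deepseek.com/beta"


def build_prefix_messages(user_prompt: str, assistant_prefix: str) -> list:
    """Return a messages list that ends with an assistant prefix (hypothetical helper)."""
    return [
        {"role": "user", "content": user_prompt},
        # The last message must be the assistant's, flagged with prefix=True.
        {"role": "assistant", "content": assistant_prefix, "prefix": True},
    ]


def check_prefix_request(messages: list, base_url: str) -> None:
    """Raise ValueError if the request breaks the chat prefix completion rules."""
    if not base_url.rstrip("/").endswith("/beta"):
        raise ValueError("chat prefix completion needs the /beta base URL")
    if not messages:
        raise ValueError("messages must not be empty")
    last = messages[-1]
    if last.get("role") != "assistant" or last.get("prefix") is not True:
        raise ValueError("the last message must be an assistant message with prefix=True")
```

Once the check passes, the list can be passed as `messages=` to `client.chat.completions.create`, together with a `stop` list, exactly as in the example above.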

FIM Completion (Beta)

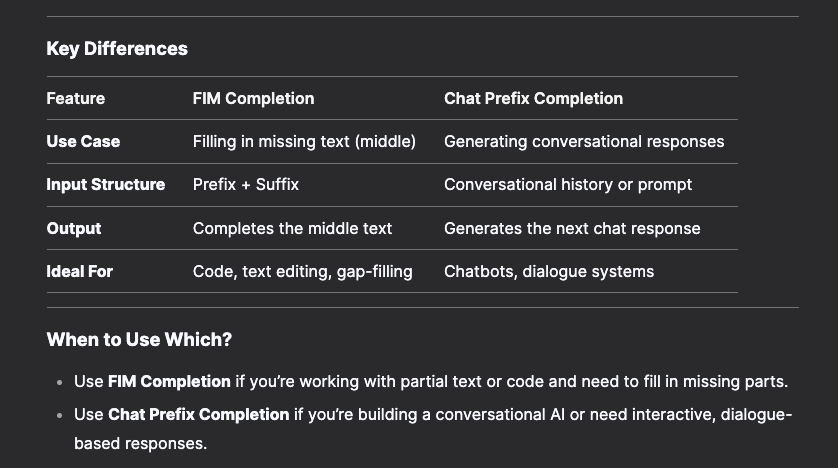

DeepSeek also offers Fill-In-the-Middle (FIM) completion, where we provide a prefix and a suffix and the model generates what goes in between. The prefix goes in the `prompt` parameter and the suffix in the `suffix` parameter.

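```python
from openai import OpenAI

# FIM is a beta feature, so the client points at the /beta base URL
client = OpenAI(api_key="<your api key>", base_url="https://api.deepseek.com/beta")

response = client.completions.create(
    model="deepseek-chat",
    prompt="def fib(a):",
    suffix="    return fib(a-1) + fib(a-2)",
    max_tokens=128,
)

print(response.choices[0].text)
```

I'm not really sure how it differs from the chat prefix feature, except that it's simpler to use. So, why don't we ask DeepSeek itself? Here is the answer: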

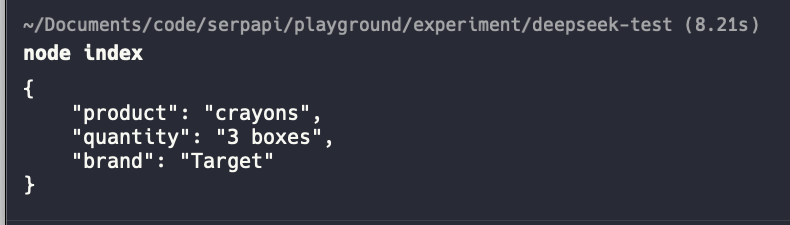
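One practical difference is what comes back. As far as I can tell, FIM returns only the missing middle in `response.choices[0].text`, so you have to put the snippet back together yourself. Here is a small sketch of that bookkeeping. `fim_request` and `assemble_fim` are hypothetical helpers, and the "middle" in the usage lines is hand-written, not real model output.

```python
from typing import Any, Dict


def fim_request(prefix: str, suffix: str, max_tokens: int = 128) -> Dict[str, Any]:
    """Keyword arguments for client.completions.create() (hypothetical helper)."""
    return {
        "model": "deepseek-chat",
        "prompt": prefix,  # code before the gap
        "suffix": suffix,  # code after the gap
        "max_tokens": max_tokens,
    }


def assemble_fim(prefix: str, middle: str, suffix: str) -> str:
    """Rebuild the full snippet: prefix + generated middle + suffix."""
    return prefix + middle + suffix


# Usage with a hand-written "middle" standing in for response.choices[0].text:
request = fim_request("def fib(a):", "    return fib(a-1) + fib(a-2)")
middle = "\n    if a <= 1:\n        return a\n"
code = assemble_fim(request["prompt"], middle, request["suffix"])
print(code)
```

With a real client, the request would be sent as `client.completions.create(**request)`.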

JSON Output

This is one of the most important features of AI models. Whenever we need to extract specific information from a raw query, we can use the JSON Output feature to turn the raw string into structured JSON, which we can then use to call other functions or APIs.

Rules:

- Include the word `json` in the system prompt.
- Set the `response_format` parameter to `{'type': 'json_object'}`.
- Optional: set the `max_tokens` parameter to prevent the JSON string from being truncated.

I'm going to use NodeJS this time for the example:

```javascript
import OpenAI from "openai";

const client = new OpenAI({
  baseURL: "https://api.deepseek.com",
  apiKey: "YOUR_API_KEY",
});

async function main() {
  const system_prompt = `
The user will provide some sentence. Please parse the "product", "quantity", "brand" and output them in JSON format.

EXAMPLE INPUT:
I need to buy 2kg of potatoes from Walmart.

EXAMPLE JSON OUTPUT:
{
  "product": "potatoes",
  "quantity": "2kg",
  "brand": "Walmart"
}
`;

  const user_prompt = `My son needs 3 boxes of crayons from Target.`;

  const messages = [
    { role: "system", content: system_prompt },
    { role: "user", content: user_prompt },
  ];

  const response = await client.chat.completions.create({
    model: "deepseek-chat",
    messages: messages,
    response_format: {
      type: "json_object",
    },
  });

  console.log(response.choices[0].message.content);
}

main();
```

Ensure the base URL is not /beta. Here is the result:

When using the JSON Output feature, the API may occasionally return empty content. Adjusting the prompt, for example by adding more instructions or examples, can help reduce how often this happens.
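In Python, the JSON Output rules and a simple guard against empty replies could be wrapped like this. It's a sketch under my own assumptions. The helper names, the `max_tokens` default of 1024 and the three-attempt retry policy are hypothetical choices. `send` stands for whatever function actually calls the API, for example `lambda kwargs: client.chat.completions.create(**kwargs).choices[0].message.content`.

```python
import json
from typing import Any, Callable, Dict, Optional


def build_json_request(system_prompt: str, user_prompt: str,
                       max_tokens: int = 1024) -> Dict[str, Any]:
    """Keyword arguments for client.chat.completions.create() with JSON Output on."""
    # Rule 1: the word "json" must appear in the system prompt.
    if "json" not in system_prompt.lower():
        raise ValueError('the system prompt must mention "json"')
    return {
        "model": "deepseek-chat",
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "response_format": {"type": "json_object"},  # Rule 2
        "max_tokens": max_tokens,  # Rule 3 (optional): leave room for the full JSON
    }


def parse_json_output(content: Optional[str]) -> Optional[Dict[str, Any]]:
    """Parse the model's reply; return None when the API sent back empty content."""
    if content is None or not content.strip():
        return None
    return json.loads(content)


def get_json(send: Callable[[Dict[str, Any]], Optional[str]],
             request: Dict[str, Any], max_attempts: int = 3) -> Dict[str, Any]:
    """Re-send the request until the reply is non-empty (hypothetical retry policy)."""
    for _ in range(max_attempts):
        parsed = parse_json_output(send(request))
        if parsed is not None:
            return parsed
    raise RuntimeError(f"still empty after {max_attempts} attempts")
```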

Take a look at this use case on how to provide real-time data to the DeepSeek API using JSON Output and SerpApi.

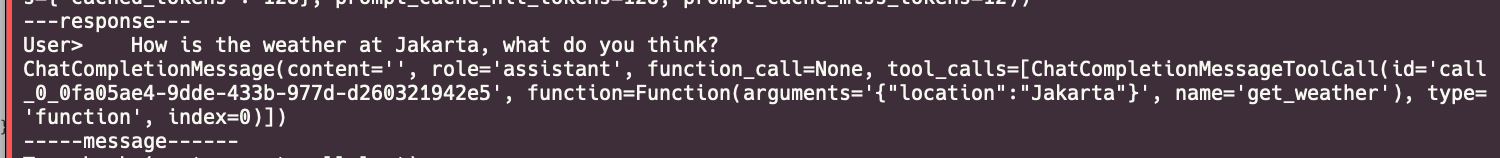

Function Calling

Like other AI APIs, DeepSeek supports function calling, which lets the model ask your code to call external functions so you can perform actions beyond chat completion. The function can be a custom one you wrote or a wrapper around any API.

Disclaimer: Currently, this feature is not stable, which may result in looped calls or empty responses.

I tried to replicate the code from the documentation without luck. I keep getting an error, so I guess we have to wait until the next version.

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")


def send_messages(messages):
    response = client.chat.completions.create(
        model="deepseek-chat",
        messages=messages,
        tools=tools,
    )
    return response.choices[0].message


tools = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get weather of a location, the user should supply a location first",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g. San Francisco, CA",
                    }
                },
                "required": ["location"],
            },
        },
    },
]

messages = [{"role": "user", "content": "How is the weather at Jakarta, what do you think?"}]
message = send_messages(messages)
print(f"User>\t {messages[0]['content']}")
print(message)

# The model asks us to call get_weather; here we answer with a hard-coded result
tool = message.tool_calls[0]
messages.append(message)
messages.append({"role": "tool", "tool_call_id": tool.id, "content": "24℃"})
message = send_messages(messages)
print(f"Model>\t {message.content}")
```

Result before the error was returned:

The first API call correctly returns the function name and the location. Unfortunately, the second call hasn't returned successfully yet.
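Once the second call works, the hard-coded `"24℃"` above would normally come from actually running the function the model asked for. Here is a minimal sketch of that dispatch step. It assumes each tool call exposes `id`, `function.name` and `function.arguments` (a JSON string), which is how the OpenAI Python SDK represents them. `get_weather` and `TOOL_REGISTRY` are hypothetical placeholders.

```python
import json
from typing import Any, Callable, Dict, List


def get_weather(location: str) -> str:
    """Hypothetical stand-in; a real version would query a weather service."""
    return f"24℃ in {location}"


# Map each function name declared in `tools` to a local Python callable.
TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_calls(tool_calls) -> List[Dict[str, str]]:
    """Run every requested tool and build the matching role="tool" messages."""
    tool_messages = []
    for call in tool_calls:
        func = TOOL_REGISTRY[call.function.name]
        # The model sends its arguments as a JSON-encoded string.
        kwargs = json.loads(call.function.arguments or "{}")
        tool_messages.append({
            "role": "tool",
            "tool_call_id": call.id,
            "content": str(func(**kwargs)),
        })
    return tool_messages
```

In the code above, the hard-coded `messages.append({"role": "tool", ...})` line would then become `messages.extend(run_tool_calls(message.tool_calls))`.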

Tips (Alternative):

Based on my experience with different AI APIs, you can also use the JSON Output feature to achieve something similar: extract the "parameters" you need from the user's message, then use them to call another function yourself.
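Here is a minimal sketch of this alternative. It assumes a JSON Output request whose system prompt asks the model to reply with the arguments as JSON, for example `{"location": "Jakarta"}`. `EXTRACTION_PROMPT`, `get_weather` and `call_with_extracted_params` are hypothetical names. The required-key check mirrors the `"required"` list in the tool schema above.

```python
import json
from typing import Any, Callable, Iterable

# Hypothetical system prompt for a JSON Output request (it must mention "json").
EXTRACTION_PROMPT = (
    "Extract the city the user asks about and reply in JSON, "
    'for example: {"location": "Jakarta"}'
)


def get_weather(location: str) -> str:
    """Hypothetical function that the extracted parameters will drive."""
    return f"Weather report for {location}"


def call_with_extracted_params(content: str, func: Callable[..., Any],
                               required: Iterable[str]) -> Any:
    """Parse the model's JSON reply and call func with it as keyword arguments."""
    params = json.loads(content)
    missing = [name for name in required if name not in params]
    if missing:
        # The model may still omit a field, so check before calling.
        raise ValueError(f"model reply is missing: {', '.join(missing)}")
    return func(**params)
```

Here `content` would be `response.choices[0].message.content` from a request made with `response_format={"type": "json_object"}` and `EXTRACTION_PROMPT` as the system message.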

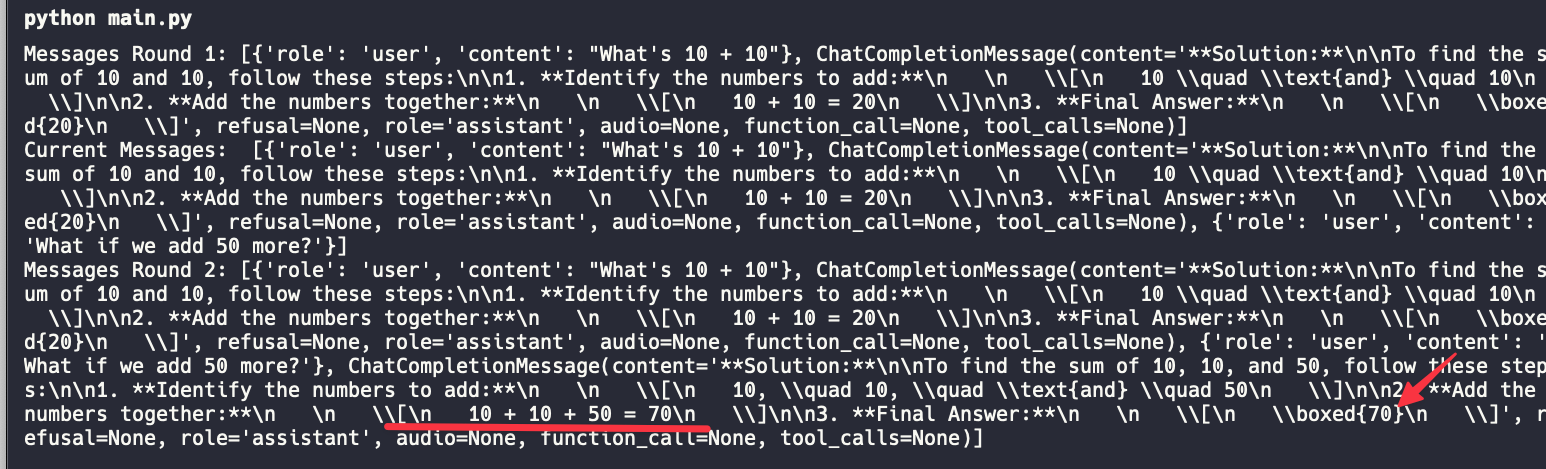

Multi-round Conversation (AI Assistant) with DeepSeek

The chat completion API is stateless: it doesn't remember anything between separate requests. Therefore, we need to send the previous conversation along with each new request to give the model context.

Here is the code I tried:

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "What's 10 + 10"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages,
)
messages.append(response.choices[0].message)
print(f"Messages Round 1: {messages}")

# Round 2: send the whole history plus the new question
messages.append({"role": "user", "content": "What if we add 50 more?"})
print("Current Messages: ", messages)
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages,
)
messages.append(response.choices[0].message)
print(f"Messages Round 2: {messages}")
```

So, basically, it calls `chat.completions.create` twice. On the second call, we include the question and the answer from the first round as context.

As you can see in the log, it understands that I want to add 50 to the previous result.
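To avoid juggling the list by hand, the history can be wrapped in a small class. This is a sketch under my own assumptions. `Conversation` is a hypothetical helper, and `send` is any function that takes the message list and returns the reply text. One example is `lambda msgs: client.chat.completions.create(model="deepseek-chat", messages=msgs).choices[0].message.content`. Storing each reply as a plain dict also keeps the history easy to print or save as JSON.

```python
from typing import Callable, Dict, List, Optional


class Conversation:
    """Keeps the full message history, because the chat API itself is stateless."""

    def __init__(self, send: Callable[[List[Dict[str, str]]], str],
                 system_prompt: Optional[str] = None):
        self.send = send
        self.messages: List[Dict[str, str]] = []
        if system_prompt:
            self.messages.append({"role": "system", "content": system_prompt})

    def ask(self, text: str) -> str:
        # 1. Add the new user turn to the history.
        self.messages.append({"role": "user", "content": text})
        # 2. Send the whole history so the model sees the earlier rounds.
        reply = self.send(self.messages)
        # 3. Store the answer so the next round can build on it.
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```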

Learn more about multi-round conversations with the DeepSeek API.

Reasoning API

As mentioned, DeepSeek offers a separate model with stronger reasoning capabilities called deepseek-reasoner. The response can be returned either as a stream or all at once. Let's try it.

```python
from openai import OpenAI

client = OpenAI(api_key="<DeepSeek API Key>", base_url="https://api.deepseek.com")

messages = [{"role": "user", "content": "9.11 and 9.8, which is greater?"}]
response = client.chat.completions.create(
    model="deepseek-reasoner",
    messages=messages,
)

reasoning_content = response.choices[0].message.reasoning_content  # the chain of thought
content = response.choices[0].message.content  # the final answer
print(reasoning_content)
print(content)
```

Unfortunately, as with function calling, I haven't been able to run this feature successfully yet. I'll try it again another day and share the result.
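Here is how I understand DeepSeek's reasoning-model guide. With `stream=True`, the chain of thought arrives in `delta.reasoning_content` and the final answer in `delta.content`. The guide also says that `reasoning_content` should not be sent back in the next round's messages. The sketch below collects a stream and builds the follow-up history under those assumptions. `collect_stream` and `next_round_messages` are hypothetical helpers, and I couldn't verify them against the live API.

```python
from typing import Any, Dict, Iterable, List, Tuple


def collect_stream(chunks: Iterable[Any]) -> Tuple[str, str]:
    """Split a streamed deepseek-reasoner response into (reasoning, answer)."""
    reasoning_parts: List[str] = []
    answer_parts: List[str] = []
    for chunk in chunks:
        if not chunk.choices:  # e.g. a trailing usage-only chunk
            continue
        delta = chunk.choices[0].delta
        # Chain-of-thought tokens arrive in reasoning_content ...
        if getattr(delta, "reasoning_content", None):
            reasoning_parts.append(delta.reasoning_content)
        # ... and the final answer arrives in content.
        if getattr(delta, "content", None):
            answer_parts.append(delta.content)
    return "".join(reasoning_parts), "".join(answer_parts)


def next_round_messages(history: List[Dict[str, str]], answer: str,
                        follow_up: str) -> List[Dict[str, str]]:
    """Append only the final answer (no reasoning_content) plus the next question."""
    return history + [
        {"role": "assistant", "content": answer},
        {"role": "user", "content": follow_up},
    ]
```

The stream itself would come from `client.chat.completions.create(model="deepseek-reasoner", messages=messages, stream=True)`.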

Frequently Asked Questions

How do we calculate the token usage?

Here is a rough estimate we can use:

- 1 English character ≈ 0.3 token.

- 1 Chinese character ≈ 0.6 token.

However, different models may use different tokenizers, so actual counts vary. DeepSeek provides a demo tokenizer, which we can download from this page: https://api-docs.deepseek.com/quick_start/token_usage
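The two ratios above can be turned into a quick estimator. This is only a back-of-the-envelope sketch. It makes two simplifying assumptions of my own: every non-Chinese character (including spaces and punctuation) counts as "English", and Chinese is detected through the main CJK Unified Ideographs block (U+4E00 to U+9FFF). Use DeepSeek's tokenizer when you need real counts.

```python
def estimate_tokens(text: str) -> float:
    """Rough token estimate: ~0.3 per English character, ~0.6 per Chinese character."""
    # Count characters in the CJK Unified Ideographs block as Chinese.
    chinese = sum(1 for ch in text if "\u4e00" <= ch <= "\u9fff")
    # Assumption: every other character costs about as much as an English one.
    other = len(text) - chinese
    return other * 0.3 + chinese * 0.6
```

For example, `estimate_tokens("hello")` gives about 1.5 tokens and `estimate_tokens("你好")` about 1.2.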

What's the API rate limit?

Currently, there's no rate limit, but because demand on the API is high, expect very slow responses from time to time.

Is DeepSeek API free?

It's not. You need to top up your balance before you start using the API.

I hope this helps!