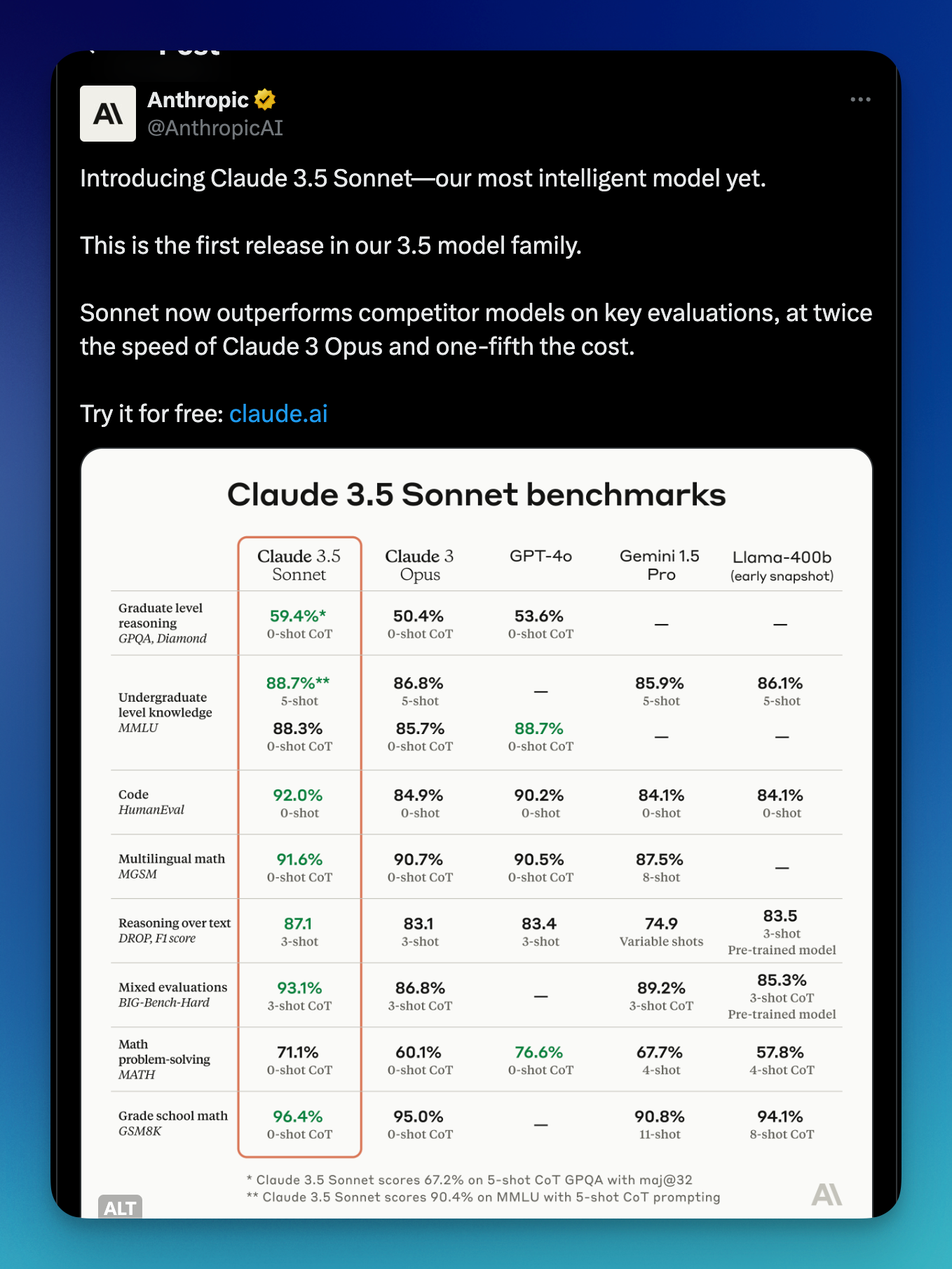

Anthropic has announced its latest model, Claude 3.5 Sonnet. Anthropic claims it offers a better balance of speed and performance than other API models, including OpenAI's GPT-4o.

I hadn't tried the Claude API before, so this seems like a good time to do so! In this blog post, we'll explore how to connect Claude to the internet by enabling "tool use" (also known as function calling) to call custom functions.

Preparing to use the Claude API

If you have used the Claude API before, feel free to skip this step. You can also find more detailed information on Anthropic's getting started page. I'm going to use Python, but feel free to use JavaScript, or send a plain HTTP POST request from any other language.
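The SDK is a thin wrapper over an HTTP API, so here's a minimal sketch of the same request built with only Python's standard library. It shows what you would send from another language. It assumes the Messages API endpoint https://api.anthropic.com/v1/messages and the anthropic-version header value 2023-06-01 from Anthropic's API reference. The helper names build_messages_request and send_messages_request are hypothetical.

```python
import json
import urllib.request

# Messages API endpoint (per Anthropic's API reference; check it before relying on it)
API_URL = "https://api.anthropic.com/v1/messages"


def build_messages_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build, but don't send, a POST request equivalent to the basic SDK call."""
    body = {
        "model": "claude-3-5-sonnet-20240620",
        "max_tokens": 1000,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,               # your Anthropic API key
            "anthropic-version": "2023-06-01",  # API version header
            "content-type": "application/json",
        },
        method="POST",
    )


def send_messages_request(request: urllib.request.Request) -> dict:
    """Send the request and return the decoded JSON response (needs network access)."""
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())
```

For example, `send_messages_request(build_messages_request("Hello AI, what's up!", os.environ["ANTHROPIC_API_KEY"]))` should return a dict whose `"content"` list holds Claude's reply.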

Create a virtual env

```bash
python -m venv claude-env
```

Activate virtual env

On macOS/Linux:

```bash
source claude-env/bin/activate
```

On Windows:

```
claude-env\Scripts\activate
```

Install Python SDK

```bash
pip install anthropic
```

Set up your API key

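Make sure to sign up at anthropic.com and get your API key. You can then set it as an environment variable on macOS/Linux:

```bash
export ANTHROPIC_API_KEY='your-api-key-here'
```

On Windows:

```
setx ANTHROPIC_API_KEY "your-api-key-here"
```

Note that setx only takes effect in newly opened terminal windows.

Basic implementation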

Here is the basic implementation to ensure everything has been set up correctly.

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1000,
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": "Hello AI, what's up!"
                }
            ]
        }
    ]
)

print(message.content)
```

Introduce function calling

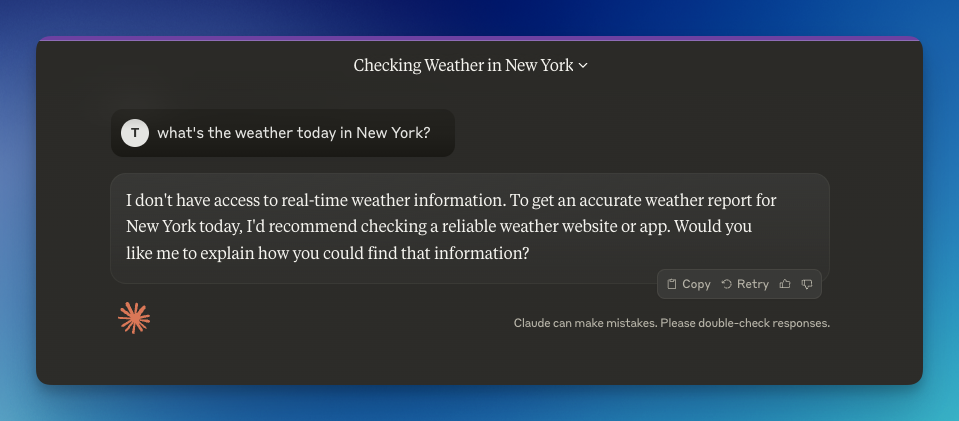

Every AI model has a knowledge cutoff date, which is why it can't access real-time data on its own. Here's Claude's response when we ask about it:

To work around this limitation, we can use Claude's "tool use" (function calling) feature. With it, Claude can ask our code to run a custom function, whether that function calls an external API or is just a simple function we built ourselves.

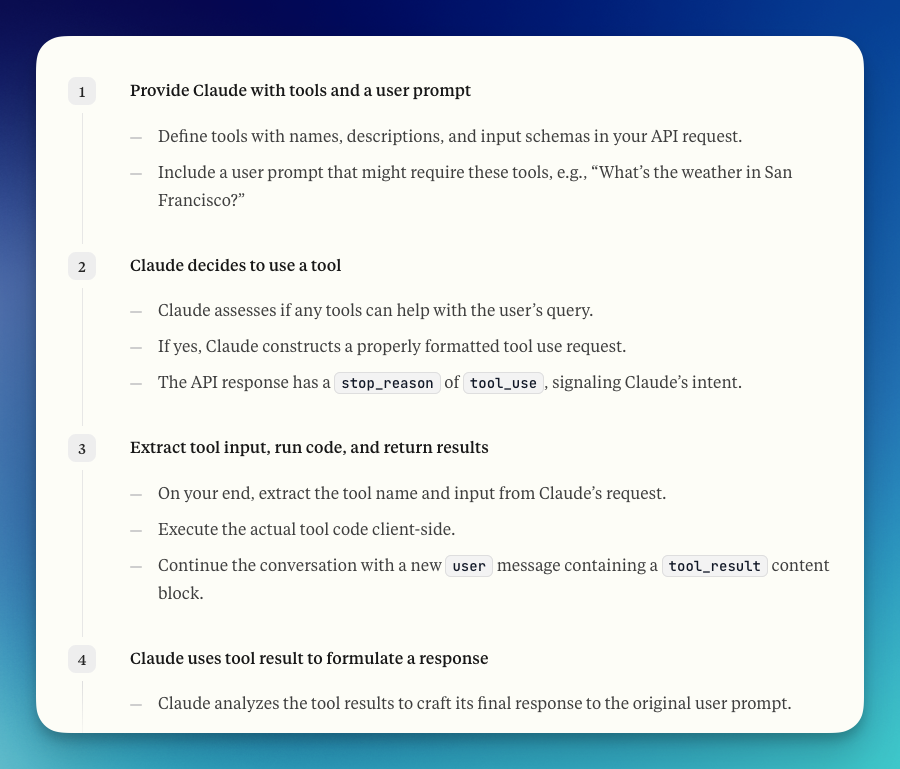

Function calling flow

Here's what the flow looks like:

Function calling simple demo

Let's see a basic implementation of function calling.

All code samples in this blog post are available at: https://github.com/hilmanski/claude-ai-function-calling-python

Step 1: Prepare a custom function

Here is a custom function we can implement. We create a score checker function that determines whether the student passes or fails.

```python
def score_checker(score):
    if score > 0.5:
        return "Pass"
    else:
        return "Fail"
```

Step 2: Call AI using the tools option

Now let's call Claude with tool use enabled. The tools parameter is a list of tool definitions, each with a name, a description, and an input_schema describing the function's parameters. In this example, I only define one function.

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1000,
    tools=[
        {
            "name": "score_checker",
            "description": "A function that takes the provided score and returns the result.",
            "input_schema": {
                "type": "object",
                "properties": {
                    "score": {
                        "type": "string"
                    }
                },
                "required": ["score"]
            }
        }
    ],
    messages=[
        {
            "role": "user",
            "content": "I got the result. My score is 0.6"
        }
    ]
)

print(message)
```

Notes:

It's important to define input_schema properly: declare every parameter you want Claude to extract from the user's prompt, so that we can pass those values to the function when we call it.
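As a sketch of a more explicit schema, the variant below declares score as a number and adds a description to the field. Both changes are my own assumptions, not something the example above requires. If you use it, the score arrives as a number, and the float() conversion in Step 3 still works. The helper missing_required_params is hypothetical. It checks a tool_use input against the schema's required list before you call your function.

```python
# Hypothetical, more descriptive variant of the score_checker tool definition.
SCORE_CHECKER_TOOL = {
    "name": "score_checker",
    "description": "Checks whether a student's exam score is a pass or a fail.",
    "input_schema": {
        "type": "object",
        "properties": {
            "score": {
                "type": "number",  # assumption: ask for a number, not a string
                "description": "The exam score mentioned by the user, e.g. 0.6",
            }
        },
        "required": ["score"],
    },
}


def missing_required_params(tool: dict, tool_input: dict) -> list:
    """Return the names of required parameters absent from a tool_use input."""
    required = tool["input_schema"].get("required", [])
    return [name for name in required if name not in tool_input]
```

For example, `missing_required_params(SCORE_CHECKER_TOOL, {})` returns `["score"]`, which tells you not to call the function yet.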

Let's run it with python3 yournamefile.py (replace yournamefile.py with the name of your file). You should see something along these lines:

```
Message(
  id='msg_01C1242anh7F8GzKvXDt1q2i',
  content=[
    TextBlock(text="Thank you for providing your score. Let's use the score_checker function to evaluate your result.", type='text'),
    ToolUseBlock(id='toolu_01RQnFNwvWPq3eV75NwdTqLz', input={'score': '0.6'}, name='score_checker', type='tool_use')
  ],
  model='claude-3-5-sonnet-20240620', role='assistant', stop_reason='tool_use', stop_sequence=None, type='message', usage=Usage(input_tokens=375, output_tokens=76)
)
```

The goal of this first call is to extract the score from the prompt. Claude returns it in the input field of the ToolUseBlock (here, {'score': '0.6'}) and sets stop_reason to 'tool_use'. The score comes back as a string because the schema declares it as "string".

Step 3: Calling the custom function

Now that we have the score, we can call the custom function.

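```python
dataFromCustomFunction = None

# Claude sets stop_reason to "tool_use" when it wants us to run a tool
if message.stop_reason == "tool_use":
    # Find the tool_use block instead of assuming it is always content[1]
    tool_use = next(block for block in message.content if block.type == "tool_use")
    tool_params = tool_use.input
    tool_name = tool_use.name

    # call available tool function
    if tool_name == "score_checker":
        # The schema declares score as a string, so convert it first
        result = score_checker(float(tool_params["score"]))
        dataFromCustomFunction = result
        print(result)
```

Notes: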

- We check the stop_reason value to confirm that Claude wants us to call a custom function.

- We grab the parameters from the tool_use block's input.

- We grab the name of the function we need to call.

- We run the function with the parameters we got in tool_params.
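The if tool_name == ... check works well for one tool. With several tools, a dictionary that maps tool names to Python callables keeps the dispatch in one place. This is a minimal sketch: TOOL_FUNCTIONS and run_tool_calls are hypothetical names. SimpleNamespace objects stand in for the SDK's content blocks, which expose the same type, id, name, and input attributes seen in the output above. With the real SDK, you would call run_tool_calls(message.content).

```python
from types import SimpleNamespace


def score_checker(score):
    # Same logic as the custom function from Step 1
    if score > 0.5:
        return "Pass"
    else:
        return "Fail"


# Hypothetical dispatch table: tool name -> callable receiving the tool's input dict
TOOL_FUNCTIONS = {
    "score_checker": lambda params: score_checker(float(params["score"])),
}


def run_tool_calls(content_blocks):
    """Run every tool_use block in a response and return {tool_use_id: result}."""
    results = {}
    for block in content_blocks:
        if block.type != "tool_use":
            continue  # skip text blocks such as Claude's preamble
        func = TOOL_FUNCTIONS.get(block.name)
        if func is None:
            results[block.id] = f"Unknown tool: {block.name}"
        else:
            results[block.id] = func(block.input)
    return results


# Fake blocks shaped like the Message output shown earlier
blocks = [
    SimpleNamespace(type="text", text="Let's use the score_checker function."),
    SimpleNamespace(type="tool_use", id="toolu_123", name="score_checker", input={"score": "0.6"}),
]
print(run_tool_calls(blocks))  # {'toolu_123': 'Pass'}
```

Adding a new tool then only means adding its definition to tools and one entry to the dictionary.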

At this step, we already have the answer from our custom function.

Step 4: Respond in natural language

We've prepared everything except one thing: we want to respond to the user's prompt in natural human language, not just return "Pass" or "Fail", which sounds super robotic. We want the answer to show some emotion!

That's why we'll make another call to Claude.

```python
response = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1000,
    messages=[
        {
            "role": "user",
            "content": "Answer to the customer. The customer exam status is " + dataFromCustomFunction
        }
    ]
)

print(response.content[0].text)
```

We pass the context along with the value returned by the custom function, and let Claude write the answer. Here is the result:

```
Congratulations! I'm pleased to inform you that your exam status is Pass. Well done on your successful performance. If you have any questions about your results or next steps, please don't hesitate to ask. Great job on your achievement!
```

The whole script took roughly 5.5 seconds to run.
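The step above starts a fresh conversation and pastes the result into a new prompt. Anthropic's tool use documentation describes another pattern. You send Claude's first reply back as an assistant turn, followed by a user turn containing a tool_result block that references the tool_use id. That way, Claude sees the original question, its own tool request, and the result together. Here is a minimal sketch of building that message list. build_tool_result_messages is a hypothetical helper. My understanding is that the follow-up call must also pass the same tools list again.

```python
def build_tool_result_messages(user_prompt, assistant_content, tool_use_id, result):
    """Return the messages for a follow-up call that reports a tool's result.

    assistant_content: Claude's first reply content (message.content), unchanged.
    tool_use_id: the id of the tool_use block Claude sent (e.g. "toolu_...").
    result: whatever our custom function returned.
    """
    return [
        {"role": "user", "content": user_prompt},
        {"role": "assistant", "content": assistant_content},
        {
            "role": "user",
            "content": [
                {
                    "type": "tool_result",
                    "tool_use_id": tool_use_id,
                    "content": str(result),  # tool output sent back as text
                }
            ],
        },
    ]


# Usage with the objects from Steps 2 and 3 (not run here):
# tool_use = next(b for b in message.content if b.type == "tool_use")
# response = client.messages.create(
#     model="claude-3-5-sonnet-20240620",
#     max_tokens=1000,
#     tools=[...],  # the same tool definitions as in Step 2
#     messages=build_tool_result_messages(
#         "I got the result. My score is 0.6",
#         message.content,
#         tool_use.id,
#         dataFromCustomFunction,
#     ),
# )
# print(response.content[0].text)
```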

Function calling + Internet

We've seen the basic implementation. Now let's replace the static function with a call to an external API, which lets our AI pull knowledge from the internet.

In this example, I'm going to use SerpApi's Google Direct Answer Box API. It returns the real-time answer box data that Google shows for a query, such as the current weather.

Step 1: Install SerpApi

Make sure to sign up at serpapi.com. Grab your API key from the dashboard and export it as before:

```bash
export SERPAPI_API_KEY=your_api_key
```

Install the SerpApi Python package:

```bash
pip install serpapi
```

Step 2: Declare SerpApi function

Let's prepare a function that calls the SerpApi API:

```python
import os

import serpapi


def get_search_result(query):
    client = serpapi.Client(api_key=os.getenv("SERPAPI_API_KEY"))
    results = client.search({
        "q": query,
        "engine": "google",
    })

    # Only the answer box is used; other sections of the results are ignored
    if "answer_box" not in results:
        return "No answer box found"

    return results["answer_box"]
```

For now, we are only interested in the answer_box section.
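Instead of sending the raw dictionary to Claude, you could condense it first. This is a sketch under assumptions: summarize_answer_box is a hypothetical helper that keeps only top-level text and number fields and skips nested lists or dicts, which tend to be long. Which keys exist depends on the query type, so the sample keys used in the example are made up for illustration.

```python
def summarize_answer_box(answer_box, max_chars=1000):
    """Turn an answer_box dict into compact 'key: value' lines.

    Only top-level strings and numbers are kept; nested lists/dicts are skipped.
    Non-dict values (e.g. "No answer box found") are returned as text.
    """
    if not isinstance(answer_box, dict):
        return str(answer_box)[:max_chars]
    lines = [
        f"{key}: {value}"
        for key, value in answer_box.items()
        if isinstance(value, (str, int, float))
    ]
    return "\n".join(lines)[:max_chars]


# Made-up sample shaped like a weather answer box
print(summarize_answer_box({"type": "weather_result", "temperature": "75", "forecast": [{"day": "Mon"}]}))
```

Because it always returns a string, its output can be concatenated into a prompt directly.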

Step 3: Adjust the message create function

Let's replace the custom tool we had previously with this one:

```python
import anthropic

client = anthropic.Anthropic()

message = client.messages.create(
    model="claude-3-5-sonnet-20240620",
    max_tokens=1000,
    tools=[
        {
            "name": "get_search_result",
            "description": "A function that takes a search query from the sentence and searches Google for the answer.",
            "input_schema": {
                "type": "object",
                "properties": {
                    "query": {
                        "type": "string"
                    }
                },
                "required": ["query"]
            }
        }
    ],
    messages=[
        {
            "role": "user",
            "content": "What's the weather in New York today"
        }
    ]
)
```

In case Claude doesn't request a tool, we can exit the program early.

```python
import sys

if message.stop_reason != "tool_use":
    print("End... No tool used")
    sys.exit()
```

Step 4: Call the custom functions

Now let's call the API when needed.

```python
# Only continue when tools needed
dataFromCustomFunction = None

if message.stop_reason == "tool_use":
    # Find the tool_use block instead of assuming it is always content[1]
    tool_use = next(block for block in message.content if block.type == "tool_use")
    tool_params = tool_use.input
    tool_name = tool_use.name

    # call available tool function
    if tool_name == "get_search_result":
        # get_search_result may return a dict, so convert it to a string
        # before it is concatenated into the prompt in the next step
        result = str(get_search_result(tool_params["query"]))

        # limit the response to 1000 characters only
        if len(result) > 1000:
            result = result[:1000] + "..."

        dataFromCustomFunction = result
        print(result)
```

Notes: I'm limiting the result to the first 1000 characters. You can adjust this depending on what data you need. If your custom function returns short results, you won't need to limit them.

Step 5: Answer in natural language

Now for the final step: let's call Claude to respond in natural human language based on the data we have.

response = client.messages.create(

model="claude-3-5-sonnet-20240620",

max_tokens=1000,

messages=[

{

"role": "user",

"content": "Answer to the customer in a nice way " + dataFromCustomFunction

}

]

)

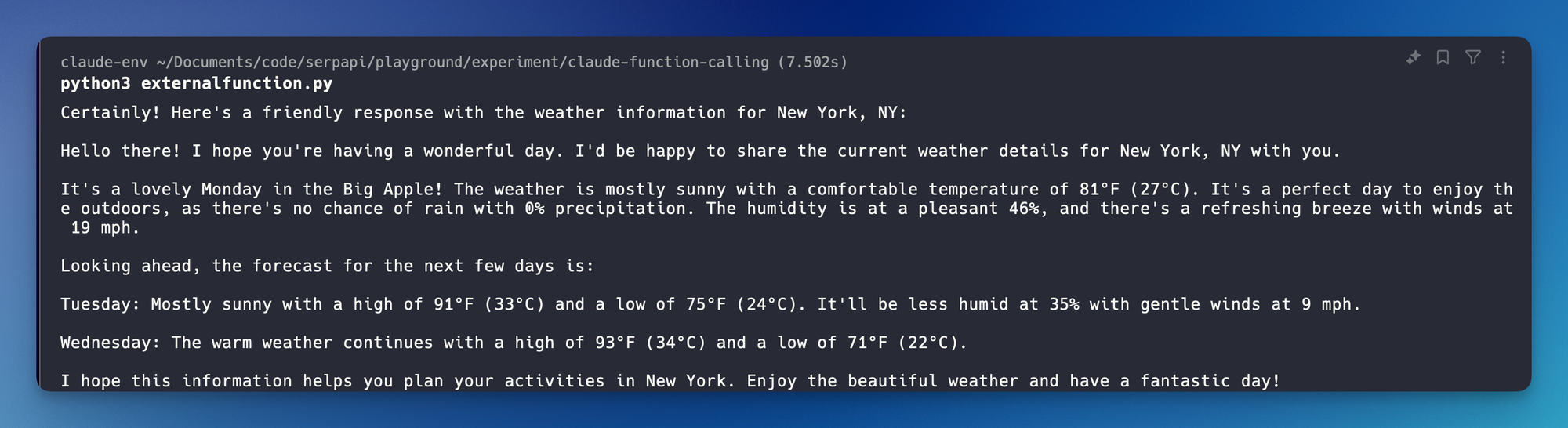

print(response.content[0].text)Here's the response I got upon running this program:

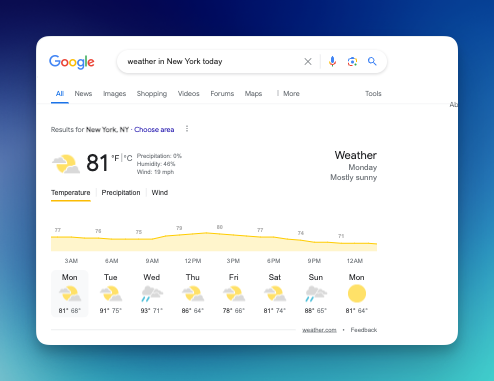

Let's double-check that this matches the result in Google's answer box.

That's it! I hope you enjoyed reading this blog post! Feel free to try calling other APIs using the same flow.
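To reuse this flow with other APIs, the steps can be wrapped in a loop. The loop keeps answering Claude's tool requests until it returns a final answer, meaning any stop_reason other than "tool_use". This sketch rests on a few assumptions. run_with_tools is a hypothetical helper, and functions is a dict that maps tool names to Python callables taking the tool's input dict. Results are reported back with tool_result blocks rather than with a fresh prompt as in the steps above.

```python
def run_with_tools(client, prompt, tools, functions,
                   model="claude-3-5-sonnet-20240620", max_rounds=5):
    """Answer Claude's tool_use requests until it returns a final text answer.

    functions: hypothetical mapping of tool name -> callable(input_dict).
    An unknown tool name raises KeyError.
    """
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_rounds):
        response = client.messages.create(
            model=model, max_tokens=1000, tools=tools, messages=messages
        )
        if response.stop_reason != "tool_use":
            # Final answer: join all text blocks
            return "".join(b.text for b in response.content if b.type == "text")

        # Echo Claude's turn back, then answer every tool_use block in it
        messages.append({"role": "assistant", "content": response.content})
        tool_results = []
        for block in response.content:
            if block.type == "tool_use":
                output = functions[block.name](block.input)
                tool_results.append({
                    "type": "tool_result",
                    "tool_use_id": block.id,
                    "content": str(output),
                })
        messages.append({"role": "user", "content": tool_results})

    raise RuntimeError("Too many tool-use rounds without a final answer")
```

With the SerpApi example, a call might look like `run_with_tools(anthropic.Anthropic(), "What's the weather in New York today?", tools=[...], functions={"get_search_result": lambda p: get_search_result(p["query"])})`, where tools is the same list as in Step 3.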