Intro

SerpApi released a new DuckDuckGo Maps API in August. You can use it to scrape location details from DuckDuckGo Maps.

In this tutorial, we will discuss some of the advantages the DuckDuckGo Maps API offers and create an implementation in JavaScript/Node.js based on a common use case: we will build a script to find all of the coffee shops in Austin, Texas.

If you do not need an explanation and just want to download and use the application, you can access the full code in the GitHub repository.

Advantages of SerpApi

SerpApi is a web-scraping API that streamlines the process of scraping data from search engines. This can also be done manually, but SerpApi provides several distinct advantages.

Besides providing real-time data in a convenient JSON format, SerpApi handles proxies, CAPTCHA solving, and the other techniques needed to keep searches from being blocked. You can click here for a comparison of scraping Google Search results manually vs. using SerpApi, or here for an overview of the techniques you can use to avoid getting blocked.

Advantages of the DuckDuckGo Maps API

If you are already familiar with SerpApi's Google Maps API, you may be wondering what the benefits of the DuckDuckGo Maps API are.

The primary advantage the DuckDuckGo Maps API has over the Google Maps API is its bbox parameter, which allows you to set specific boundaries for your search.

Let's take a look at a common use case. You want to scrape business listings in a particular city, and you want to make sure you get them all. You'd also like to avoid any duplicates, so as not to waste your searches.

With Google Maps, the only option is to increase the zoom parameter to its maximum value, estimate the radius of the search, and keep changing the GPS coordinates to try to cover the entire area.

The trouble with this approach is that Google doesn't use a consistent search radius. If there are few results near the GPS coordinates, you will get some results from further away. This makes it impossible to cover an area precisely without also having to filter out duplicates.

With DuckDuckGo Maps, on the other hand, we have a bbox parameter and a strict_bbox parameter. When strict_bbox is set to 1, only results within the area defined by bbox will be returned.
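Conceptually, strict_bbox=1 behaves like the client-side filter sketched below: a result is kept only if its coordinates fall inside the box. The bbox string uses the order described later in this tutorial (top-left latitude, top-left longitude, bottom-right latitude, bottom-right longitude). This is only an illustration of the idea, not how SerpApi implements the parameter, and the isInsideBbox name is hypothetical.

```javascript
// Hypothetical helper illustrating what strict_bbox=1 guarantees:
// a point is kept only if it lies inside the box.
// bbox format: "topLat,leftLon,bottomLat,rightLon"
function isInsideBbox(lat, lon, bbox) {
  const [top, left, bottom, right] = bbox.split(",").map(Number);
  return lat <= top && lat >= bottom && lon >= left && lon <= right;
}

// The bbox used in the first example of this tutorial
const austinBbox =
  "30.341552964181687,-97.87405344947078,30.16321730812698,-97.50702877159034";

console.log(isInsideBbox(30.2672, -97.7431, austinBbox)); // true: inside the box
console.log(isInsideBbox(30.5083, -97.6789, austinBbox)); // false: north of the box
```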

Prerequisites

- You will need to be somewhat familiar with JavaScript. You will have an easier time if you are familiar with ES6 syntax and features, as well as Node.js and npm.

- You need to have recent versions of Node.js and npm installed on your system. If you don’t already have Node.js and npm installed, you can visit the following link for help with this process: https://docs.npmjs.com/downloading-and-installing-node-js-and-npm

- You need to have an IDE or code editor that supports JavaScript and Node.js installed on your system. I recommend VS Code or Sublime Text, but any editor that supports Node.js will do.

- You will also need to sign up for a free SerpApi account at https://serpapi.com/users/sign_up.

Preparation

First, you will need to create a package.json file. Open a terminal window, create a directory for the project, and cd into the directory.

mkdir duckduckgo-maps-scraper

cd duckduckgo-maps-scraper

Create your package.json file:

npm init

The npm init command will walk you through the process of creating a package.json file.

After this, we need to make a few changes to the package.json file.

We will be using the ES Module system rather than CommonJS, so you need to add the line "type": "module" to your package.json.

{
  "name": "duckduckgo-maps-scraper",
  "version": "1.0.0",
  "description": "Find all of the coffee shops in an area using the SerpApi DuckDuckGo Maps API",
  "type": "module",
  "main": "index.js",

We will also add a start script for convenience:

  "scripts": {
    "start": "node index.js",
    "test": "echo \"Error: no test specified\" && exit 1"
  },

Next, we need to install our dependencies. For this project, we will use the SerpApi for JavaScript/TypeScript Node module and the dotenv module. If you're not familiar with dotenv, it is a module that loads environment variables (like API keys) from a .env file into process.env.

npm install serpapi dotenv

If you haven’t already signed up for a free SerpApi account, go ahead and do that now by visiting https://serpapi.com/users/sign_up and completing the signup process.

Once you have signed up, verified your email, and selected a plan, navigate to https://serpapi.com/manage-api-key and click the Clipboard icon to copy your API key.

Then create a new file in your project directory called .env and add the following line:

SERPAPI_KEY="PASTE_YOUR_API_KEY_HERE"
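If the key doesn't load, every request will fail. A small check like the one below, run right after dotenv.config(), can catch a missing key or one pasted with curly quotes (which would become part of the value). The readApiKey name and the specific checks are illustrative assumptions; they are not part of the serpapi or dotenv packages.

```javascript
// Hypothetical helper: validate the API key read from the environment.
// Intended to be called after dotenv.config(), e.g. readApiKey(process.env).
function readApiKey(env, name = "SERPAPI_KEY") {
  const value = env[name];
  if (!value) {
    throw new Error(`${name} is not set; check your .env file`);
  }
  // Curly quotes copied from a web page would end up inside the key itself.
  if (/[“”‘’]/.test(value)) {
    throw new Error(`${name} contains curly quotes; use straight quotes or none`);
  }
  return value.trim();
}
```

In index.js you could then write config.api_key = readApiKey(process.env); instead of assigning process.env.SERPAPI_KEY directly.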

Scraping DuckDuckGo Maps Search Results with the SerpApi DuckDuckGo Maps API

We're ready to write the code. Let's start with a simple call to the DuckDuckGo Maps API.

Create a file in your project directory called index.js and add the following code:

import { config, getJson } from "serpapi";
import * as dotenv from "dotenv";

dotenv.config();
config.api_key = process.env.SERPAPI_KEY;

const params = {
  engine: "duckduckgo_maps",
  q: "Coffee",
  bbox: "30.341552964181687,-97.87405344947078,30.16321730812698,-97.50702877159034",
  strict_bbox: "1"
};

function getResults() {
  getJson(params, (results) => {
    console.log(results);
  });
}

getResults();

Run npm start in your terminal. A list of coffee shops and their details should be printed.

Now that we know the call to SerpApi is working, let's remove the hardcoded q and bbox values from params and pass them to our getResults() function as arguments instead:

import { config, getJson } from "serpapi";
import * as dotenv from "dotenv";

dotenv.config();
config.api_key = process.env.SERPAPI_KEY;

const params = {
  engine: "duckduckgo_maps",
  strict_bbox: "1"
};

function getResults(q, bbox) {
  params.q = q;
  params.bbox = bbox;

  getJson(params, (results) => {
    console.log(results);
  });
}

Searching in a Grid Pattern

The main limitation of DuckDuckGo Maps is that it never returns more than 20 results per search, and it doesn't have a pagination feature. So to get all possible results, you need to zoom in close enough that you can be sure no single search area contains more than 20 results, and then iterate over the area you want to search in a grid pattern.
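The grid idea can be sketched without any API calls as a generator that yields one bbox string per grid square, starting at the top-left corner of the area and working right, then down. The gridCells name is hypothetical; it assumes the "topLat,leftLon,bottomLat,rightLon" order, a negative latitude offset (moving south), and a positive longitude offset (moving east). Running it on its own is a cheap way to check a grid before spending any searches.

```javascript
// Hypothetical generator: yields a bbox string for each grid square.
// area: [topLat, leftLon, bottomLat, rightLon]
// latOffset must be negative (south), lonOffset positive (east).
function* gridCells(area, latOffset, lonOffset) {
  const [top, left, bottom, right] = area;
  if (latOffset >= 0 || lonOffset <= 0) {
    throw new Error("Expected latOffset < 0 and lonOffset > 0");
  }
  // Rows: keep going while the top edge is above the bottom border.
  for (let lat = top; lat > bottom; lat += latOffset) {
    // Columns: keep going while the left edge is left of the right border.
    for (let lon = left; lon < right; lon += lonOffset) {
      yield [lat, lon, lat + latOffset, lon + lonOffset].toString();
    }
  }
}

// Dry run on a small example area: prints four bbox strings.
for (const bbox of gridCells([1, 0, 0, 1], -0.5, 0.5)) {
  console.log(bbox);
}
```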

Determining the GPS Coordinates

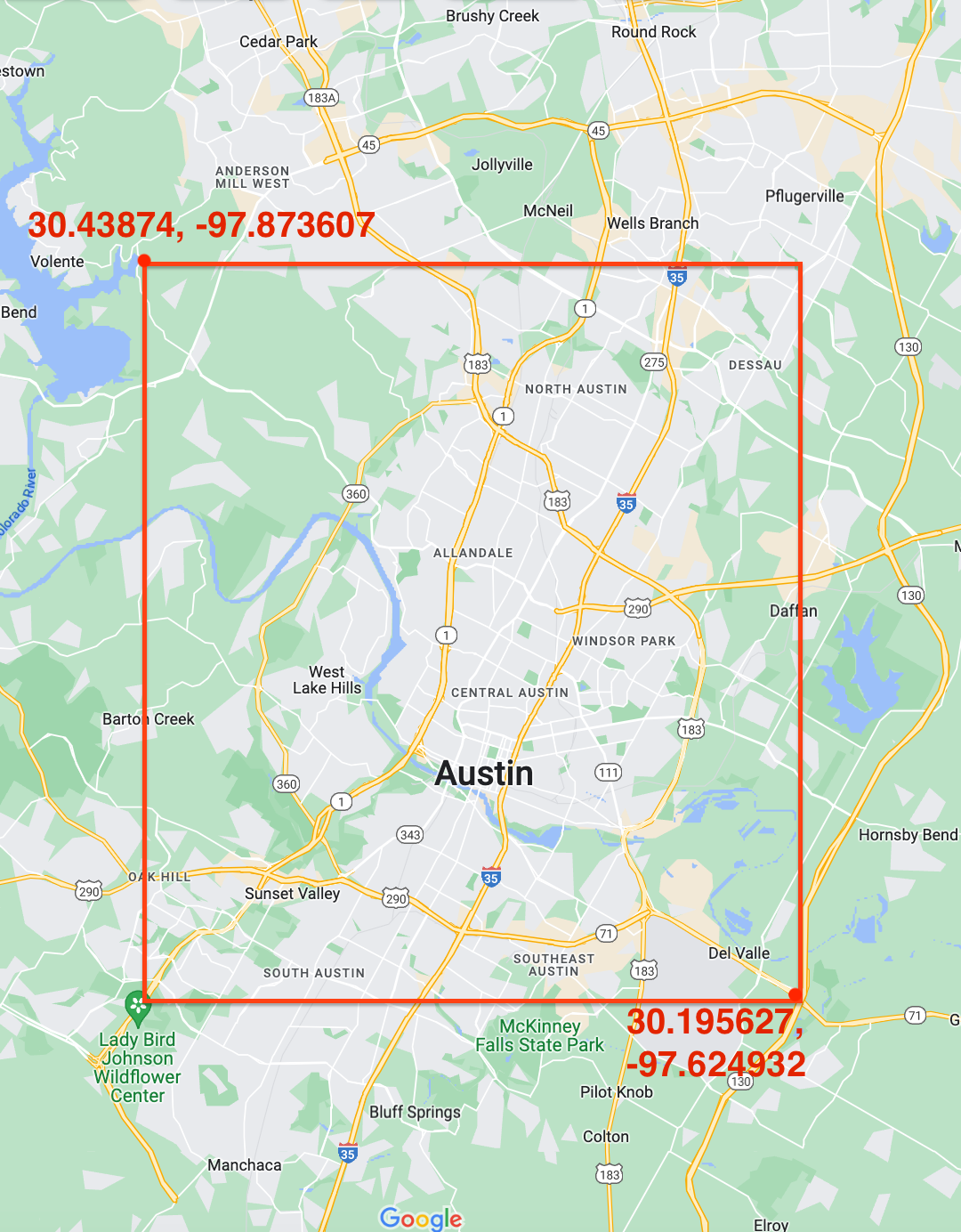

To get all of the coffee shops in Austin, Texas, we first need to define the GPS coordinates for Austin. We can get these by opening a view of Austin in Google Maps, and right-clicking where we want the corners to be.

In a real-life application, we might be more particular about defining the area we want to search, but for this example, we will use the area shown below:

Calculating the Grid Size

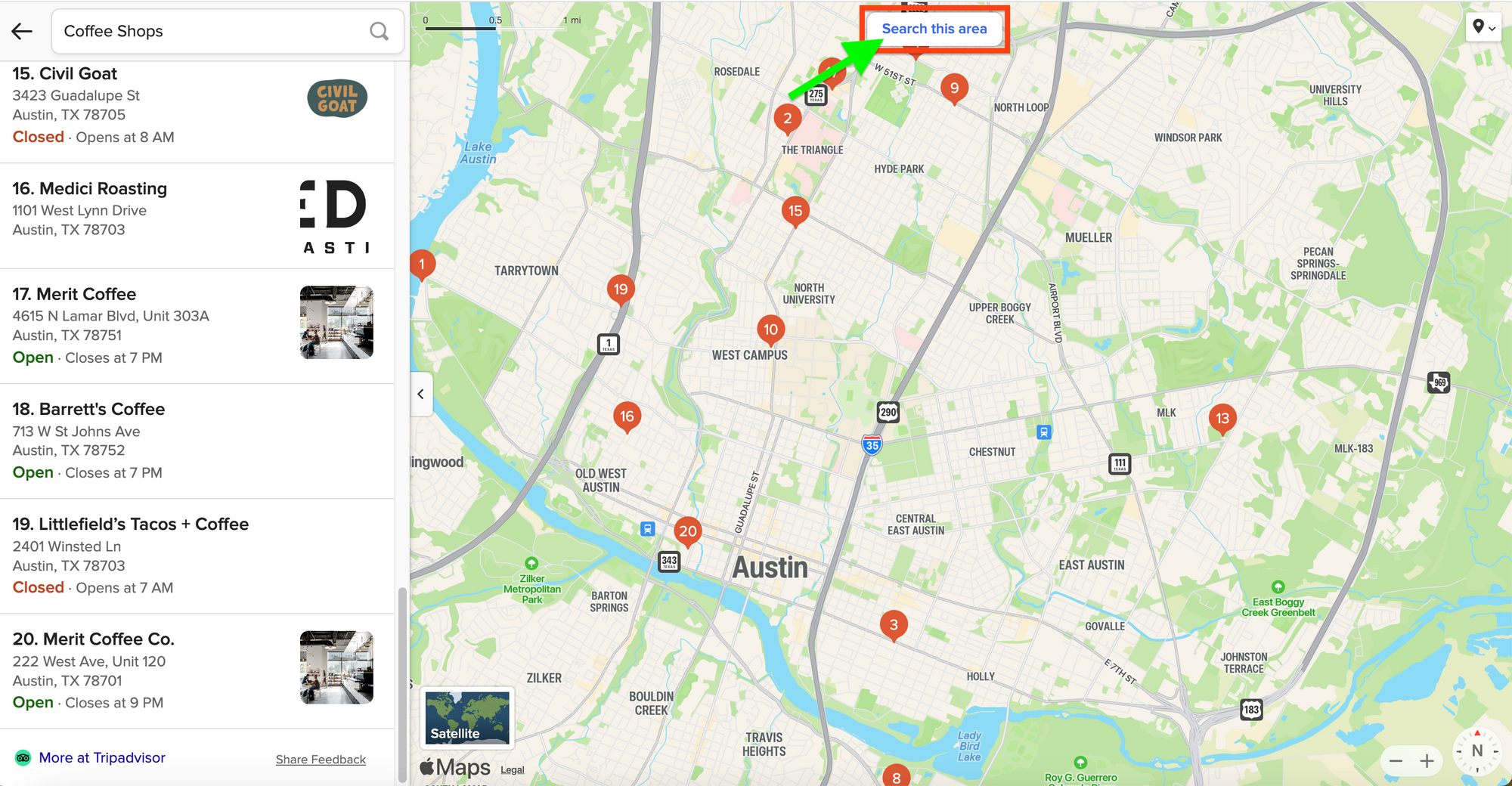

Now we need to determine the optimal grid size. We open DuckDuckGo in the browser and search for "Austin, TX".

We want to find all of the coffee shops in Austin, so we search for "Coffee".

We then navigate to the area where we expect the highest density of results. Let's assume downtown Austin has the most coffee shops. We navigate to downtown Austin in the map window, zoom in, and click the "Search this area" button at the top of the map.

There should be 20 results the first time we do this. If not, we need to zoom out.

Then we zoom in little by little, clicking the "Search this area" button each time, until only 19 results are returned. We move the map window around a little and click the button again to make sure no nearby view returns 20 results.

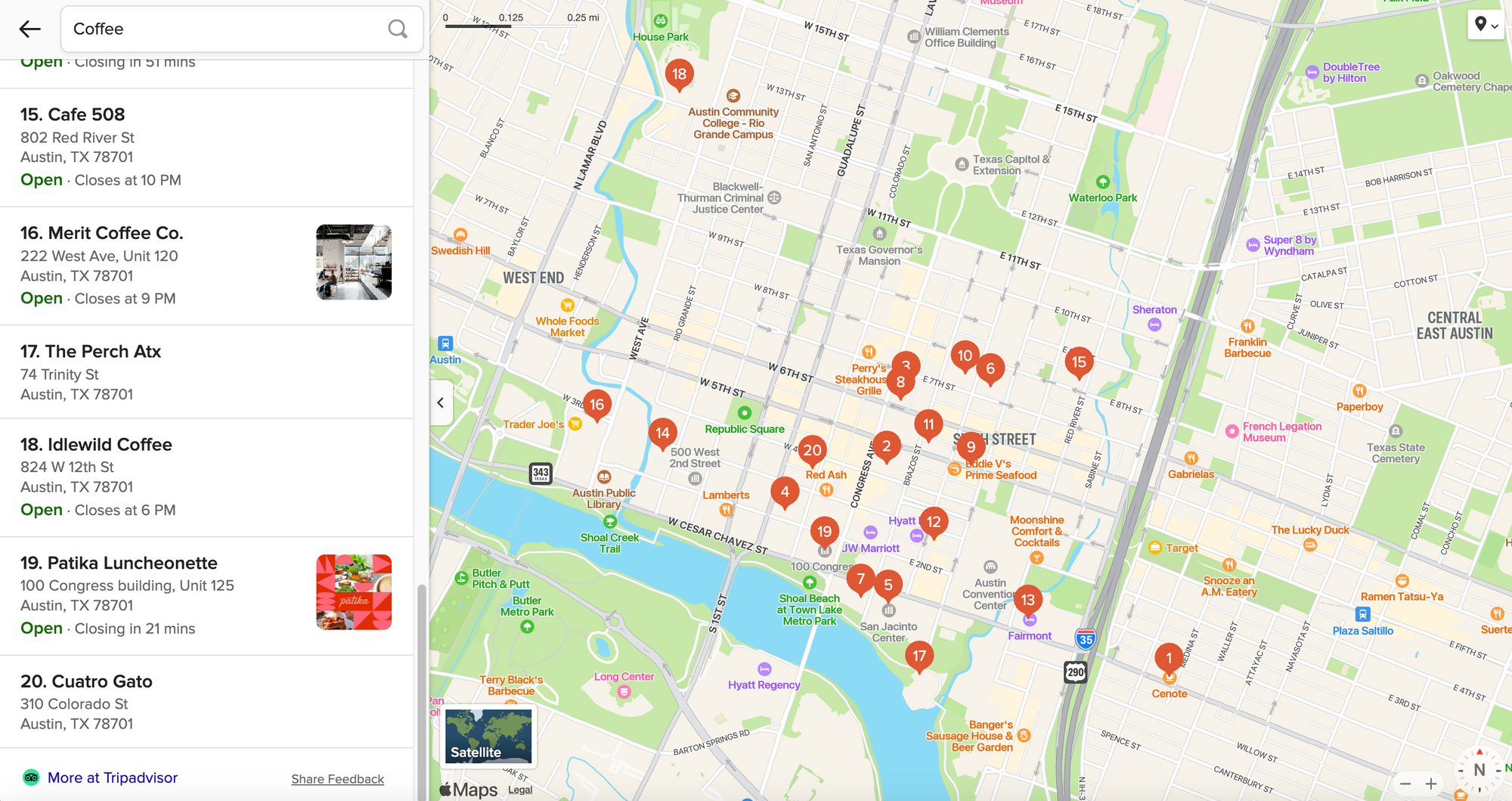

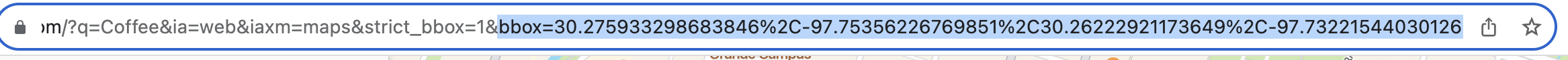

Now that we've determined the appropriate zoom level, we need to extract the bbox value from the URL in the address bar to determine the size of our latitude and longitude offsets.

The bbox parameter includes two sets of GPS coordinates. The first latitude-longitude pair represents the top-left corner of a box, and the second pair represents the bottom-right corner.
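Because the bbox value is just four comma-separated numbers in that order, the offset calculation that follows can also be done in code. The offsetsFromBbox() helper below is hypothetical; it takes the bbox string copied from the address bar (not the whole URL) and subtracts the top-left pair from the bottom-right pair.

```javascript
// Hypothetical helper: derive grid offsets from a bbox string.
// bbox format: "topLat,leftLon,bottomLat,rightLon"
function offsetsFromBbox(bbox) {
  const [top, left, bottom, right] = bbox.split(",").map(Number);
  return {
    latOffset: bottom - top, // negative: each row moves south
    lonOffset: right - left, // positive: each column moves east
  };
}

const { latOffset, lonOffset } = offsetsFromBbox(
  "30.275933298683846,-97.75356226769851,30.26222921173649,-97.73221544030126"
);
console.log(latOffset, lonOffset);
```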

To move from top-left to bottom-right in a grid pattern, we need to subtract the first pair from the second to get the offsets. So if our bbox parameter is bbox=30.275933298683846,-97.75356226769851,30.26222921173649,-97.73221544030126, we need to do the following calculations:

30.26222921173649 - 30.275933298683846 ≈ -0.01370408694 (latitude offset)

-97.73221544030126 - (-97.75356226769851) ≈ 0.02134682739 (longitude offset)

The latitude offset is negative because moving down the map means moving south, and the longitude offset is positive because moving right means moving east.

Looping Over the Grid

For simplicity, let's define some constants for the area we want to search and the latitude and longitude offsets:

const area = [30.43874, -97.873607, 30.195627, -97.624932];
const lat_offset = -0.01370408694;
const lon_offset = 0.02134682739;

Now add the following function:

function findAll(q, area, lat_offset, lon_offset) {
  // The first grid square starts at the top-left corner of the area
  const coords = [area[0], area[1], area[0] + lat_offset, area[1] + lon_offset];

  // Loop while the top edge of the window is above the bottom border of the area
  while (coords[0] > area[2]) {
    // Loop while the left edge of the window is left of the right border of the area
    while (coords[1] < area[3]) {
      const coords_string = coords.toString();
      console.log(coords_string);
      getResults(q, coords_string);
      // Move one square to the right
      coords[1] += lon_offset;
      coords[3] += lon_offset;
    }
    // Go back to the left edge of the area for the next row
    coords[1] = area[1];
    coords[3] = area[1] + lon_offset;
    // Move one row down
    coords[0] += lat_offset;
    coords[2] += lat_offset;
  }
}

In the code above, we first define a coords array with the GPS coordinates for the first grid section we want to search in.

We then create a nested while loop to move through all of the grid squares from top-left to bottom-right. After each row, the window moves back to the left edge of the area and down by one row.
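Every grid square costs one search, so it can help to estimate the total before running the loop. The estimateSearches() helper below is a hypothetical sketch: it divides the height and width of the area by the offsets and rounds each up, which should match the number of squares the nested loop visits (floating-point rounding aside). The area array repeats the tutorial's constant.

```javascript
// Hypothetical helper: estimated number of searches (one per grid square).
// area: [topLat, leftLon, bottomLat, rightLon]; assumes latOffset < 0, lonOffset > 0.
function estimateSearches(area, latOffset, lonOffset) {
  const [top, left, bottom, right] = area;
  const rows = Math.ceil((top - bottom) / -latOffset);
  const cols = Math.ceil((right - left) / lonOffset);
  return rows * cols;
}

const area = [30.43874, -97.873607, 30.195627, -97.624932];
console.log(estimateSearches(area, -0.01370408694, 0.02134682739)); // 216
console.log(estimateSearches(area, -0.5, 0.5)); // 1
```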

Before adding a call to the findAll() function and running it from the terminal, we need to make a few more changes.

Right now, the getResults() function will print the full JSON response for each search. Let's modify it to just print the number of results for each search, and the names of all of the locations returned:

function getResults(q, bbox) {
  params.q = q;
  params.bbox = bbox;

  getJson(params, (results) => {
    const local_results = results.local_results;

    if (local_results) {
      console.log(local_results.length + " results");
      for (const result of local_results) {
        console.log(result.title);
      }
    }
  });
}

Another consideration is that we've defined very small grid squares for our search, which means it will use a lot of search credits to complete. Before running the code with the offsets we calculated, let's test it with some larger offsets to reduce the number of search credits used:

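findAll("coffee", area, -0.5, 0.5);

Note that the latitude offset must stay negative: with a positive value, the loop would move north instead of south and never finish. After adding the call to findAll at the bottom of index.js, type npm start into your terminal.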

If you did everything correctly, you should see a list of coffee shops in Austin printed out.
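As written, findAll() starts every search immediately without waiting for earlier ones to finish, because getResults() doesn't wait for getJson() to complete. If you would rather run the searches one at a time (for example, to keep the output in grid order or to stop at the first error), a generic helper like the hypothetical runSequentially() below can await each call in turn. It assumes the search function returns a Promise, which the serpapi package's getJson() does.

```javascript
// Hypothetical helper: run an async search for each bbox, one at a time.
// searchFn(bbox) must return a Promise (for example, a wrapper around getJson).
async function runSequentially(bboxes, searchFn) {
  const results = [];
  for (const bbox of bboxes) {
    // Wait for this search to finish before starting the next one.
    results.push(await searchFn(bbox));
  }
  return results;
}
```

In index.js this might look like await runSequentially(bboxes, (bbox) => getJson({ ...params, q: "coffee", bbox })), where bboxes is an array of bbox strings collected from the grid loop instead of calling getResults() inside it. Top-level await works here because the project uses ES modules.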

Now, to get the maximum number of coffee shops, replace the test call with one that uses the constants we defined for lat_offset and lon_offset:

findAll("coffee", area, lat_offset, lon_offset);

If you run this, you should see a long list of results printed in the terminal, and you shouldn't see any duplicates.
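With strict_bbox set to 1, duplicates should be rare, but a place sitting exactly on the border between two grid squares could conceivably be returned by both searches. If you collect results into an array, a small pass like the hypothetical dedupeResults() below can act as a safeguard. It assumes each result has title and address fields; check an actual DuckDuckGo Maps response in the SerpApi Playground and key on whichever fields identify a place reliably.

```javascript
// Hypothetical helper: drop repeated places, keeping the first occurrence.
// Assumes each result has a `title` and, optionally, an `address` field.
function dedupeResults(results) {
  const seen = new Set();
  return results.filter((result) => {
    const key = `${result.title}|${result.address ?? ""}`;
    if (seen.has(key)) return false;
    seen.add(key);
    return true;
  });
}
```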

Conclusion

We have seen how to scrape local results from DuckDuckGo Maps using the DuckDuckGo Maps API, and how to use the bbox parameter to iterate over a grid and get as many results as possible. You now have a template you can modify in any way you need.

I hope you found the tutorial informative and easy to follow. If you have any questions, feel free to contact me at ryan@serpapi.com.

Links:

Full code on GitHub

SerpApi DuckDuckGo Maps API Documentation

SerpApi Playground

Google Local Services API

Baidu Search API

Bing Search API

DuckDuckGo Search API

Ebay Search API

Walmart Search API

The Home Depot Search API

Naver Search API