When talking with an AI, you'll often have follow-up questions after the first answer. Let's see what happens when we send a second question to the DeepSeek API.

Here's my Python code that calls the chat completions method twice:

import os
from openai import OpenAI
from dotenv import load_dotenv

load_dotenv()
DEEPSEEK_API_KEY = os.getenv('DEEPSEEK_API_KEY')

client = OpenAI(api_key=DEEPSEEK_API_KEY, base_url="https://api.deepseek.com")

# First question
messages = [{"role": "user", "content": "I really like Pokemon. Can you tell me one fun fact about it?"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)
print(response.choices[0].message.content)

# Second question
# Note: this is a brand-new list, so the first question and its answer are not sent
messages = [{"role": "user", "content": "What do you think about that?"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)
print("\n--------------\n")
print(response.choices[0].message.content)

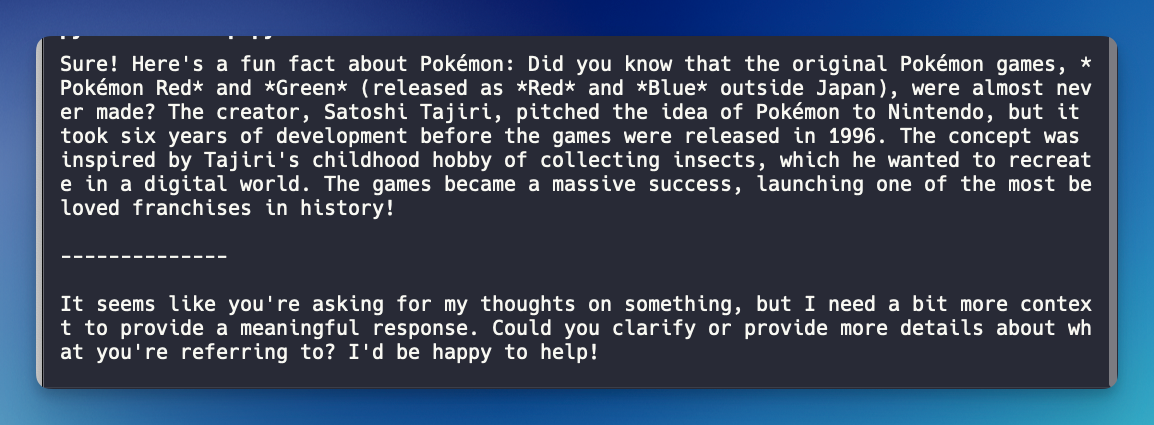

My two questions are:

- I really like Pokemon. Can you tell me one fun fact about it?

- What do you think about that?

Here is the response

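As you can see, the second response shows that the AI model is confused about what we're asking. The second request contains only the second question, so the model has no way to know what "that" refers to.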

How to remember a conversation?

The DeepSeek API is stateless: the server keeps no record of earlier requests. If you send multiple requests, even from the same file, the model won't remember the previous conversation.

Multi-round conversation

The DeepSeek API documentation recommends the following method:

Combine all previous conversation history and pass it to the chat API for each request.

So, the idea is to keep every question and every response in one message list, and to send the whole list on each turn. This way, the AI model always has the context of our conversation.

Here is the Python code sample:

import os
from openai import OpenAI
from dotenv import load_dotenv

load_dotenv()
DEEPSEEK_API_KEY = os.getenv('DEEPSEEK_API_KEY')

client = OpenAI(api_key=DEEPSEEK_API_KEY, base_url="https://api.deepseek.com")

# Round 1
messages = [{"role": "user", "content": "I really like Pokemon. Can you tell me one fun fact about it?"}]
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)
# Add the assistant's reply to the history so the next request includes it
messages.append(response.choices[0].message)
print(f"Messages Round 1: {messages}")
print("\n--------------\n")

# Round 2
# Append to the same list instead of creating a new one
messages.append({"role": "user", "content": "What do you think about that?"})
response = client.chat.completions.create(
    model="deepseek-chat",
    messages=messages
)

# In case you want to continue the conversation, you can append the response to the messages list like this:
# messages.append(response.choices[0].message)

# Otherwise, you can print the response like this:
print(response.choices[0].message.content)

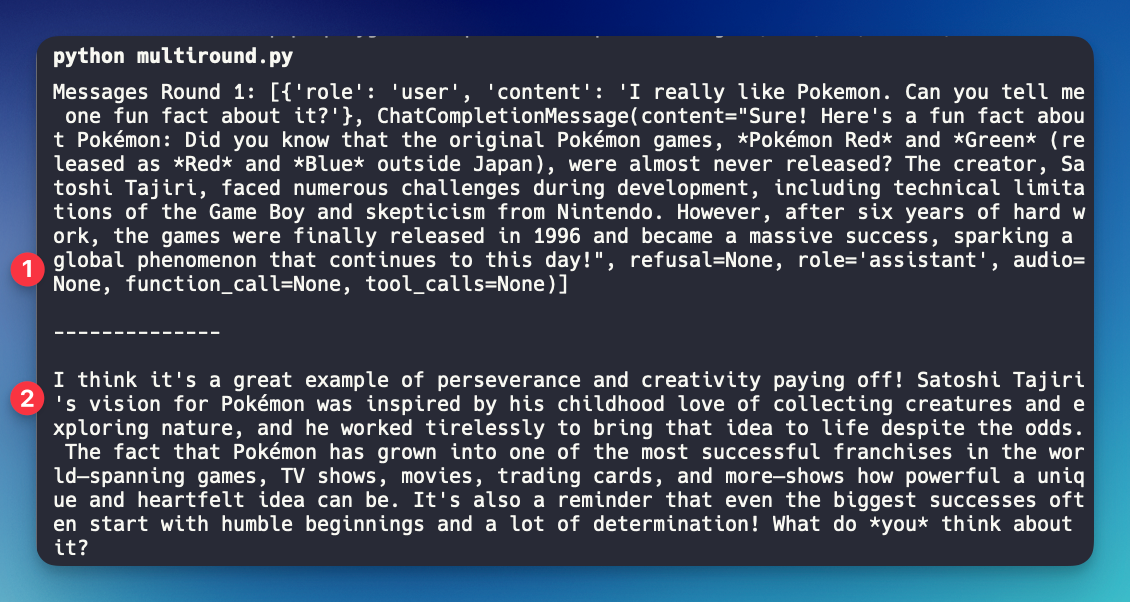

Here is the result:

It works! Since we send the complete conversation with every request, the model now has the full context of our discussion. As you might have guessed, the longer the conversation, the more tokens each request requires.
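If the conversation goes on for more than two rounds, the append-and-resend pattern can be wrapped in a small helper. The following is only a minimal sketch: `ask` and `fake_complete` are hypothetical names, not part of the DeepSeek API or the OpenAI SDK, and `fake_complete` stands in for the real API call so the example runs without a network connection or an API key. It also stores replies as plain dictionaries, which is an assumption made for readability.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def ask(history: List[Message], question: str,
        complete: Callable[[List[Message]], str]) -> str:
    """Send the full history plus a new question, then record the reply."""
    history.append({"role": "user", "content": question})
    # The whole list is sent on every turn; the API itself keeps no memory.
    answer = complete(history)
    history.append({"role": "assistant", "content": answer})
    return answer


def fake_complete(messages: List[Message]) -> str:
    # Hypothetical stand-in for the real API call, so the sketch runs offline.
    return f"(reply based on {len(messages)} message(s))"


history: List[Message] = []
first = ask(history, "I really like Pokemon. Can you tell me one fun fact about it?", fake_complete)
second = ask(history, "What do you think about that?", fake_complete)

print(first)         # the first request carried 1 message
print(second)        # the second request carried 3 messages
print(len(history))  # 4 messages are stored after two rounds
```

With the real client, `complete` could be a small function that calls `client.chat.completions.create(model="deepseek-chat", messages=msgs)` and returns `response.choices[0].message.content`. The printed counts show why the token usage grows: each request carries every earlier message again.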

The Alternative

In the past, I've experimented with another approach using Groq: summarize the conversation so far and send that summary instead of the full history, which shortens the text we pass to the model.

This method also has some caveats:

- The summary could drop details that turn out to matter later in the conversation.

- It requires an extra request to summarize the conversation.
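To make the summarizing idea more concrete, here is a rough sketch of one way it could look. Everything in it is an assumption for illustration: `compact_history`, `keep_last`, and `fake_summarize` are hypothetical names, the summary is placed in a `system` message (one possible choice among several), and the messages are assumed to be plain dictionaries. In a real app, `summarize` would be the extra request to the model mentioned above.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def compact_history(messages: List[Message],
                    summarize: Callable[[str], str],
                    keep_last: int = 2) -> List[Message]:
    """Replace older messages with one summary message; keep the newest as-is."""
    if len(messages) <= keep_last:
        return list(messages)
    split = len(messages) - keep_last
    older, recent = messages[:split], messages[split:]
    transcript = "\n".join(f"{m['role']}: {m['content']}" for m in older)
    # This is the extra request: a real `summarize` would call the model here.
    summary = summarize(transcript)
    summary_message = {
        "role": "system",
        "content": f"Summary of the conversation so far: {summary}",
    }
    return [summary_message] + recent


def fake_summarize(transcript: str) -> str:
    # Hypothetical stand-in for a model call, so the sketch runs offline.
    return f"{len(transcript.splitlines())} earlier messages about Pokemon"


history = [
    {"role": "user", "content": "I really like Pokemon. Can you tell me one fun fact about it?"},
    {"role": "assistant", "content": "Here is a fun fact."},
    {"role": "user", "content": "What do you think about that?"},
    {"role": "assistant", "content": "I think it is interesting."},
]
compacted = compact_history(history, fake_summarize, keep_last=2)
print(len(compacted))
print(compacted[0]["content"])
```

The two most recent messages are kept word for word, so only the older part of the conversation is exposed to the loss of detail described in the first caveat.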

That's it! I hope this helps you build a conversational AI app using the DeepSeek API.

In case you missed it, learn the basics of DeepSeek API here: