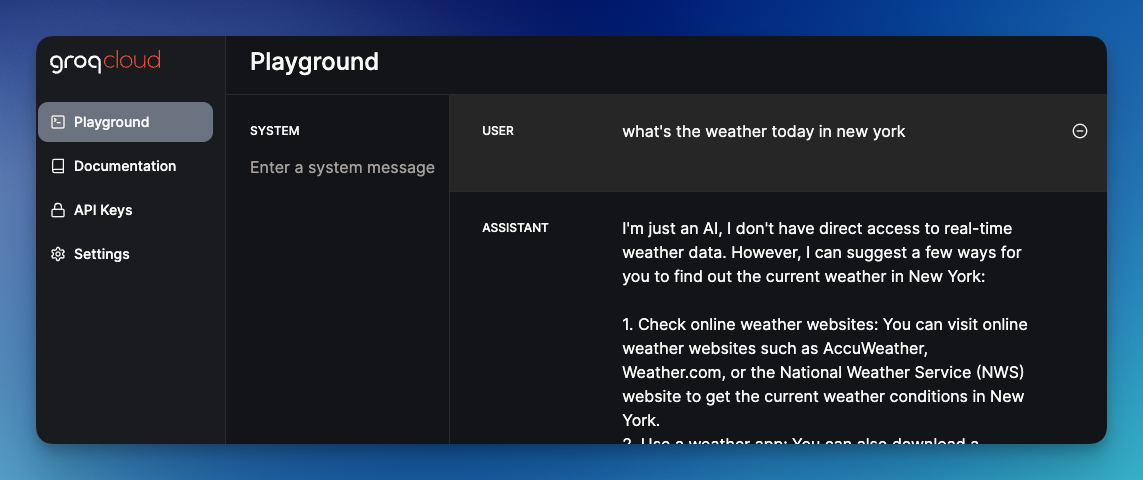

We've seen how fast an AI can run on the Groq API (feel free to read: Create a super fast AI assistant with Groq). But here is what happens if we ask Groq for real-time data. It tells us, "I'm just an AI, I don't have direct access to real-time data ...":

In this post, we'll see how to enable the Groq API to detect when a user's inquiry needs data from the internet and to parse the correct arguments from the conversation to call a third-party API.

Tool use in Groq

We call this tool use or function calling. Tool use is the broader term, since there are usually several tools available depending on the platform or the model: for example, code interpreters, PDF readers, etc.

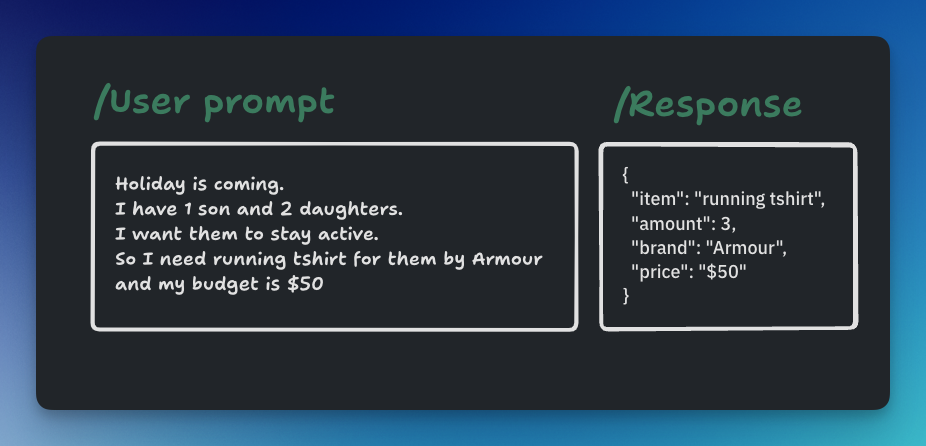

Here is what the function call can do for us:

It can turn a human message into structured JSON that is ready to be passed as arguments to a custom function.

"Groq API endpoints support tool use for programmatic execution of specified operations through requests with explicitly defined operations. With tool use, Groq API model endpoints deliver structured JSON output that can be used to directly invoke functions from desired codebases." - Groq documentation.
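To make that concrete, here is a minimal sketch of what such structured output can look like and how it becomes function arguments. The field names (`id`, `function.name`, `function.arguments`) match the ones read by the code later in this post; the `id` value and the query text are made up for illustration.

```javascript
// Hypothetical tool call, shaped like the entries of `message.tool_calls`
// that are read later in this post. The id and the query are invented.
const sampleToolCall = {
  id: "call_example_123",
  type: "function",
  function: {
    name: "getSearchResult",
    // The arguments arrive as a JSON *string*, not as an object
    arguments: '{"query": "weather in Austin today"}',
  },
};

// Parse the JSON string into a plain object we can hand to our own code
function parseToolArguments(toolCall) {
  return JSON.parse(toolCall.function.arguments);
}

const args = parseToolArguments(sampleToolCall);
console.log(sampleToolCall.function.name, args.query);
```

The model never runs anything itself; it only names a function and supplies the arguments, and our program decides what to do with them.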

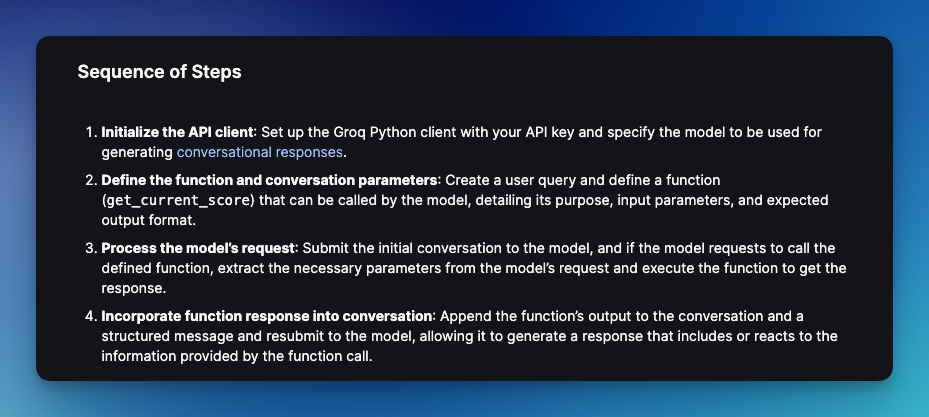

It's similar to function calling in OpenAI. Read more about connecting OpenAI to the internet here.

Here is the sequence of steps that are needed:

Sample API to use

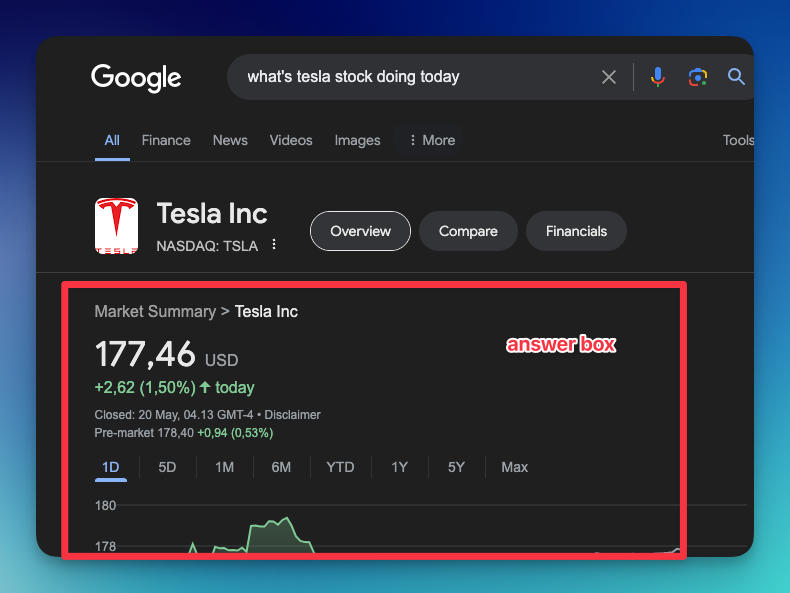

Google often provides an answer in the answer box. Answers to questions like "What's the weather today?" or "What's the current stock of Tesla?" can be found immediately in the answer box.

We'll use SerpApi - Google answer box API to collect this result programmatically. So, this is the "custom API" we'll call from our tool at Groq, when it's needed.

Please note that you can use any API or build a custom function on your code. It doesn't have to be this API.
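For instance, a tool does not need to touch the network at all. The sketch below pairs a local function with a tool description in the same JSON format used later in this post. `getCurrentTime` and its `timeZone` parameter are hypothetical names chosen for illustration only.

```javascript
// Hypothetical local tool: returns the current time in a given IANA time zone
function getCurrentTime(timeZone) {
  return new Intl.DateTimeFormat("en-US", {
    timeZone,
    dateStyle: "full",
    timeStyle: "long",
  }).format(new Date());
}

// Tool description the model would see (same structure as the tools array
// shown later in this post)
const timeTool = {
  type: "function",
  function: {
    name: "getCurrentTime",
    description: "Get the current date and time in a given time zone",
    parameters: {
      type: "object",
      properties: {
        timeZone: {
          type: "string",
          description: "IANA time zone name, e.g. America/Chicago",
        },
      },
      required: ["timeZone"],
    },
  },
};

console.log(getCurrentTime("America/Chicago"));
```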

Video Tutorial

If you prefer to watch a video, here's the video explanation of this blog post

As you can see, using a Web Search API like SerpApi will enable your AI model to access real-time data from the internet.

Function call example in Groq

Code time! These are step-by-step instructions on how to use function calling with Groq. I'm using Node.js. Feel free to use any language you want.

Here is the full source code on GitHub:

Step 1: Install dependencies

Let's install express for routing, groq-sdk itself, and SerpApi as the external API that we want to call.

npm i groq-sdk express dotenv serpapi --save

Step 2: Add your env key

Make sure to register at Groq and SerpApi. You can grab the API keys from each of these sites. Create a new .env file and paste your keys here:

GROQ_API_KEY=

SERPAPI_KEY=

Step 3: Basic setup

Create a new index.js file for our program.

const express = require('express');

// Express Setup

const app = express();

app.use(express.json());

const port = 3000

require("dotenv").config();

const { GROQ_API_KEY, SERPAPI_KEY } = process.env;

// GROQ Setup

const Groq = require("groq-sdk");

const groq = new Groq({

apiKey: GROQ_API_KEY

});

const model = "llama3-8b-8192"

// External API to call

const { getJson } = require("serpapi");

async function getSearchResult(query) {

// ...

}

async function run_conversation(message) {

//...

}

app.post('/test', async (req, res) => {

const { message } = req.body;

const reply = await run_conversation(message)

res.send({

reply

})

})

app.listen(port, () => {

console.log(`Example app listening on port ${port}`)

})

Step 4: Prepare our custom function

As mentioned, we want to implement a function that Groq can call. I'm using Google Answer box API by SerpApi for this. Here's the implementation of the getSearchResult function:

async function getSearchResult(query) {

console.log('------- CALLING AN EXTERNAL API ----------')

console.log('Q: ' + query)

try {

const json = await getJson({

engine: "google",

api_key: SERPAPI_KEY,

q: query,

location: "Austin, Texas",

});

return json['answer_box'];

} catch(e) {

console.log('Failed running getJson method')

console.log(e)

return

}

}

As you can see, I'm returning only the answer_box from the response, since we don't care about the rest of the response for this use case. We'll call this function later.
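One detail worth guarding against: when Google shows no answer box, `json['answer_box']` is `undefined`, and `JSON.stringify(undefined)` also returns `undefined` rather than a string. Below is a minimal sketch of a helper that always produces a string for the model. `toToolContent`, the fallback wording, and the sample fields are hypothetical; they are not part of SerpApi or Groq.

```javascript
// Hypothetical helper: turn whatever the custom function returned into a
// string that is safe to send back to the model as tool output.
function toToolContent(result) {
  if (result === undefined || result === null) {
    // No answer box (or the API call failed): say so explicitly
    return JSON.stringify({ error: "No answer box found for this query" });
  }
  return JSON.stringify(result);
}

// Invented sample data, only to show both branches
console.log(toToolContent({ type: "weather_result", temperature: "75" }));
console.log(toToolContent(undefined));
```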

Step 5: Calling custom function from Groq

Now, let's see what our run_conversation method looks like. It's pretty long, but bear with me, I'll explain it soon:

async function run_conversation(message) {

// Let the LLM know about the custom function

const messages = [

{

"role": "system",

"content": `You are a function-calling LLM that uses the data extracted from the getSearchResult function to answer questions that need real-time data`

},

{

"role": "user",

"content": message,

}

]

// Prepare our custom function

const tools = [

{

"type": "function",

"function": {

"name": "getSearchResult",

"description": "Get real time data answer from Google answer box",

"parameters": {

"type": "object",

"properties": {

"query": {

"type": "string",

"description": "The query to search for in Google",

}

},

"required": ["query"],

},

},

}

]

// Run the chatCompletion method

const chatCompletion = await groq.chat.completions.create({

messages,

model,

tools,

tool_choice: "auto",

max_tokens: 4096

});

const response_message = chatCompletion.choices[0]?.message

const tool_calls = response_message.tool_calls

// If no tool calls needed, we can return the response as it is

if(!tool_calls) {

const respond = response_message.content

return respond

}

const available_functions = {

"getSearchResult": getSearchResult,

} // You can have multiple functions

// Since there could be more than one function, we need to loop the result

for(let i=0; i<tool_calls.length; i++){

const tool_call = tool_calls[i]

const functionName = tool_call.function.name

const functionToCall = available_functions[functionName]

const functionArgs = JSON.parse(tool_call.function.arguments)

const functionResponse = await functionToCall(

functionArgs.query // JavaScript has no keyword arguments, so pass the value positionally

)

messages.push({

tool_call_id: tool_call.id,

role: "tool",

name: functionName,

content: JSON.stringify(functionResponse)

})

}

console.log(messages)

// Call the chatCompletion method with additional information from the custom API

const second_response = await groq.chat.completions.create({

model,

messages

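}).catch(async (err) => {

if (err instanceof Groq.APIError) {

console.log(err)

} else {

throw err;

}

// Return undefined rather than the error object, which has no `choices`

return undefined

});

// Optional chaining covers the case where the API call failed above

const respond = second_response?.choices?.[0]?.message?.content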

return respond

}

Notes

- We let the LLM know about its capabilities in the first message.

- We prepared the tools that consist of the JSON structure for this function. Feel free to adjust the details of the function key. Don't forget to list all the parameters needed by the custom API inside the parameters -> properties key.

- We call the chatCompletion method to decide whether we need to call a custom function or not. We let the AI decide this.

- If tool_calls is available, we need to call a function. We loop over the tool calls and look up each function by its name, passing the relevant arguments that the LLM already prepared for us in the initial response.

- We run each of the custom functions inside the loop.

- We send another chatCompletion request to Groq to generate the "actual response" based on the information we got from the custom API.
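The loop described in these notes can also be written as a small standalone helper. This is a sketch under a few assumptions: `dispatchToolCalls` is a hypothetical name, unknown function names and malformed arguments are reported back as error strings instead of throwing, and the stubbed `getSearchResult` below stands in for the real SerpApi call so the snippet runs without API keys.

```javascript
// Hypothetical helper: run every tool call and build the "tool" messages
async function dispatchToolCalls(toolCalls, availableFunctions) {
  const toolMessages = [];
  for (const toolCall of toolCalls) {
    const name = toolCall.function.name;
    const fn = availableFunctions[name];
    let content;
    try {
      if (typeof fn !== "function") {
        throw new Error(`Unknown function: ${name}`);
      }
      const args = JSON.parse(toolCall.function.arguments);
      const result = await fn(args.query);
      // JSON.stringify(undefined) is undefined, so fall back to null
      content = JSON.stringify(result ?? null);
    } catch (err) {
      // Report the problem to the model instead of crashing the request
      content = JSON.stringify({ error: err.message });
    }
    toolMessages.push({
      tool_call_id: toolCall.id,
      role: "tool",
      name,
      content,
    });
  }
  return toolMessages;
}

// Stub standing in for the real getSearchResult, for demonstration only
const stubFunctions = {
  getSearchResult: async (query) => ({ answer: `stubbed answer for: ${query}` }),
};

// Invented tool calls: one valid, one naming a function we never registered
const demoCalls = [
  { id: "call_1", function: { name: "getSearchResult", arguments: '{"query":"Tesla stock"}' } },
  { id: "call_2", function: { name: "doesNotExist", arguments: "{}" } },
];

const demoPromise = dispatchToolCalls(demoCalls, stubFunctions);
demoPromise.then((msgs) => console.log(msgs));
```

In OpenAI-compatible chat APIs, the assistant message that contains `tool_calls` is typically appended to `messages` before these tool messages; whether Groq requires this is worth checking against its documentation.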

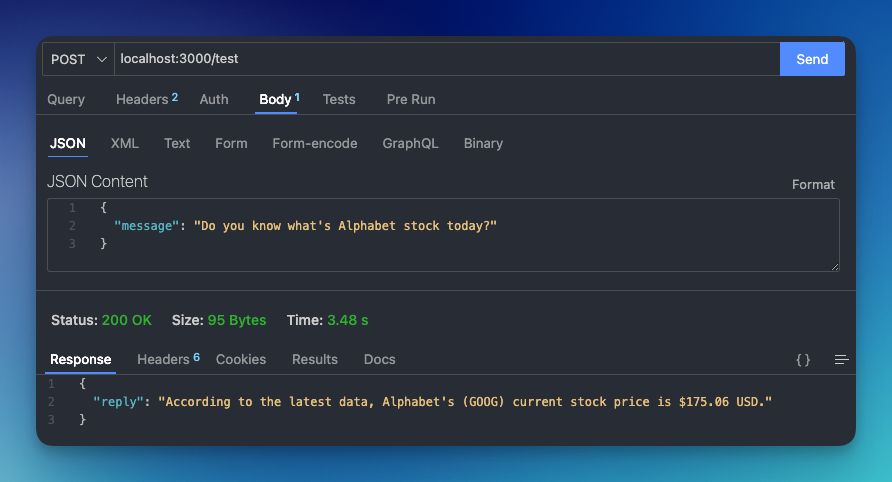

Here's the sample result:

Function calling comparison: Groq vs OpenAI API

After testing my program several times, I can conclude:

- Groq returns a much faster response.

- Groq can parse the relevant parameter from the human's natural language.

- Groq isn't very good at deciding whether it needs to call the custom API or just respond by itself. In my case, it always tries to call my custom function.

Fallback answer

As mentioned, Groq currently doesn't work very well when the user asks a general question that doesn't need a custom function. One way to prevent the error is to check whether the tool result is undefined; if it is, you can send a new, simple chat completion to Groq without the tools parameter.
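Here is a minimal sketch of that fallback, assuming the tool results have already been collected into an array. `createCompletion` is a hypothetical stand-in for `groq.chat.completions.create` so that the snippet runs without an API key, and `answerWithFallback` is likewise an illustrative name.

```javascript
// Hypothetical helper: if no tool produced data, retry as a plain chat
// completion (no `tools` parameter) using only the system/user messages.
async function answerWithFallback(createCompletion, model, baseMessages, toolMessages, toolResults) {
  const hasData = toolResults.some((r) => r !== undefined && r !== null);
  const messages = hasData ? [...baseMessages, ...toolMessages] : baseMessages;
  const completion = await createCompletion({ model, messages });
  return completion.choices[0]?.message?.content;
}

// Fake client for demonstration: reports how many messages it received
const fakeCreate = async ({ messages }) => ({
  choices: [{ message: { content: `answered using ${messages.length} messages` } }],
});

const base = [
  { role: "system", content: "You are a helpful assistant" },
  { role: "user", content: "Tell me a joke" },
];
const toolMsgs = [
  { role: "tool", tool_call_id: "call_1", name: "getSearchResult", content: "null" },
];

// The only tool result is undefined, so the tool message is dropped
const fallbackDemo = answerWithFallback(fakeCreate, "llama3-8b-8192", base, toolMsgs, [undefined]);
fallbackDemo.then((reply) => console.log(reply));
```

Passing the completion function in as a parameter keeps the decision logic testable on its own; in the real program you would pass a small wrapper around the Groq client instead of `fakeCreate`.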

That's it! Feel free to try it yourself and let me know the result!