Since the COVID-19 pandemic, more and more companies allow employees to work remotely. To avoid the complexity and risk of establishing legal entities in foreign countries, many of them use an "Employer of Record" (EOR) to onboard their remote employees.

If you are building an Employer of Record business, one of the fastest ways to grow is to generate a large volume of leads daily or weekly, filter them by your criteria, enrich the data, and send cold emails. How can you automate this process and make it production-ready for your business?

In this post, I'll guide you step by step. The most difficult part is scraping hundreds of businesses daily or weekly, which we will do with SerpApi. After that, you can use Excel and your favorite CRM tool to filter, enrich, and send cold emails.

Setting Up a SerpApi Account

SerpApi offers a free plan for newly created accounts. Head to the sign-up page to register an account and complete your first search with our interactive playground. When you want to do more searches with us, please visit the pricing page.

Once you are familiar with the results, you can call SerpApi's APIs from your own code using your API key.

Scrape your first 10 jobs

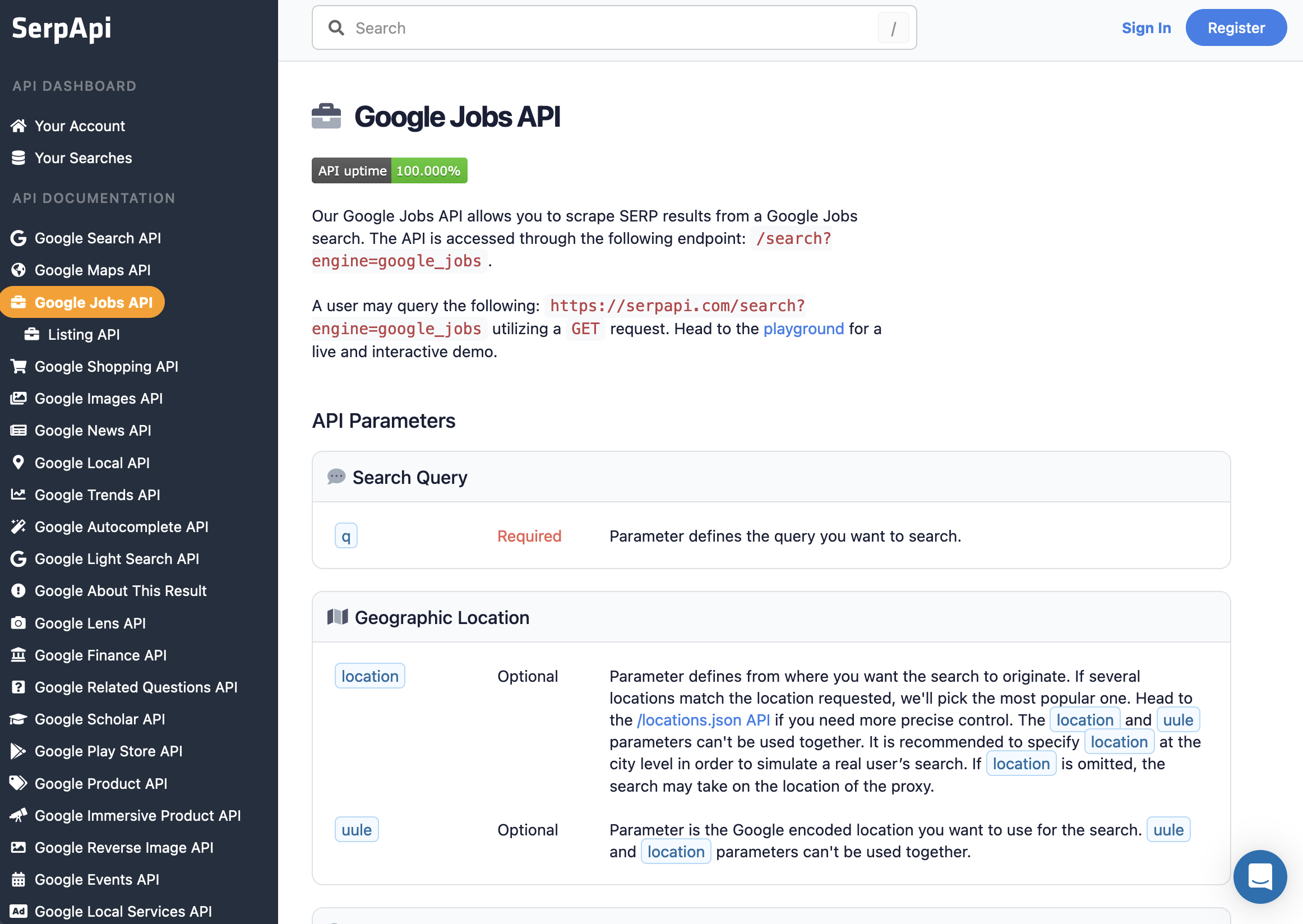

Let's walk through an example. Suppose you are targeting companies hiring a "Junior Python Developer". I'll show you how to scrape your first 10 "Junior Python Developer" jobs in New York, along with company info. The best way to do this is with the SerpApi Google Jobs API, so head to our Google Jobs API documentation first.

Next, install the SerpApi client library. The code below uses `serpapi.Client`, which comes from the `serpapi` package:

```shell
pip install serpapi
```

Then set up your SerpApi credentials and search parameters:

```python
import csv

import serpapi

params = {
    'api_key': 'your_api_key',  # your SerpApi API key
    'engine': 'google_jobs',    # SerpApi search engine
    'q': 'junior python developer',
    'location': 'New York, United States',
}
```

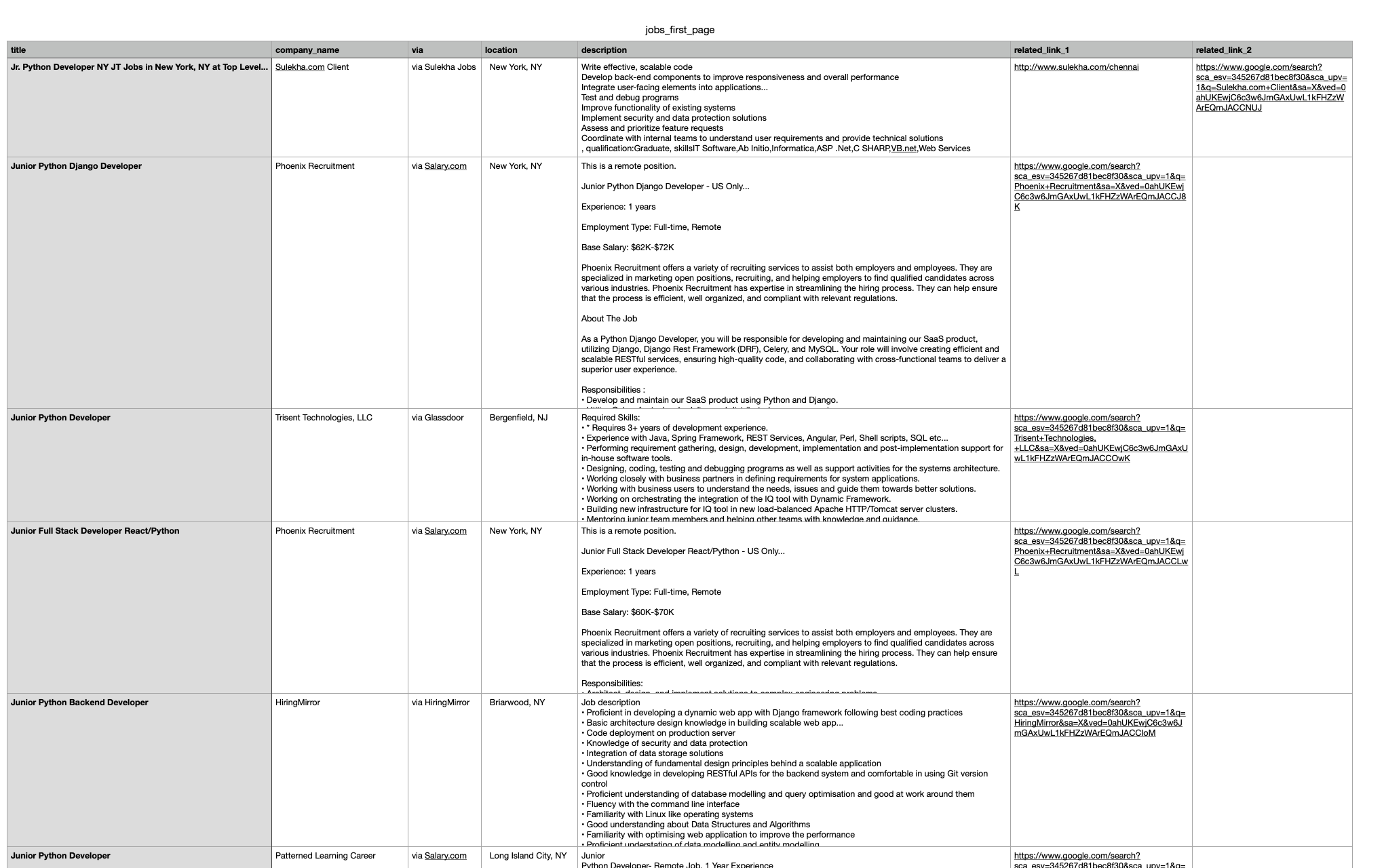

Extract the first 10 leads

```python
# Fetch the first page of Google Jobs results (about 10 jobs)
results = serpapi.Client().search(params).get("jobs_results", [])

header = ['title', 'company_name', 'via', 'location', 'description', 'related_link_1', 'related_link_2']

with open('jobs_first_page.csv', 'w', encoding='utf-8', newline='') as f:
    writer = csv.writer(f)
    writer.writerow(header)

    for item in results:
        values = [item.get('title'), item.get('company_name'), item.get('via'),
                  item.get('location'), item.get('description')]

        # Keep at most two related links; pad with '' so every row has 7 columns.
        # Not every job has 'related_links', so use .get() to avoid a KeyError.
        links = [link.get('link', '') for link in (item.get('related_links') or [])[:2]]
        values.extend(links + [''] * (2 - len(links)))

        writer.writerow(values)
```

Now you have the names of the companies that are hiring, along with each job's title, location, description, and related links. Can you scrape more pages to build a much larger list of leads for your sales team?

Sure, and we're not done yet. Saving you that time is why we wrote this blog post.

Scrape hundreds of jobs

The first question is how to find large numbers of hiring companies daily or weekly.

The SerpApi Google Jobs API lets you scrape more pages by passing the `start` parameter. For example, to scrape the second page, set `start` to 10:

```python
import serpapi

params = {
    'api_key': 'your_api_key',  # your SerpApi API key
    'engine': 'google_jobs',    # SerpApi search engine
    'q': 'junior python developer',
    'location': 'New York, United States',
    'start': 10,                # result offset: 10 = second page
}

second_page = serpapi.Client().search(params).get("jobs_results", [])
```

You can keep requesting pages for a single keyword, "Junior Python Developer", until Google returns no more results. In this example, we scrape at most 3 pages:

```python
import serpapi

client = serpapi.Client()
results = []

for start in [0, 10, 20]:  # first three pages, 10 jobs per page
    params = {
        'api_key': 'your_api_key',  # your SerpApi API key
        'engine': 'google_jobs',    # SerpApi search engine
        'q': 'junior python developer',
        'location': 'New York, United States',
        'start': start,
    }
    page = client.search(params).get("jobs_results", [])
    if not page:
        break  # Google returned no more results for this keyword
    results += page
```

You should now have more jobs. If you write `results` to a CSV file with the same code as in the first example, the file should contain up to 30 jobs (10 per page).
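To write the combined `results` list from several pages, you could wrap the CSV logic from the first example in a small function. This is a minimal sketch: `job_to_row` and `write_jobs_csv` are hypothetical helper names, not part of the SerpApi library, and the code only relies on the `jobs_results` fields already used above.

```python
import csv

# Column order matches the first-page example
HEADER = ['title', 'company_name', 'via', 'location', 'description',
          'related_link_1', 'related_link_2']


def job_to_row(item):
    """Flatten one Google Jobs result into a fixed 7-column CSV row."""
    row = [item.get(key) for key in HEADER[:5]]
    # Keep at most two related links and pad with '' so every row has 7 columns
    links = [link.get('link', '') for link in (item.get('related_links') or [])[:2]]
    return row + links + [''] * (2 - len(links))


def write_jobs_csv(jobs, path):
    """Hypothetical helper: write job dicts to a CSV file and return the row count."""
    with open(path, 'w', encoding='utf-8', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(HEADER)
        for item in jobs:
            writer.writerow(job_to_row(item))  # csv writes None as an empty cell
    return len(jobs)
```

For example, `write_jobs_csv(results, 'jobs_three_pages.csv')` writes every job collected by the pagination loop into one file.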

Does Google Jobs provide hundreds of pages of results with thousands of jobs?

If you scroll through the job results on Google, for example https://www.google.com/search?q=junior+Python+developer&ibp=htl;jobs, they stop after a few pages. So, how can we get more leads?

The answer lies in how companies title their jobs. Some companies call the role "Junior Python Developer", while others call it "Junior Python Engineer", "Junior Python Backend Developer", "Backend Python Junior", and so on.

Instead of scraping one keyword, we can scrape a list of keywords. You don't need to brainstorm all of them yourself; AI is well suited to this task. Ask ChatGPT and it will give you as many keywords as you want. Here are 10 examples from ChatGPT:

- Junior Python Developer

- Entry-Level Python Developer

- Python Developer Junior

- Junior Python Programmer

- Junior Python Software Developer

- Python Developer Entry-Level

- Python Programmer Junior

- Python Developer New Grad

- Python Developer Associate

- Junior Python Engineer
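To combine a keyword list like this with the `start` pagination shown earlier, you can loop over both. The sketch below is one possible structure, not an official SerpApi helper: `collect_jobs`, `search_fn`, and `max_pages` are hypothetical names, and it assumes 10 results per page, matching the `start` values used above. The search call is passed in as a function so the loop can be tested without an API key; in real use you would pass `serpapi.Client().search`.

```python
def collect_jobs(keywords, search_fn, api_key,
                 location='New York, United States', max_pages=3, page_size=10):
    """Hypothetical helper: scrape up to max_pages of Google Jobs results per keyword.

    search_fn takes a params dict and returns a dict-like response,
    e.g. serpapi.Client().search.
    """
    all_jobs = []
    for keyword in keywords:
        for page in range(max_pages):
            params = {
                'api_key': api_key,
                'engine': 'google_jobs',
                'q': keyword,
                'location': location,
                'start': page * page_size,  # 0, 10, 20, ...
            }
            jobs = search_fn(params).get('jobs_results', [])
            if not jobs:
                break  # no more results for this keyword; move on to the next one
            all_jobs.extend(jobs)
    return all_jobs
```

For example, `collect_jobs(keywords, serpapi.Client().search, 'your_api_key')` would run at most 30 searches for the 10 keywords above (3 pages each).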

Perfect. With these 10 keywords, each returning up to about 30 jobs, you now have around 300 jobs.

Scrape more keywords, with more pages for each keyword, and you can collect a large number of jobs and the companies that are hiring for them. However, turning these jobs into quality leads takes several more steps.

If you check these 300 jobs carefully, you will notice that:

- A company may post multiple jobs

- Many companies, such as Google, Facebook, and Microsoft, are ones you want to exclude from your sales calls

- Some jobs require 2+ years of experience

- You want to ignore jobs from fake job boards or your competitors

- The same job is duplicated across multiple sources

- ….
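Part 2 covers these steps in detail, but a rough first pass for duplicates and unwanted companies fits in a few lines of plain Python. This sketch rests on stated assumptions: two jobs count as duplicates when their title and `company_name` match after lower-casing and collapsing whitespace, and the exclusion lists (`excluded_companies`, `excluded_sources`, matched against the `via` field) are ones you maintain yourself. `clean_jobs` is a hypothetical helper, and it does not detect fake job boards or experience requirements.

```python
def normalize(text):
    """Lower-case and collapse whitespace so near-identical strings compare equal."""
    return ' '.join((text or '').lower().split())


def clean_jobs(jobs, excluded_companies=(), excluded_sources=()):
    """Hypothetical helper: drop duplicate postings and excluded companies/sources.

    Assumption: duplicates share the same normalized title and company_name;
    excluded_sources are matched as substrings of the normalized 'via' field.
    """
    companies = {normalize(c) for c in excluded_companies}
    sources = [normalize(s) for s in excluded_sources]
    seen = set()
    cleaned = []
    for job in jobs:
        company = normalize(job.get('company_name'))
        via = normalize(job.get('via'))
        key = (normalize(job.get('title')), company)
        if key in seen or company in companies:
            continue  # duplicate posting or a company you don't want to contact
        if any(source in via for source in sources):
            continue  # posted through a source you want to ignore
        seen.add(key)
        cleaned.append(job)
    return cleaned
```

Because a job is only marked as seen when it is kept, a duplicate posted through an allowed source still survives if its first copy came from an excluded one.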

We will cover these in Part 2 of this blog post. For now, try our APIs to collect your job listings first.

This example uses Python, but you can also use your favorite programming language, such as Ruby, Node.js, Java, or PHP.

If you have any questions, please feel free to contact me.
