Nowadays, almost everyone who has been online has used Google Search at least once. So it is natural to think that we know how to search for what we need.

But when it comes to working with SerpApi's Google Search API, you may assume that everything works just as it does when you search in your browser.

The truth is that this is not the case at all. When you search for a query in your favorite browser, Google already has certain data about your language preferences, your location, your past searches and probably more.

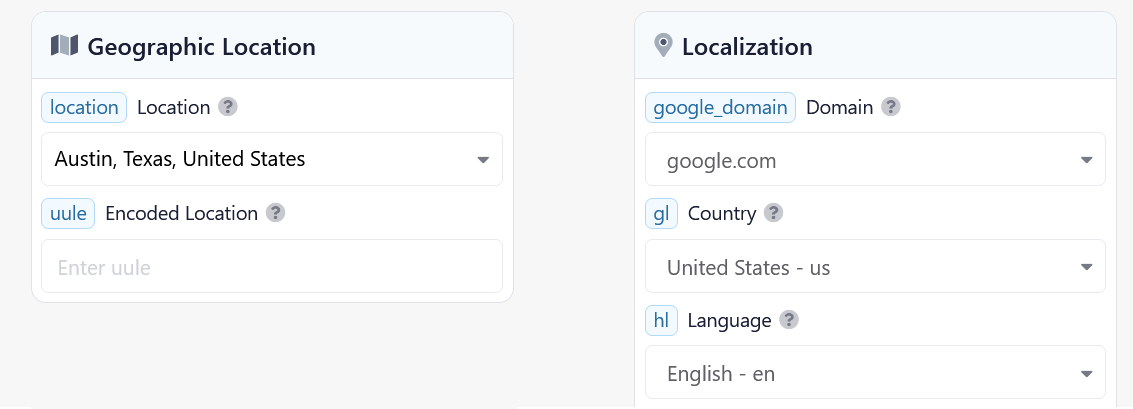

Location

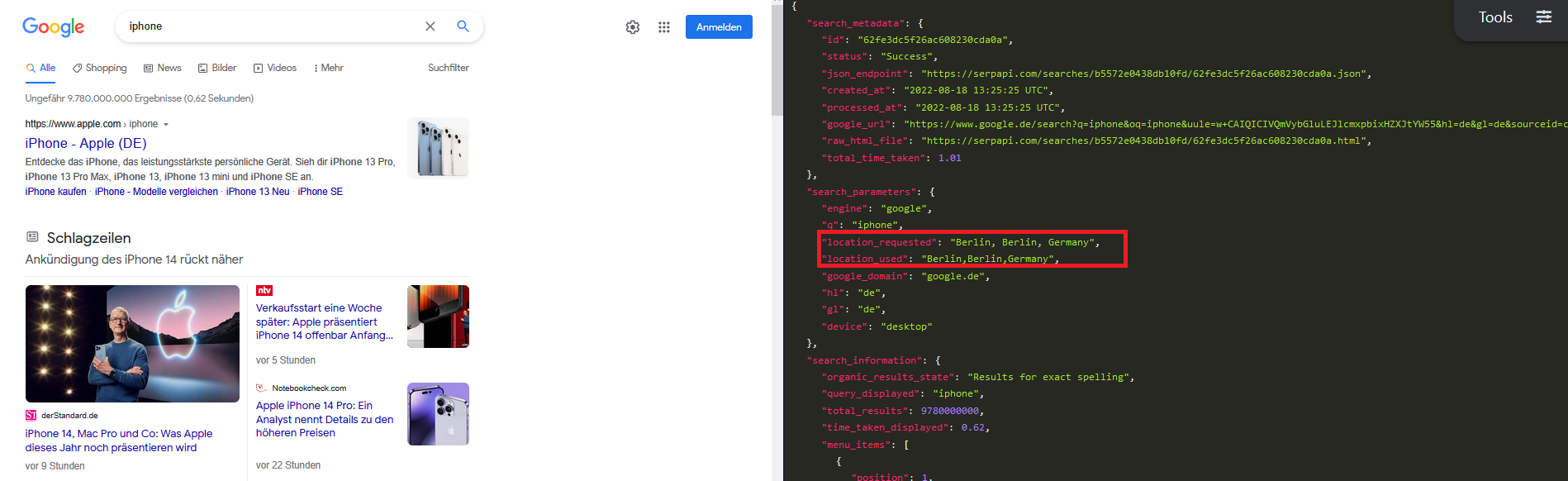

But when you search for a query with SerpApi, you have many parameters that you can deliberately set to simulate any search from any location in any country. If you decide not to set them, you may see anomalies in the results that you did not expect.

So, it is always good to have the location, gl, hl and google_domain parameters in your request.

Another pitfall that some of our users fall into when using the location parameter is that instead of using a local city name, they try to go big with a country or state name. But a user who searches on Google never connects from a whole country or state. They connect from a city, big or small.

So, the key to using SerpApi is to always keep in mind that you are simulating a single user's search.

Why is it important?

Because whatever you search for, Google aims to serve results that are relevant to the person searching. This is how they designed their system. This is why we see more and more local businesses, events and other organizations from around the searcher's city in the results.
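As a minimal sketch of a request that pins down all four parameters, the snippet below only builds the request URL and does not send it. The city, the query and the `YOUR_API_KEY` placeholder are illustrative assumptions, and `build_search_url` is a hypothetical helper name, not part of any SerpApi library.

```python
from urllib.parse import urlencode

# SerpApi's JSON search endpoint. The API key used below is a placeholder.
SERPAPI_ENDPOINT = "https://serpapi.com/search.json"


def build_search_url(query, location, gl, hl, google_domain, api_key):
    """Return a request URL that states location and language explicitly."""
    params = {
        "engine": "google",
        "q": query,
        "location": location,            # a city, not a whole country or state
        "gl": gl,                        # country code
        "hl": hl,                        # interface language
        "google_domain": google_domain,  # regional Google domain
        "api_key": api_key,
    }
    return SERPAPI_ENDPOINT + "?" + urlencode(params)


url = build_search_url(
    "coffee", "Austin, Texas, United States", "us", "en", "google.com", "YOUR_API_KEY"
)
print(url)
```

Keeping the four localization parameters together in one helper makes it harder to forget one of them when you build requests in bulk.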

Maximum Results Shown by Google Search and How to Get More

Every Google search (whether it is Search, Maps, Jobs or another service) returns only a small number of results despite the numbers shown on the page.

So when you search for any term, the maximum number of results that you will be able to scrape with the Google Search API won't be more than 400.

This means that if you add num=100 (which I highly recommend if you are interested in organic_results), you won't see a 4th page most of the time. Google never shows those millions of results to end users and probably never will.

If you are looking to collect more results than Google shows, you need to be a bit creative. Sometimes, adding just a single letter ("a") to your search query is enough to differentiate it without drifting away from the context that you are searching for.

Let's say that you are searching for the term "coffee". You have collected all the results but want or need more.

You can simply try searching for "coffee a" and check the results. If they look good enough, you can scrape all the results from there as well, which differentiates your search but keeps it in context. And there are more letters in the alphabet.

Even adding a conjunction, as in "coffee or", "coffee and", "coffee but", "coffee yet" or "coffee so", can help. Almost every block of text contains those conjunctions, and they don't change the context of your main keyword(s) in a serious way. So you will get a greater variety of organic_results in the end.
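The trick above can be sketched as follows. The helper names are hypothetical, and the sketch assumes each organic result is a dictionary with a `link` key, which is used to drop results that come back for more than one variant.

```python
import string

CONJUNCTIONS = ["or", "and", "but", "yet", "so"]


def query_variants(base_query, letters=string.ascii_lowercase, extra_words=CONJUNCTIONS):
    """Yield the base query, then the query with each letter or word appended."""
    yield base_query
    for suffix in list(letters) + list(extra_words):
        yield f"{base_query} {suffix}"


def dedupe_by_link(result_pages):
    """Merge several lists of organic results, keeping the first item per link."""
    seen = set()
    unique = []
    for results in result_pages:
        for item in results:
            link = item.get("link")
            if link and link not in seen:
                seen.add(link)
                unique.append(item)
    return unique


variants = list(query_variants("coffee"))
print(variants[:3])   # ['coffee', 'coffee a', 'coffee b']
print(len(variants))  # 32: the base query, 26 letters and 5 conjunctions
```

Since the variants stay in the same context, many of them will return overlapping results, so deduplicating before you store anything is worth the few extra lines.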

Advanced Search Operators

Not everybody is aware of the Advanced Search Operators that Google offers for more advanced users who know what they are doing.

As Google's documentation is such a mess across all the interrelated services, it is hard to find the official documentation for the Advanced Search Operators. Even if you search for Advanced Search Operators on Google, the first result is from the Ahrefs blog. It seems that Google not only depends on other websites' content to show results in its search engine but also expects internet users and others to document its services. What a shame!

Don't forget that when you search in Google, most of the terms have already been searched before. This allows Google to serve their results faster. But when you use a Search Operator, the amount of time required gets higher because your search complexity is higher.

On the other hand, your everyday internet user almost never uses Advanced Search Operators. This means that excessive use of Search Operators may trigger Google's spam detection systems.

Sure, SerpApi can avoid the detection and can scale your searches to the moon. But the search queries with the Advanced Search Operators will take more time than your regular searches. Advanced Search Operators can also degrade the health of the proxies used in those searches.

Long story short, if you need to use Advanced Search Operators in your query, you should know that SerpApi will take longer to respond to your call. If you are going to use Advanced Search Operators across hundreds of thousands of search queries, we recommend staying within the limits that SerpApi advises for your plan.

Google Search Engines

When you visit Google.com or your local Google domain, you get to search across 5 different engines. Although Google has more search engines, we are strictly talking about the engines which can be found on any Google Search results page. We also don't include the Maps option in this part of the article because Maps changes the whole URL structure and is basically a service of its own.

These are, listed by their tbm value (not including text-based search):

isch: Google Images API

lcl: Google Local API

vid: Google Videos API

nws: Google News API

shop: Google Shopping API

For most users, the most relevant services would be Google Textual(?) Search, Google Images, Google News and especially Google Shopping. Each of those services has different parameters, filters and pagination in place.

You may have noticed that I call regular Google Search Google Textual(?) Search. When Google first started, it mostly served text-based results, with images on a different tab. But nowadays, a Google search result includes YouTube and TikTok videos, answer boxes, commonly asked questions, related searches, the knowledge graph and even Premier League or NHL standings. But I digress.

Text Based Search

Google shows that it has 2,520,000,000 results for a mere "Coffee" search if your location is Austin, Texas. If you perform the same search from Frankfurt, Germany, Google will tell you that it actually has 2,620,000,000 results. I think the German language adds another 100 million results. But this doesn't mean that you will get to see and scrape them all, because Google only shows 350-400 results at most for almost all generic searches. If you have a more elaborate search term, you will get to see even fewer.

So at 10 organic results per page, you would need at least 35 requests per search query to scrape all the results. But you could also add the num=100 parameter to your search to get 100 organic results per page. Just like the advanced search operators that I mentioned before, the num=100 parameter can also wear down the proxies, as your everyday user never manually adds &num=100 to the end of the URL in their browser's address bar, search after search. So, if a proxy is used many times with the &num=100 parameter, its performance will degrade. Just be cautious with the &num=100 parameter and only use it when necessary.
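The arithmetic behind those request counts is a simple ceiling division. The small sketch below uses a hypothetical helper name and the 350-400 range quoted above.

```python
import math


def requests_needed(total_results, per_page):
    """Number of requests required to page through total_results."""
    return math.ceil(total_results / per_page)


print(requests_needed(350, 10))   # 35 requests at the default page size
print(requests_needed(400, 10))   # 40 requests at the upper end of the range
print(requests_needed(400, 100))  # 4 requests with num=100
```

In other words, num=100 cuts the number of requests by roughly a factor of ten, which is the trade-off to weigh against the proxy wear described above.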

To get the most realistic results with the Google Search API, one must be very careful with the location and localization parameters.

Every search that a real user performs includes location based on the IP, a Google domain according to the region, gl info for the country and hl info for the language.

What happens if you don't use location and gl parameters in your search? You will get to see a result page based on the proxy's IP address.

For example, when I search for "heyka" using only "q", "google_domain" and "hl", I get results from Illinois, and Google finds a doctor's office and related searches. But if I manually pick California as the location and set gl and hl, some of the organic_results differ from the first search.

If the search results can differ between two states in the United States, how about the rest of the world? Yeah, it changes a lot.

Be careful with the location and language parameters. Never go as wide as United States or Germany for your search location. Narrow it down to a state or city name, and if you can, a neighbourhood (if it exists) will get you the most realistic results.
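If you want to check this for your own queries, one way is to run the same search with two different location setups and compare the links. The sketch below assumes each response is a dictionary with an `organic_results` list whose items carry a `link` key. The two sample responses are made-up placeholders, not real search output.

```python
def organic_links(response):
    """Return the organic result links of one response, in ranking order."""
    return [r["link"] for r in response.get("organic_results", []) if "link" in r]


def compare_results(first, second):
    """Split the links of two responses into shared and unique ones."""
    a, b = organic_links(first), organic_links(second)
    set_a, set_b = set(a), set(b)
    return {
        "shared": [link for link in a if link in set_b],
        "only_in_first": [link for link in a if link not in set_b],
        "only_in_second": [link for link in b if link not in set_a],
    }


# Placeholder data standing in for two real responses.
without_location = {"organic_results": [{"link": "https://example.com/a"},
                                        {"link": "https://example.com/b"}]}
with_location = {"organic_results": [{"link": "https://example.com/b"},
                                     {"link": "https://example.com/c"}]}

diff = compare_results(without_location, with_location)
print(diff)
```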

Google Images API

The Google Images API is used for many different goals, among them enriching web pages with images on the fly and deep learning projects where visual identification is the end goal.

Deep learning projects especially require many labelled images to teach the algorithm how to recognize an object. These can be apple images, dog images, or human faces, whether to identify them or to create fake ones.

The maximum number of results for any search term in Google Images is rarely more than 600-700 images. To collect more, you can use the tips that I mentioned before in the text search section. I will quote them below anyway:

"If you are looking to collect more results than Google shows, you need to be a bit creative. Sometimes, adding just a single letter ("a") to your search query is enough to differentiate it without drifting away from the context that you are searching for.

Let's say that you are searching for the term "coffee". You have collected all the results but want or need more.

You can simply try searching for "coffee a" and check the results. If they look good enough, you can scrape all the results from there as well, which differentiates your search but keeps it in context. And there are more letters in the alphabet.

Even adding a conjunction, as in "coffee or", "coffee and", "coffee but", "coffee yet" or "coffee so", can help. Almost every block of text contains those conjunctions, and they don't change the context of your main keyword(s) in a serious way. So you will get a greater variety of images in the end."

Another difficulty that our users run into is trying to link thumbnails stored on SerpApi's servers directly from their app or web page. SerpApi has CORS rules in place and only accepts requests from one domain with one API key, which means that you can't link thumbnails directly from SerpApi's servers to your page or your app. You need to download them to your own storage server to show them to your users. Alternatively, you can try linking the original image URL directly from your web page or your app.

The only problem with the second scenario is that you won't be able to link certain images from websites which never share the direct link of the images that they host. Facebook images are an example of this problem.
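The first scenario, downloading thumbnails to your own storage, can be sketched like this. It assumes each image result is a dictionary with a `thumbnail` URL. The function names, the hashed file naming and the fallback `.jpg` extension are all illustrative choices, not anything SerpApi prescribes.

```python
import hashlib
from pathlib import Path
from urllib.parse import urlparse
from urllib.request import urlopen


def fetch_bytes(url):
    """Download a URL and return the raw response body."""
    with urlopen(url, timeout=30) as response:
        return response.read()


def save_thumbnails(images_results, dest_dir, fetch=fetch_bytes):
    """Store every thumbnail locally and return a {url: local_path} mapping."""
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    saved = {}
    for item in images_results:
        url = item.get("thumbnail")
        if not url:
            continue  # skip results that carry no thumbnail
        # Keep the extension from the URL when there is one (assumed fallback: .jpg).
        suffix = Path(urlparse(url).path).suffix or ".jpg"
        name = hashlib.sha256(url.encode("utf-8")).hexdigest()[:16] + suffix
        path = dest / name
        path.write_bytes(fetch(url))
        saved[url] = path
    return saved
```

You would call it as `save_thumbnails(response["images_results"], "thumbnails")` and then serve the files from your own domain. The `fetch` argument is there so the download step can be swapped out, for example to add retries or to test without network access.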

Google recently started showing filters which it calls chips. These chips help you refine your search queries in more specific ways.

For example, when you search for Coffee, you will get filters/chips like the ones in the image below:

Each of those chips is returned to the requester in our JSON response. If you want to use any of them, you can check the JSON response and grab the chips value for the filter that you want to use. You can check this example, perhaps.

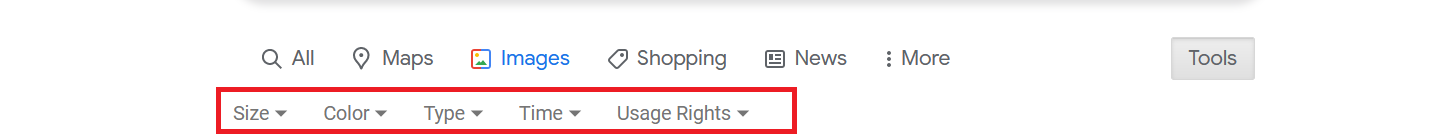
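Grabbing a chip from the JSON could look like the sketch below. Treat the field names as assumptions to verify against your own response: the sketch assumes the chips arrive in a `suggested_searches` list whose items have `name` and `chips` keys, and that the value is sent back through a `chips` request parameter. The sample response and its chip value are placeholders, not real output.

```python
def chips_by_name(response):
    """Map each suggested filter name to its chips value."""
    return {
        item["name"]: item["chips"]
        for item in response.get("suggested_searches", [])
        if "name" in item and "chips" in item
    }


# Placeholder response. A real chips value is an opaque string taken from the JSON.
sample_response = {
    "suggested_searches": [
        {"name": "cup", "chips": "PLACEHOLDER_CHIPS_VALUE_FOR_CUP"},
        {"name": "beans", "chips": "PLACEHOLDER_CHIPS_VALUE_FOR_BEANS"},
    ]
}

available = chips_by_name(sample_response)

# Reuse the original query and add the chosen chip to refine it.
refined_params = {"engine": "google", "tbm": "isch", "q": "Coffee", "chips": available["cup"]}
print(refined_params)
```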

The tbs parameters are the other filters (not chips) which you can use to filter images.

Below, you will find all the possible tbs parameters for the Google Images API:

Size:

Large = isz:l

Medium = isz:m

Icon = isz:i

You can click on each size to see how it is used as a parameter.

Color:

Black and White = ic:gray

Transparent = ic:trans

Red = ic:specific,isc:red

Orange = ic:specific,isc:orange

Yellow = ic:specific,isc:yellow

Green = ic:specific,isc:green

Teal = ic:specific,isc:teal

Blue = ic:specific,isc:blue

Purple = ic:specific,isc:purple

Pink = ic:specific,isc:pink

White = ic:specific,isc:white

Gray = ic:specific,isc:gray

Black = ic:specific,isc:black

Brown = ic:specific,isc:brown

You can check the first three examples to see how it is used with SerpApi.

Type:

Clipart = itp:clipart

Line Drawing = itp:lineart

GIF = itp:animated

Time:

Past 24 hours = qdr:d

Past Week = qdr:w

Past Month = qdr:m

Past Year = qdr:y

You can also try using qdr:h to see the images from the last hour and cdr:1,cd_min:7/1/2022,cd_max:7/15/2022 to see the images published between specific dates. These two unofficial tbs parameters are taken from Google Search and may or may not work for Google Image Search.

Usage Rights:

Creative Commons Licenses = il:cl

Commercial & other Licenses = il:ol
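To avoid typing these values by hand, you can keep them in lookup tables and join the ones you need. The sketch below assumes that image filters can be chained with a comma, the same way the Google News section chains its tbs values. All helper names are hypothetical.

```python
from datetime import date

SIZE = {"large": "isz:l", "medium": "isz:m", "icon": "isz:i"}
IMAGE_TYPE = {"clipart": "itp:clipart", "line_drawing": "itp:lineart", "gif": "itp:animated"}
TIME = {"day": "qdr:d", "week": "qdr:w", "month": "qdr:m", "year": "qdr:y"}
USAGE_RIGHTS = {"creative_commons": "il:cl", "commercial": "il:ol"}


def color_filter(name):
    """Return the tbs value for a color name such as 'red' or 'transparent'."""
    special = {"black_and_white": "ic:gray", "transparent": "ic:trans"}
    return special.get(name, f"ic:specific,isc:{name}")


def date_range_filter(start, end):
    """Build the unofficial cdr filter, using M/D/YYYY dates as in the text."""
    def fmt(d):
        return f"{d.month}/{d.day}/{d.year}"
    return f"cdr:1,cd_min:{fmt(start)},cd_max:{fmt(end)}"


def build_tbs(*filters):
    """Join the given filter values with commas, skipping empty ones."""
    return ",".join(f for f in filters if f)


tbs = build_tbs(SIZE["large"], color_filter("red"), IMAGE_TYPE["clipart"])
print(tbs)  # isz:l,ic:specific,isc:red,itp:clipart
print(date_range_filter(date(2022, 7, 1), date(2022, 7, 15)))
```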

Google News API

Google News can return a maximum of 100 results per request. News snippets are limited to what Google shows on its result pages. That's why, to scrape the full text of the news articles, you need to come up with your own solution. jusText and similar libraries may help with that.

When it comes to using thumbnails from Google News, you unfortunately can't use the SerpApi URLs of the images. Because of our CORS rules, you need to download the images to your own server to later present them to your users.

As for the tbs parameters, Google News uses the same Time parameters as Google Search and Google Images. The tbs parameters that are used only with Google News control sorting and duplicates. You don't need to do anything to sort the results by relevance, but if you want to sort the results by date, you need to add the tbs parameter sbd:1. Google also hides duplicates when showing the results. If you would like to see duplicate results, you can add another tbs parameter, nsd:1, which will show duplicates. To chain multiple tbs parameters, you only need to separate them with a comma (e.g. sbd:1,nsd:1 will sort the news by date and show the duplicates).

In the Time section of the filters, Google also shows an Archive option. You can use this filter by adding ar:1 as your tbs parameter. I am not exactly sure what Google means by this Archive option. But when you use it, you may get news ranging from just a few minutes ago back to 2014.
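A minimal sketch of putting the news filters together is shown below. `news_tbs` is a hypothetical helper, and the request parameters assume Google News is reached through the nws tbm value listed earlier.

```python
def news_tbs(time_filter=None, sort_by_date=False, show_duplicates=False):
    """Chain the Google News tbs values described above with commas."""
    parts = []
    if time_filter:
        parts.append(time_filter)  # e.g. "qdr:w" for the past week
    if sort_by_date:
        parts.append("sbd:1")      # sort by date instead of relevance
    if show_duplicates:
        parts.append("nsd:1")      # do not hide duplicate results
    return ",".join(parts)


params = {
    "engine": "google",
    "tbm": "nws",
    "q": "coffee",
    "tbs": news_tbs(sort_by_date=True, show_duplicates=True),
}
print(params["tbs"])  # sbd:1,nsd:1
```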

Google Shopping API

The Google Shopping API lets you search for any products sold in online marketplaces.

Category information is not available with the Google Shopping API. To collect the maximum number of results, you can use the num=100 parameter in your request. To paginate, you can add the start parameter to your query, set to the number of results already returned. For example, if your first request includes the num=100 parameter, you can use start=100 in your second request to get the second page. Or, you can take the serpapi_pagination > next key from the response and send a request to it to reach the second page, and so on.

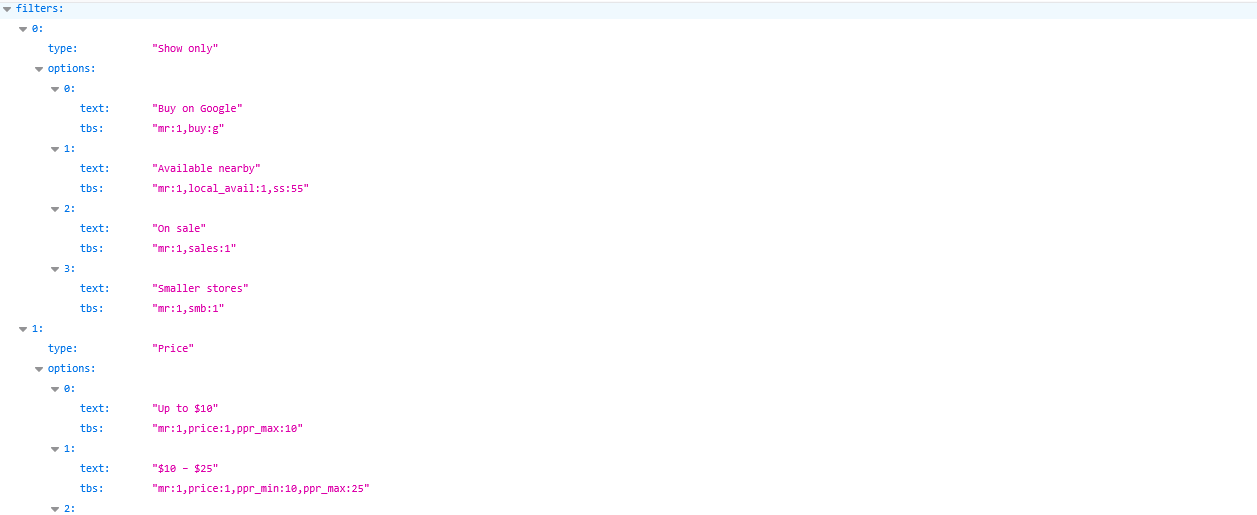
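The num/start pagination can be sketched as a generator that yields one parameter set per page. The helper name, the page count and the base parameters (which use the shop tbm value listed earlier) are illustrative assumptions.

```python
def page_params(base_params, num=100, pages=3):
    """Yield one parameter dict per page, advancing start by num each time."""
    for page in range(pages):
        params = dict(base_params)    # copy so the caller's dict stays untouched
        params["num"] = num
        params["start"] = page * num  # 0 for the first page, 100 for the second, ...
        yield params


base = {"engine": "google", "tbm": "shop", "q": "tea"}
offsets = [p["start"] for p in page_params(base)]
print(offsets)  # [0, 100, 200]
```

In practice you would stop as soon as a page comes back with fewer results than num, instead of fixing the number of pages up front.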

To customize your search, you can take advantage of the tbs filters. The tbs options are returned in the JSON response of your initial request for the product that you are searching for.

You can chain your tbs parameters with a comma.

You may have noticed that each tbs filter starts with mr:1. If you chain two different tbs filters, you need to remove the mr:1 from the second and following filters. For example, if you want to see only products sold by Google, you need to add mr:1,buy:g. Suppose you then want to add a price filter to see tea products between $3.50 and $6. Now you need to chain the mr:1,price:1,ppr_min:3.5,ppr_max:6 filter with mr:1,buy:g. If you combine them while keeping mr:1 at the beginning of each filter, like mr:1,buy:g,mr:1,price:1,ppr_min:3.5,ppr_max:6, it can get finicky. Removing the second mr:1, as in mr:1,buy:g,price:1,ppr_min:3.5,ppr_max:6, avoids any problematic or out-of-the-ordinary results.
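The rule about dropping the repeated mr:1 is easy to automate. The sketch below uses a hypothetical helper that keeps the first mr:1 and removes every later one while leaving all other tokens in their original order.

```python
def chain_shopping_tbs(*filters):
    """Chain Google Shopping tbs filters, keeping only the first mr:1."""
    tokens = []
    seen_mr1 = False
    for tbs_filter in filters:
        for token in tbs_filter.split(","):
            token = token.strip()
            if not token:
                continue
            if token == "mr:1":
                if seen_mr1:
                    continue  # drop the mr:1 of the second and following filters
                seen_mr1 = True
            tokens.append(token)
    return ",".join(tokens)


combined = chain_shopping_tbs("mr:1,buy:g", "mr:1,price:1,ppr_min:3.5,ppr_max:6")
print(combined)  # mr:1,buy:g,price:1,ppr_min:3.5,ppr_max:6
```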

As we mentioned at the beginning of the article, location is one of the most important arguments when collecting price data from the Google Shopping API. As prices differ across countries and even cities, using a precise location always helps with the simulation of a real user request.

In this sense, location, hl, gl, device and google_domain will give you the most realistic results, while leaving them out can certainly end up returning irrelevant results for your search.

Google is in fact one of the most complicated software products out there despite its ease of use. Browsers definitely help with what you are searching for 99% of the time, as they share most of the search parameters without you even knowing about it. Because of that, SerpApi can be hard for engineers to use when creating a search from scratch with all the correct parameters.

But fear not. Just as we at SerpApi love good support for the products that we use, we also love providing the best support for our products.

Feel free to reach out to SerpApi with any questions. We love delving into software problems and figuring out what may be wrong with your implementation.
