When you want to find product information from various sources, Google Shopping is one of the best websites to use.

In my previous blog post, we scraped all products from a Google Shopping search, where you can customize the keywords, price, and more.

The Google Shopping listing page only provides basic results, which may not be enough when you want to research prices, do some analysis, or need more information.

By scraping Google Product results, you can get more insightful information, such as prices from multiple providers, an overview of user reviews, full product descriptions, and the complete list of offers from other sellers.

Scraping product information from Google is challenging and time-consuming if you don't have experience with it. With SerpApi, you can scrape all of this information effortlessly. Here is how.

Setting up a SerpApi account

SerpApi offers a free plan for newly created accounts. Head to the sign-up page to register for an account and do your first search with our interactive playground. When you want to do more searches with us, please visit the pricing page.

Once you are familiar with the results, you can call SerpApi's APIs with your API key.
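Your API key is sent as the `api_key` parameter on every request. The following is a minimal sketch, assuming you keep the key in an environment variable named `SERPAPI_API_KEY` (a hypothetical name chosen for this example) rather than hard-coding it. It reads the key and builds a GET URL for SerpApi's `https://serpapi.com/search.json` endpoint using only the standard library. The helpers `get_api_key` and `build_search_url` are illustrative, not part of any SerpApi library.

```python
import os
from urllib.parse import urlencode

# SerpApi's JSON search endpoint
SEARCH_ENDPOINT = "https://serpapi.com/search.json"


def get_api_key(env_var: str = "SERPAPI_API_KEY") -> str:
    """Read the API key from an environment variable (hypothetical name)."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your SerpApi API key.")
    return key


def build_search_url(params: dict, api_key: str) -> str:
    """Return a GET URL whose query string holds the search parameters plus the API key."""
    query = dict(params, api_key=api_key)  # copy, so the caller's dict is not modified
    return f"{SEARCH_ENDPOINT}?{urlencode(query)}"
```

For example, `build_search_url({'engine': 'google_product', 'product_id': '9625072758641291354'}, get_api_key())` returns a URL you can fetch with any HTTP client. Keeping the key out of source code makes it harder to leak by accident, for instance when sharing a script.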

Scrape your first Google Product Result with SerpApi

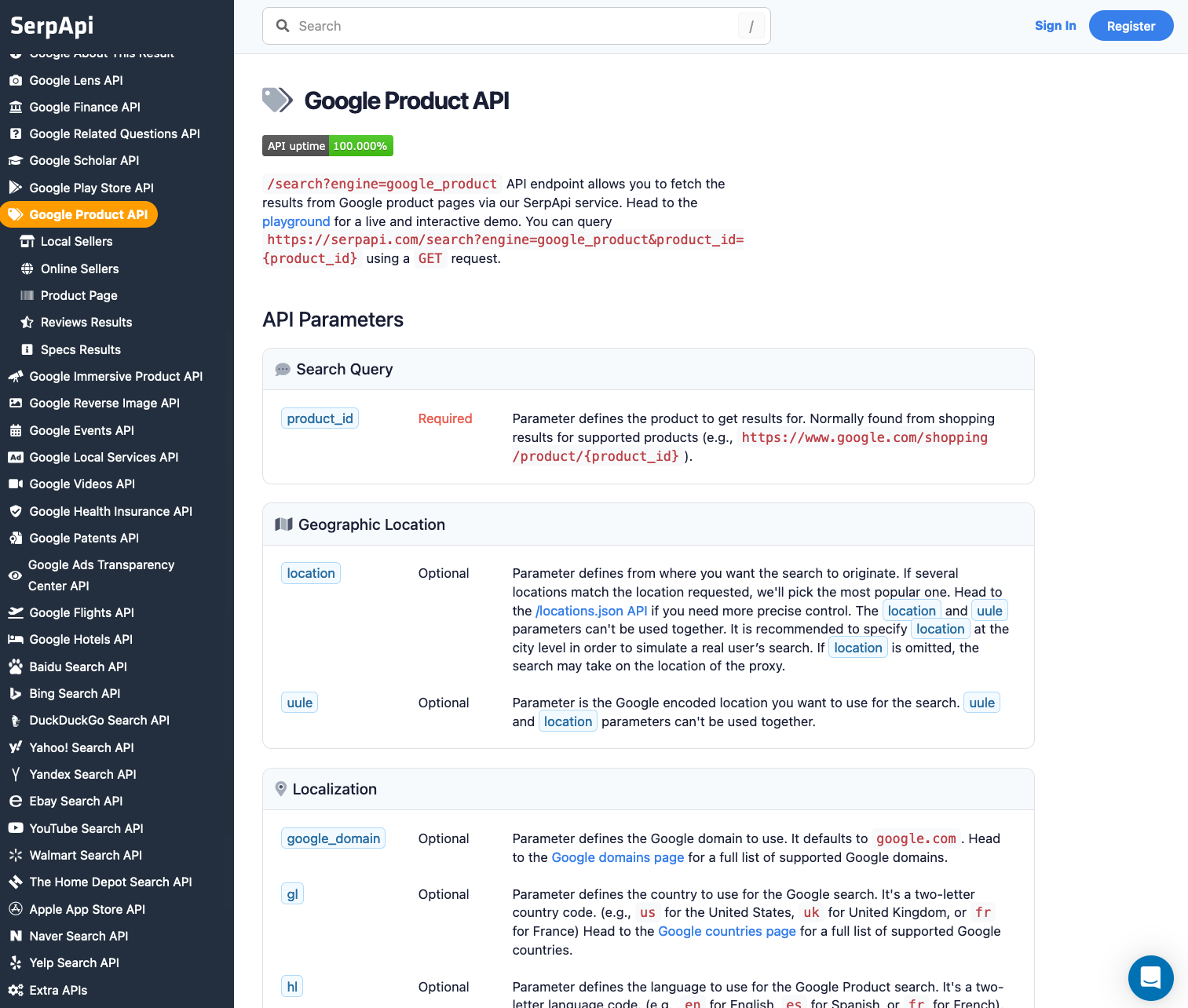

Head to the Google Product documentation on SerpApi for details.

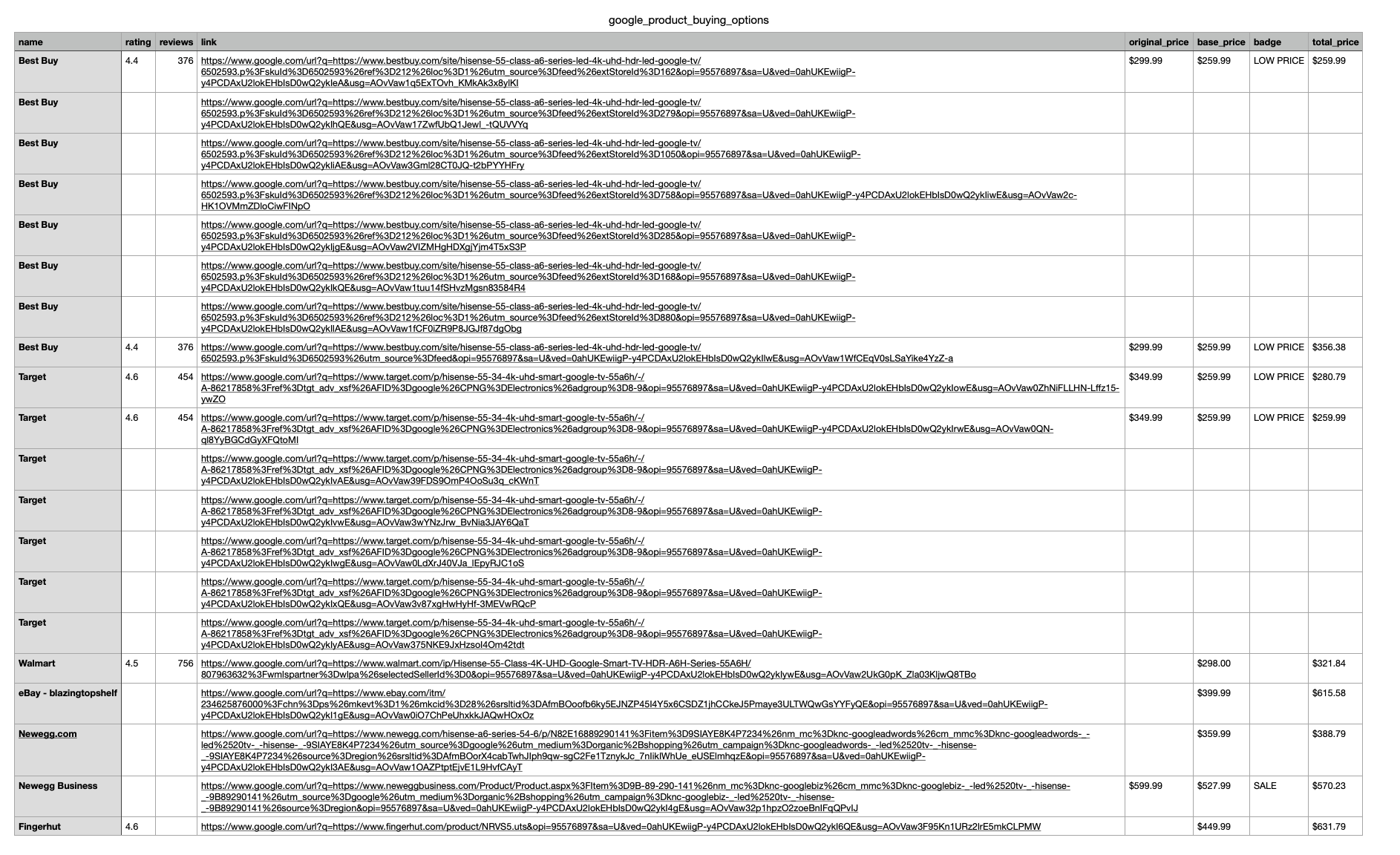

In this tutorial, we will compare buying options. Each seller result contains the fields name, rating, reviews, link, original_price, base_price, badge, and total_price.
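Comparing buying options means comparing prices, so the price fields need to become numbers first. This is a minimal sketch, assuming `base_price` and `total_price` arrive as US-style display strings like `"$1,299.99"` (check a real response; other locales, such as `1.299,99 €`, would need a different parser). `parse_price` and `cheapest_seller` are hypothetical helper names.

```python
import re
from typing import Optional


def parse_price(text: Optional[str]) -> Optional[float]:
    """Turn a price string such as "$1,299.99" into 1299.99; return None if no number is found."""
    if not text:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    return float(match.group().replace(",", ""))


def cheapest_seller(sellers: list, field: str = "total_price") -> Optional[dict]:
    """Return the seller with the lowest parsed price in `field`, skipping sellers without one."""
    priced = [(parse_price(seller.get(field)), seller) for seller in sellers]
    priced = [(price, seller) for price, seller in priced if price is not None]
    if not priced:
        return None
    return min(priced, key=lambda pair: pair[0])[1]
```

Once the seller list (`results`) has been retrieved, `cheapest_seller(results)` picks the lowest total price. Sellers without a parsable price are skipped rather than treated as free.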

First, you need to install the SerpApi client library.

```bash
pip install serpapi
```

Set up the SerpApi credentials and search parameters.

```python
import serpapi

params = {
    'api_key': 'YOUR_API_KEY',             # your SerpApi API key
    'engine': 'google_product',            # SerpApi search engine
    'product_id': '9625072758641291354',   # Google product ID to look up
}
```

To retrieve the online sellers for the given product ID, you can use the following code:

```python
client = serpapi.Client()

# The seller list lives under 'sellers_results' at the top level of the response
results = client.search(params)['sellers_results']['online_sellers']
```

You can store the Google Product JSON data in a database or export it to a CSV file.

```python
import csv

header = ['name', 'rating', 'reviews', 'link', 'original_price', 'base_price', 'badge', 'total_price']

with open('google_product_buying_options.csv', 'w', encoding='utf-8', newline='') as f:
    writer = csv.writer(f)
    writer.writerow(header)

    # One row per online seller; missing fields are written as empty cells
    for item in results:
        writer.writerow([item.get(column) for column in header])
```
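If you would rather store the sellers in a database than in a CSV file, a minimal sketch using Python's built-in `sqlite3` module could look like this. The table name `online_sellers` and the helper `save_sellers` are hypothetical choices for this example; the columns reuse the CSV header above.

```python
import sqlite3

COLUMNS = ['name', 'rating', 'reviews', 'link', 'original_price', 'base_price', 'badge', 'total_price']


def save_sellers(conn: sqlite3.Connection, sellers: list) -> int:
    """Write seller dictionaries to an `online_sellers` table and return the number of rows inserted."""
    # Missing fields become NULL in the database
    rows = [tuple(item.get(col) for col in COLUMNS) for item in sellers]
    columns = ', '.join(COLUMNS)
    placeholders = ', '.join('?' * len(COLUMNS))
    with conn:  # commits on success, rolls back the inserts if an exception is raised
        conn.execute(f'CREATE TABLE IF NOT EXISTS online_sellers ({columns})')
        conn.executemany(f'INSERT INTO online_sellers ({columns}) VALUES ({placeholders})', rows)
    return len(rows)
```

For example, open a connection with `conn = sqlite3.connect('google_product.db')`, call `save_sellers(conn, results)`, then `conn.close()`. Using parameter placeholders (`?`) instead of string formatting for the values keeps seller names with quotes or other special characters from breaking the SQL.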

This example uses Python, but you can also use your favorite programming language, such as Ruby, Node.js, Java, or PHP.

If you have any questions, please feel free to contact me.
