Bing search results contain a wealth of data that can be invaluable to developers, businesses, and academic researchers.

SerpApi provides APIs for scraping results pages from all of the largest search engines, including Bing. There are libraries supporting several of the most popular programming languages, including JavaScript, Python, Ruby, and PHP.

In this beginner-friendly tutorial, we will cover how to scrape Bing search results pages with Node.js, using SerpApi's JavaScript library.

To learn how to scrape Bing search results yourself, without using SerpApi, you can click here.

Click here for a tutorial using Ruby.

We will also cover how to paginate and get all of the available results for a Bing search.

Prerequisites

You will need to be somewhat familiar with JavaScript.

You will need to have Node.js and npm installed on your system. This tutorial should work with Node.js 7 and newer. If you don't already have Node.js and npm installed, you can visit the following link for help with this process:

https://docs.npmjs.com/downloading-and-installing-node-js-and-npm

You will also need to sign up for a free SerpApi account at https://serpapi.com/users/sign_up.

Preparation

First, create a project directory, move into it, and create a package.json file:

```bash
mkdir bing-scraper-nodejs
cd bing-scraper-nodejs
npm init
```

npm will walk you through the process of creating a package.json file. We don't need to make any changes to package.json in this case, because we will be using CommonJS syntax (require()), which is Node.js's default module system.

Next, we need to install two dependencies. For this tutorial, we will use the SerpApi JavaScript library to get search results, and dotenv to handle our API key.

```bash
npm install dotenv serpapi
```

If you haven’t already signed up for a free SerpApi account go ahead and do that now by visiting https://serpapi.com/users/sign_up and completing the signup process.

Getting Started

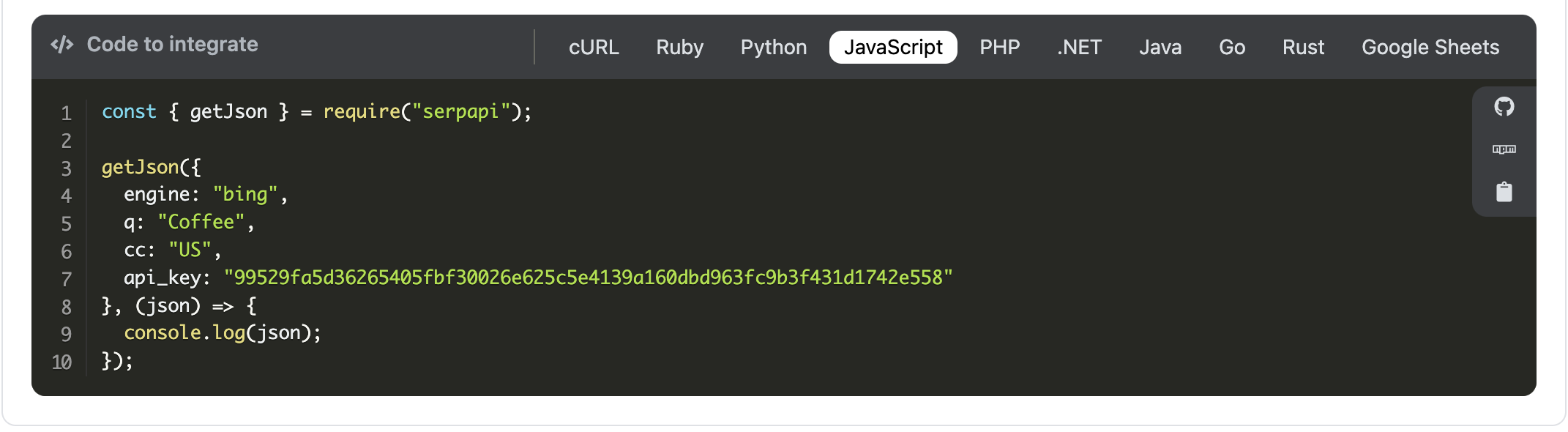

SerpApi provides starter code for all APIs, with examples in popular programming languages. You can find Node.js starter code not only for the Bing Search API but for any of SerpApi's APIs by visiting the documentation pages and selecting JavaScript in the "Code to Integrate" window.

If you are signed in to your account, you will notice that your API key has already been added to the parameters.

We can copy the example code using the clipboard icon to get started.

Then create a file called index.js, open it in your favorite IDE or editor, and paste the code.

You can run it by entering the command node index.js. You should see the JSON results printed in your terminal.

Protecting Your API Key

If you push your code to a public GitHub repository, you need to be careful not to expose your API key. A common way to handle this in Node.js is to store the API key as an environment variable in a .env file and use the dotenv module to load it into your application. You can then add .env to your .gitignore so the file won't be pushed to GitHub.

Create a .env file in your project directory:

```bash
touch .env
```

Open the .env file and add the API key as an environment variable:

```
SERPAPI_API_KEY="your-api-key-here"
```

Then we can use the dotenv module to load it in index.js. Underneath const { getJson } = require("serpapi"); add the following lines:

```javascript
require('dotenv').config();
console.log(process.env);
```

Then run your application with node index.js to make sure your API key is loaded successfully. Comment out the console.log() call once you've confirmed this, since we don't want to print our environment variables every time we run the code.

The SerpApi library also includes a config object where you can store your API key so that you don't have to add it as a parameter in each request. To use config we need to add it to the import statement:

```javascript
const { getJson, config } = require("serpapi");
```

We can then load the API key with dotenv and store it in config:

```javascript
const { getJson, config } = require("serpapi");
require('dotenv').config();
// console.log(process.env);
config.api_key = process.env.SERPAPI_API_KEY;
```

We can then remove api_key from the parameter object in the getJson() call. Your full code should now look like this:

```javascript
const { getJson, config } = require("serpapi");
require('dotenv').config();
// console.log(process.env);

config.api_key = process.env.SERPAPI_API_KEY;

getJson({
  engine: "bing",
  q: "Coffee",
  cc: "US",
}, (json) => {
  console.log(json);
});
```

If you plan to push your code to a public GitHub repo, make sure you include .env in your .gitignore file.
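dotenv doesn't throw if it can't find a .env file. In that case, process.env.SERPAPI_API_KEY is simply undefined, and the problem only shows up later, when a search is attempted. Below is a minimal sketch of a fail-fast check. It assumes the variable name used above. requireApiKey is a hypothetical helper, not part of dotenv or the SerpApi library.

```javascript
// Hypothetical helper (not part of dotenv or the serpapi package): returns the
// SerpApi key from an environment object, or throws a clear error if it's missing.
function requireApiKey(env) {
  const key = env.SERPAPI_API_KEY;
  if (typeof key !== "string" || key.trim() === "") {
    throw new Error("SERPAPI_API_KEY is not set; check that .env exists and dotenv is loaded.");
  }
  return key;
}

// Usage in index.js, after require('dotenv').config():
// config.api_key = requireApiKey(process.env);
```

This guard reports a problem without ever printing the key itself, unlike logging all of process.env.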

Other Parameters

The starter code includes the basic parameters needed to perform a search. However, the Bing Search API supports some optional parameters that can help you fine-tune your results. Visit the documentation page to learn about them all.

Geographic Location

Location can be an important factor if you are using the Bing Search API to track SEO rankings, or want to emulate the search results you see when using Bing in your browser.

The Bing Search API supports the lat and lon parameters if you want to search for results from a point of origin as defined by GPS coordinates.

However, in this example we will use the location parameter for simplicity. To add it, include it in the parameter object. It accepts a string, which can include the name of a city, state, and/or country:

```javascript
getJson({
  engine: "bing",
  q: "Coffee",
  cc: "US",
  location: "Austin, Texas",
}, (json) => {
  console.log(json);
});
```

You can read more about location parameters, and how they affect your searches, here:

Pagination

Bing returns a limited number of results per page, and a single request to SerpApi returns only the first page of results. To get more results, we need to use the pagination parameters supported by SerpApi.

We can use the count parameter to increase the number of results per page; its maximum value is 50. However, Bing does not always return as many results per page as count requests. Later pages seem to contain the number of results specified, but the first page may show only 35 results or so, even with count=50.

To get as many results as possible, we set count=50:

```javascript
getJson({
  engine: "bing",
  q: "Coffee",
  cc: "US",
  location: "Austin, Texas",
  count: 50,
}, (json) => {
  console.log(json);
});
```

We can use the first parameter to paginate. It is an offset, similar to Google's start parameter, except that first begins at 1 whereas start begins at 0.

So, for example, if 35 results were returned in response to the initial request, we can send another request with first=36:

```javascript
getJson({
  engine: "bing",
  q: "Coffee",
  cc: "US",
  location: "Austin, Texas",
  count: 50,
  first: 36,
}, (json) => {
  console.log(json);
});
```
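The arithmetic behind first=36 generalizes. Because first is a 1-based offset, the next offset is the current offset plus the number of results the current page actually returned. Below is a minimal sketch; nextFirst is a hypothetical helper name.

```javascript
// Hypothetical helper: compute the 1-based `first` offset for the next page.
// currentFirst: the `first` value used for the current request (1 for page one).
// resultsOnPage: how many organic results the current page actually returned.
function nextFirst(currentFirst, resultsOnPage) {
  return currentFirst + resultsOnPage;
}

// Page one started at first=1 and returned 35 results, so page two starts at 36.
console.log(nextFirst(1, 35)); // 36
// If page two then returned 50 results, page three would start at 86.
console.log(nextFirst(36, 50)); // 86
```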

To send multiple requests in a loop, we need to check each response for results so that we know when to exit the loop. Since we can't check a response until we receive it, we will use the await keyword. In CommonJS, await can only be used inside an async function, so we need to put our code inside one.

Typically, we want to increment first by the number of results on each page with each pass of the loop, in order to get all available results. However, the number of results Bing returns per page may vary. To do this with count=50, we would need to check how many results each page returned and add that number to the current value of first. For example, if the first request (first=1) returned 35 results, the next request would use first=36.

Let's simplify for now by setting count=30, and incrementing first by 30 with each pass.

Instead of hardcoding the values for the search, let's also accept an object called "params" as a parameter in the function. You can comment out the call to getJson() we added earlier, and add the following code instead:

```javascript
async function search(params){
  // get the first page
  let page = await getJson(params);
  let resultsPresent = true;

  // keep searching while there are results
  while(resultsPresent){
    // increment first by 30 to paginate
    params.first += 30;
    page = await getJson(params);

    if(!page || !page.organic_results){
      resultsPresent = false;
    }else{
      /* Print the current pagination offset and the title of the top
         result on each page */
      console.log("Result #" + page.search_parameters.first);
      console.log(page.organic_results[0].title);
    }
  }
}
```

Then declare a search_parameters object and call the search() function, passing in search_parameters:

```javascript
const search_parameters = {
  engine: "bing",
  q: "Coffee",
  cc: "US",
  location: "Austin, Texas",
  mkt: "en-US",
  count: 30, // matches the fixed step of 30 used in search()
  first: 1
};

search(search_parameters);
```

Open your terminal window again and run your code with node index.js to make sure everything is working correctly.

To reduce the number of calls required to paginate through all of the results, we could set count=50 and check the number of results on each page, so that we know how much to increment first.
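Here is one way the count=50 approach just described could look. It's a sketch, not SerpApi's recommended method. The loop advances first by organic_results.length instead of a fixed step, and it builds a new params object for each request instead of mutating the caller's. searchByOffset and fetchPage are hypothetical names. fetchPage lets the loop run offline; in index.js you would pass getJson, which the tutorial already awaits. The maxPages cap is an added safeguard (an assumption, not a SerpApi parameter) so a bug can't send requests indefinitely.

```javascript
// Sketch: paginate by advancing `first` by the number of results each page returned.
// fetchPage is any function that takes a params object and returns a Promise that
// resolves to a SerpApi-style JSON response (for example, serpapi's getJson).
async function searchByOffset(fetchPage, params, maxPages) {
  let first = Number(params.first) || 1;
  const titles = [];
  for (let pageCount = 0; pageCount < maxPages; pageCount++) {
    // Build a fresh object per request so the caller's params are never modified.
    const page = await fetchPage(Object.assign({}, params, { first: first }));
    const results = page && page.organic_results;
    // Stop when a page comes back without organic results.
    if (!results || results.length === 0) break;
    results.forEach((result) => titles.push(result.title));
    // `first` is 1-based, so the next page starts right after the last result received.
    first += results.length;
  }
  return titles;
}

// Offline usage with a fake fetchPage (invented data): page one returns 35
// results, page two returns 50, and page three returns none.
const fakePageSizes = { 1: 35, 36: 50 };
function fakeFetch(request) {
  const size = fakePageSizes[request.first] || 0;
  const organic_results = [];
  for (let i = 0; i < size; i++) {
    organic_results.push({ title: "Result " + (request.first + i) });
  }
  return Promise.resolve({ organic_results: organic_results });
}

searchByOffset(fakeFetch, { engine: "bing", q: "Coffee", count: 50, first: 1 }, 10)
  .then((titles) => console.log(titles.length)); // 85

// With SerpApi: searchByOffset(getJson, search_parameters, 10)
```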

However, there is a cleaner way to paginate.

SerpApi Pagination

SerpApi provides a serpapi_pagination key for engines that support pagination. With Bing, as with most other engines, we can use serpapi_pagination.next to get the URL of the next page.

We can use the url and querystring node modules to help us parse the parameters from the serpapi_pagination.next URL.

We need to import these modules, so add the following require statements at the top of index.js:

```javascript
const url = require("url");
const qs = require("querystring");
```

The syntax to parse the pagination URL with these modules looks like this:

```javascript
const nextUrl = url.parse(page.serpapi_pagination.next);
const params = qs.parse(nextUrl.query);
```

We can simply replace params.first += 30; with the lines above. Now we can set count to 50 and get the maximum number of results for each page.
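Recent Node.js documentation describes url.parse() and the querystring module as legacy APIs. If you prefer the WHATWG URL class, which the url module also exports, the same extraction could look like the sketch below.

nextPageParams is a hypothetical helper. It returns null when serpapi_pagination.next is absent, on the assumption that a missing next link marks the last page; confirm this against real responses. The example URL is made up for illustration.

```javascript
// The WHATWG URL class is exported by the built-in url module
// (and is also a global in newer Node.js versions).
const { URL } = require("url");

// Hypothetical helper: turn page.serpapi_pagination.next into a plain params
// object for getJson. Returns null if the response has no next-page URL.
function nextPageParams(page) {
  const next = page && page.serpapi_pagination && page.serpapi_pagination.next;
  if (!next) return null;
  const params = {};
  // Copy each query-string pair; values come back as decoded strings, e.g. first: "36".
  new URL(next).searchParams.forEach((value, key) => {
    params[key] = value;
  });
  return params;
}

// Invented example response, for illustration only.
const example = {
  serpapi_pagination: {
    next: "https://serpapi.com/search.json?engine=bing&q=Coffee&location=Austin%2C+Texas&first=36",
  },
};
// Logs the decoded parameters, including location: 'Austin, Texas' and first: '36'.
console.log(nextPageParams(example));
```

Like qs.parse(), searchParams decodes "+" as a space and "%2C" as a comma, so location comes back as "Austin, Texas".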

Here is the full code:

```javascript
const { getJson, config } = require("serpapi");
const url = require("url");
const qs = require("querystring");
require('dotenv').config();
// console.log(process.env);

config.api_key = process.env.SERPAPI_API_KEY;
config.timeout = 60000;

const search_parameters = {
  engine: "bing",
  q: "Coffee",
  cc: "US",
  location: "Austin, Texas",
  mkt: "en-US",
  count: 50,
  first: 1
};

async function search(params){
  // get the first page
  let page = await getJson(params);

  // keep searching while the current page links to a next page
  while(page && page.serpapi_pagination && page.serpapi_pagination.next){
    const nextUrl = url.parse(page.serpapi_pagination.next);
    // Extract the request parameters
    const params = qs.parse(nextUrl.query);
    // Get the next page
    page = await getJson(params);
    // Stop if the next page has no organic results
    if(!page || !page.organic_results || page.organic_results.length === 0){
      break;
    }
    // Print the current pagination offset and the title of the top result on each page
    console.log("Result #" + page.search_parameters.first);
    console.log(page.organic_results[0].title);
  }
}

search(search_parameters).catch(console.error);
```
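The loop above prints one title per page. If you want to keep every result instead, one variation is to accumulate organic_results in an array and stop when serpapi_pagination.next is missing. This is a sketch under a few assumptions:

- collectAllResults and fetchPage are hypothetical names.
- fetchPage stands in for getJson so the code can be tried offline.
- The maxPages cap is an added safeguard, since each page is a separate API request.
- The fake responses are invented.

It uses the WHATWG URL class rather than url.parse(); either works for extracting the query parameters.

```javascript
const { URL } = require("url");

// Convert a serpapi_pagination.next URL into a plain params object.
function paramsFromUrl(nextUrl) {
  const params = {};
  new URL(nextUrl).searchParams.forEach((value, key) => {
    params[key] = value;
  });
  return params;
}

// Sketch: follow serpapi_pagination.next until it is missing, collecting every
// organic result. fetchPage takes a params object and returns a Promise of the
// JSON response; in index.js you could pass getJson. maxPages caps the requests.
async function collectAllResults(fetchPage, params, maxPages) {
  const allResults = [];
  let request = params;
  for (let pageCount = 0; request && pageCount < maxPages; pageCount++) {
    const page = await fetchPage(request);
    const results = (page && page.organic_results) || [];
    if (results.length === 0) break;
    results.forEach((result) => allResults.push(result));
    const next = page.serpapi_pagination && page.serpapi_pagination.next;
    // No next link means this was the last page.
    request = next ? paramsFromUrl(next) : null;
  }
  return allResults;
}

// Offline usage with invented responses: page one links to page two,
// and page two has no next link.
const fakeResponses = {
  "1": {
    organic_results: [{ title: "A" }, { title: "B" }],
    serpapi_pagination: {
      next: "https://serpapi.com/search.json?engine=bing&q=Coffee&first=3",
    },
  },
  "3": { organic_results: [{ title: "C" }] },
};
const fakeFetch = (request) => Promise.resolve(fakeResponses[String(request.first)] || {});

collectAllResults(fakeFetch, { engine: "bing", q: "Coffee", first: 1 }, 10)
  .then((results) => console.log(results.map((result) => result.title))); // [ 'A', 'B', 'C' ]

// With SerpApi: collectAllResults(getJson, search_parameters, 10).then(...)
```

Returning the results instead of printing them leaves the choice of what to do with them (saving to a file, filtering, counting) to the caller.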

Conclusion

We've seen how to scrape Bing Search Results using Node.js with SerpApi, and paginate through all of the available results.

I hope you found the tutorial informative and easy to follow. If you have any questions, feel free to contact me at ryan@serpapi.com.

Links

SerpApi:

- Bing Search API Documentation

- SerpApi Javascript Integration

- SerpApi Playground

- ES6 Pagination Example (for Node.js 14+)

- Scrape Bing with Ruby
