A common question we get asked is: "how many search credits will it cost to do x" (or something along those lines), so I thought it would be helpful to explain how I calculate these things when asked.

Often the customer is understandably preoccupied with getting as many results as possible for the fewest search credits - so we'll keep that as the central focus for the rest of the article.

The easy answer: count clicks.

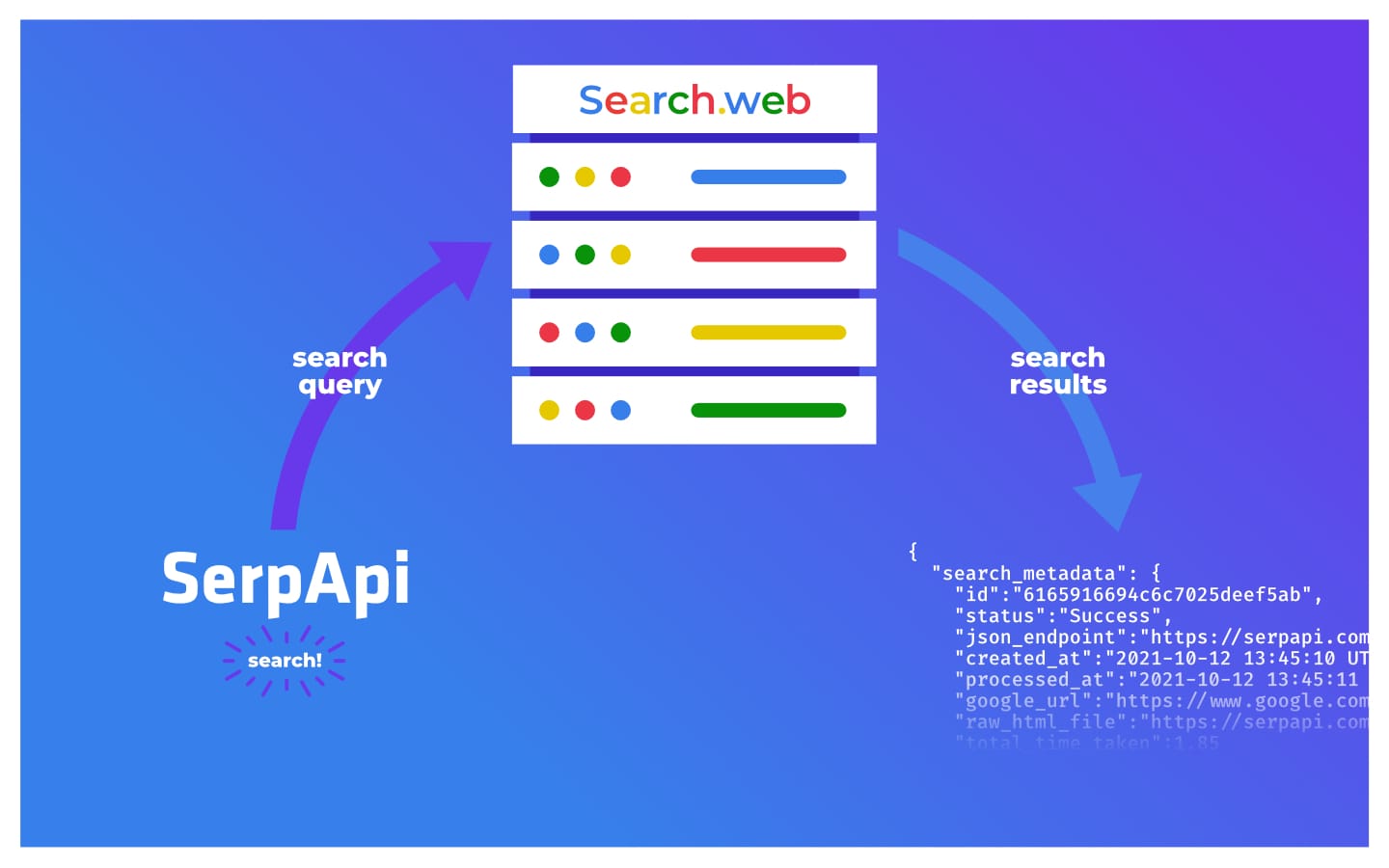

Our APIs can be thought of as simple layers via which our users interact with Google, Bing, and a whole array of other engines. So when trying to understand which interactions are going to cost you money, I find it best to start at the source:

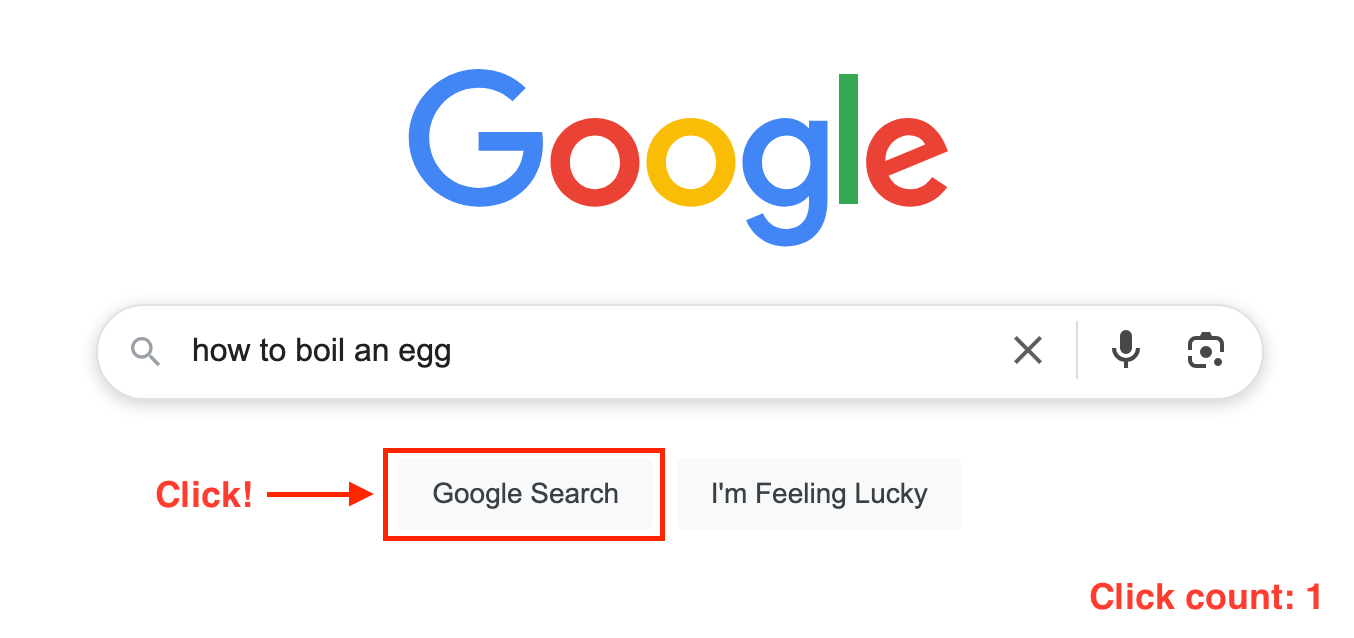

If we type a search query into the search bar, and click on 'Google Search', the browser will take us to a results page:

For this particular search, Google returned roughly 10 results on the first page.

To get here, it took us one click.

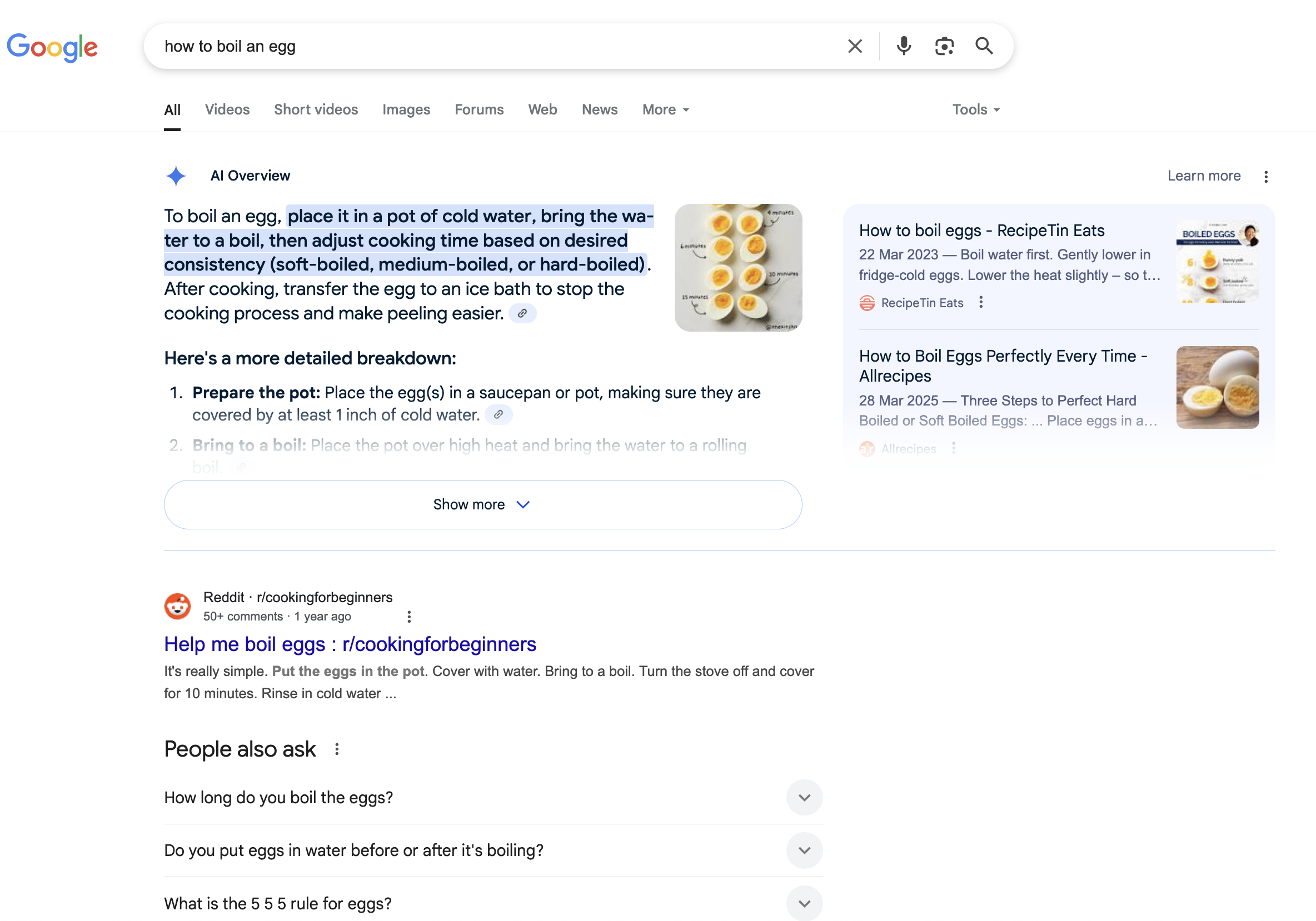

To see more results, I have to visit another page of the search. So I need to interact with the pagination controls Google provides at the bottom of the page:

To make the two requests to get 20 results, it took two clicks.

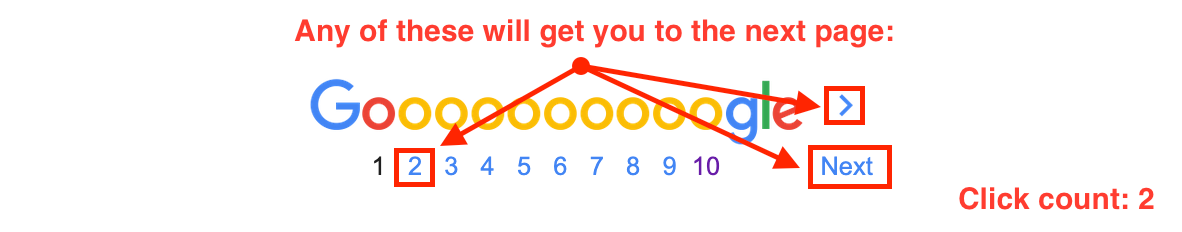

When you click on a link in your browser, you're actually initiating a request, which in turn will result in a response.

Web developers will know this as the "request/response cycle", which you can see in the following illustration:

In the previous example, it means we would have gone through the above process twice:

Two clicks = two request/response cycles.

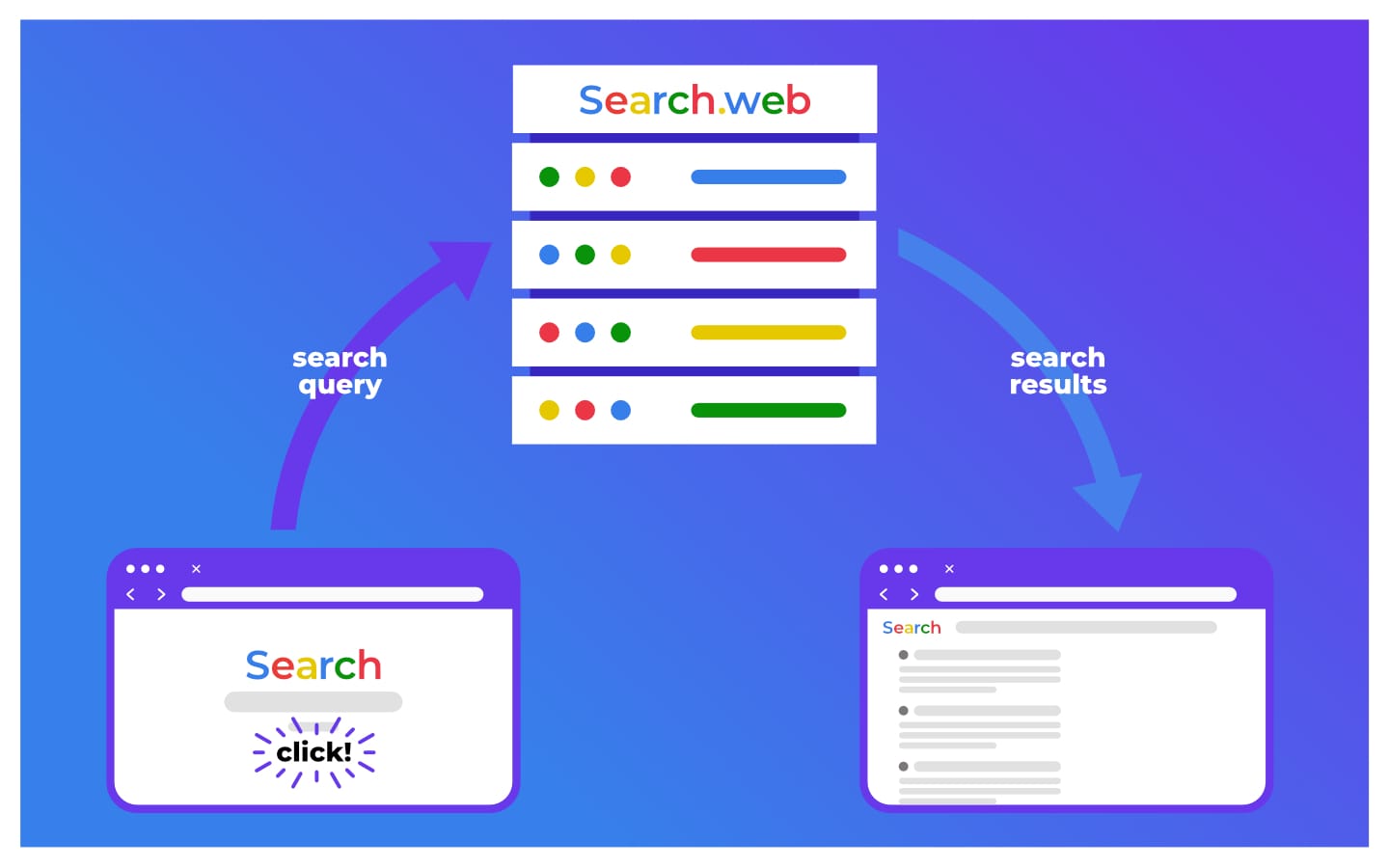

SerpApi's APIs allow you to perform search engine requests, and receive responses in ways that can be automated by the use of programming languages and "no code" platforms such as n8n or Google Sheets.

The above diagram translated to SerpApi's world would look like this:

As you can hopefully see, the behaviour of the API mirrors the way you would interact with a search engine via a browser as closely as possible - meaning if something costs two clicks in the browser, it will cost two requests with the API.
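The click-counting rule reduces to simple arithmetic. Here is a rough estimator, assuming about 10 results per page as in the example search; `estimate_credits` and its parameters are hypothetical names used for illustration, not part of SerpApi's API.

```python
import math


def estimate_credits(results_wanted: int, results_per_page: int = 10) -> int:
    """Estimate search credits as one per page requested (one per 'click').

    results_per_page defaults to 10, an assumption based on the example
    search in this article; real pages can return more or fewer results.
    """
    if results_wanted <= 0:
        return 0
    if results_per_page <= 0:
        raise ValueError("results_per_page must be positive")
    # Each page is one request/response cycle, so round up to whole pages.
    return math.ceil(results_wanted / results_per_page)


print(estimate_credits(10))  # 1
print(estimate_credits(20))  # 2
```

Treat the output as an estimate only: the engine decides how many results each page actually holds.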

This covers the basic method - but read on if you want to learn more!

An exception to the rule

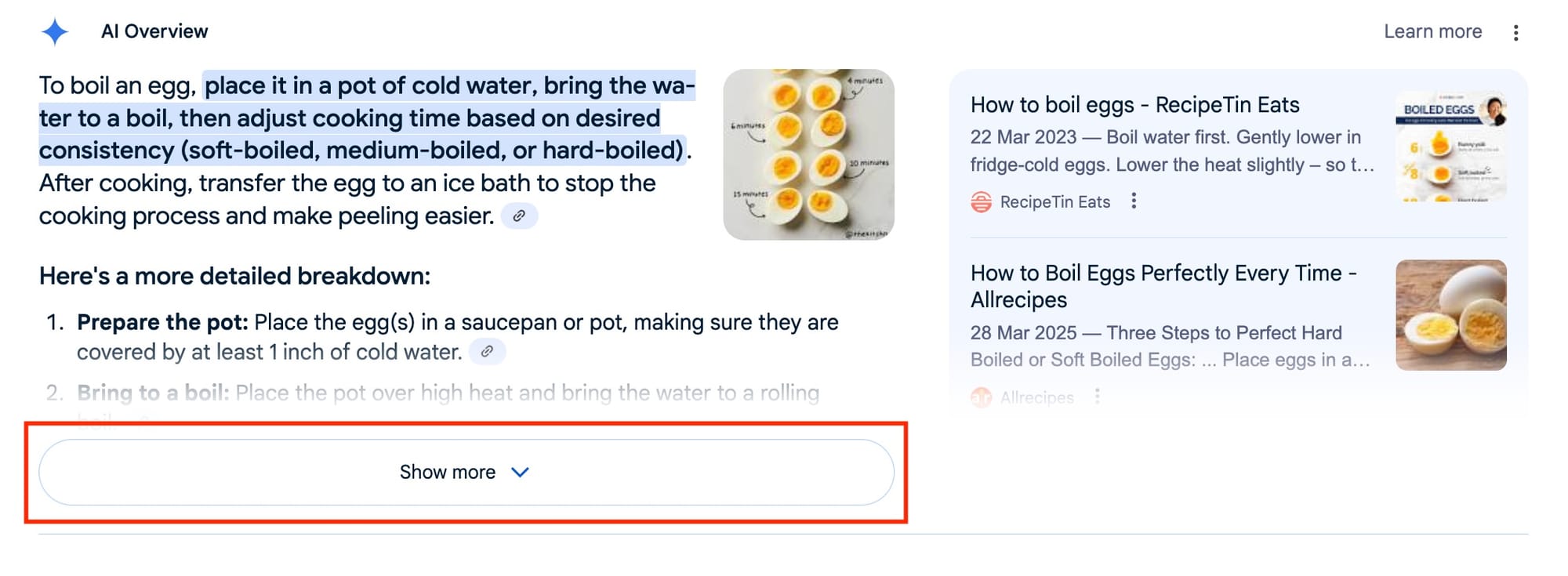

In a previous screenshot, you might have noticed a particular detail that would require you to use an extra click:

The 'Show more' button you see here requires a click in order to display the rest of the information that seems to fade out.

However, in these cases, this is a design choice to save space on the page. The information has already been retrieved in the initial response, and the click on 'Show more' will simply reveal the extra elements without needing to pull them from the server.

For the purposes of predicting search credit costs, clicks that do not lead to a request/response cycle will not result in a search credit being used.
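To make that distinction concrete, here is a small sketch that tallies only the clicks that start a request/response cycle. The `Click` type and `credits_for` function are hypothetical and exist purely to illustrate the rule.

```python
from dataclasses import dataclass


@dataclass
class Click:
    label: str
    # True only if the click starts a new request/response cycle.
    triggers_request: bool


def credits_for(clicks: list[Click]) -> int:
    """Count the clicks that would each map to one API request."""
    return sum(1 for click in clicks if click.triggers_request)


session = [
    Click("Google Search", True),  # loads the first results page
    Click("Show more", False),     # reveals content that was already loaded
    Click("Next page", True),      # loads the second results page
]
print(credits_for(session))  # 2
```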

"How do I get all of the results?"

Some users are looking to harvest as many results as possible. Some even hope to pull every record in Google's databases.

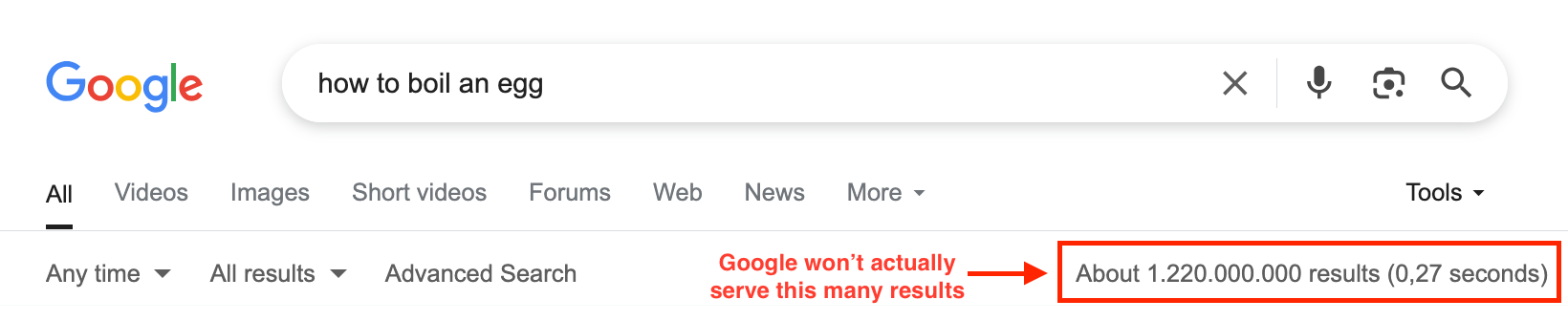

While it's now hidden under the "Tools" dropdown, Google still tells you how long your search took, and boasts approximately how many results they have to choose from:

According to Google, our egg-related search turns up over a billion results.

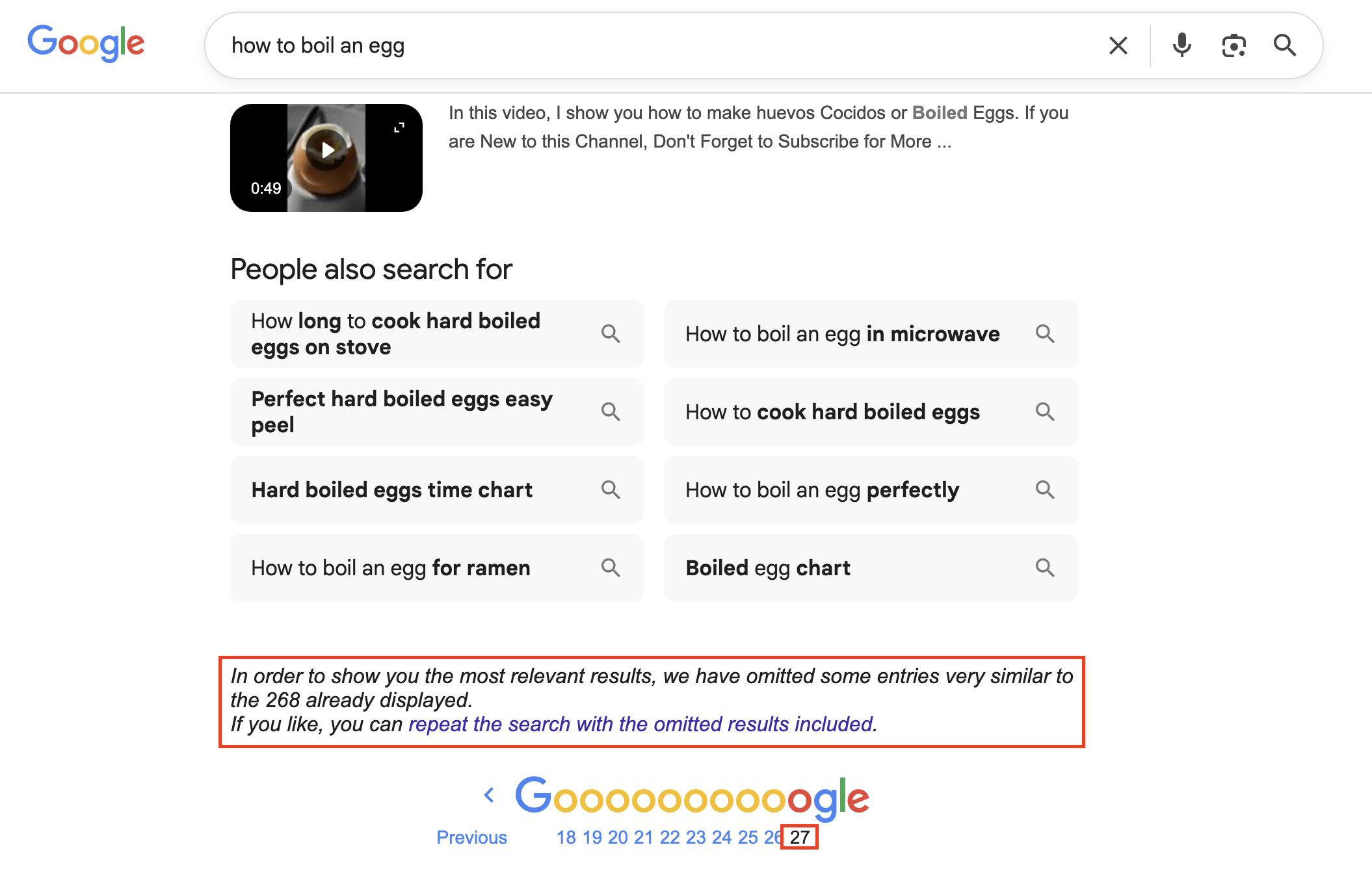

However, even if we keep paginating as far as Google will let us, when we scroll to the bottom of the page we see something curious:

As we can see in the screenshot, paginating through 27 pages only nets us 268 results.

268 = a whole lot less than 1.2 billion.

So we see that Google actually omits results from your search. While this is done with the intention of giving you more relevant search results, if you're looking to boost those result numbers, there's a parameter (also supported by SerpApi) called filter, which includes the omitted results when set to 0.
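As a minimal sketch of what that could look like in a request, the snippet below only builds a URL and sends nothing. Only the filter parameter comes from this article; the endpoint and the other parameter names (`engine`, `q`, `api_key`, `start`) are assumptions about the request format, so check them against the API documentation before relying on them. `build_search_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm against the API documentation.
SEARCH_ENDPOINT = "https://serpapi.com/search"


def build_search_url(query: str, api_key: str,
                     include_omitted: bool = False, start: int = 0) -> str:
    """Build a search URL, optionally asking for omitted results."""
    params = {"engine": "google", "q": query, "api_key": api_key}
    if start:
        # Assumed pagination offset: each new page is a separate request.
        params["start"] = start
    if include_omitted:
        # filter=0 asks for the results that would otherwise be omitted.
        params["filter"] = 0
    return f"{SEARCH_ENDPOINT}?{urlencode(params)}"


url = build_search_url("how to boil an egg", "YOUR_API_KEY", include_omitted=True)
print(url)
```

Setting filter to 0 does not change the cost model: each page requested is still one request/response cycle.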

Even with the omitted results included, Google won't serve you upwards of a billion articles on how to boil an egg.

But the key thing to bear in mind here is that any web scraping API is only ever going to be able to pull in the data that the search engine chooses to serve. And while Google might have more records than they choose to share, that is ultimately their decision.

What counts as a 'Successful' Search?

For any given request, SerpApi can only return whatever the search engine we're scraping decides to serve.

If a request errors out or fails, or if the search was served from cache, you will not be charged a search credit.

A simple way to remember this is: the search engine is responsible for the content - SerpApi is responsible for making sure the request/response cycle happens for each search.

For example: it's entirely possible to perform a search query which returns no results. Let's consider the following:

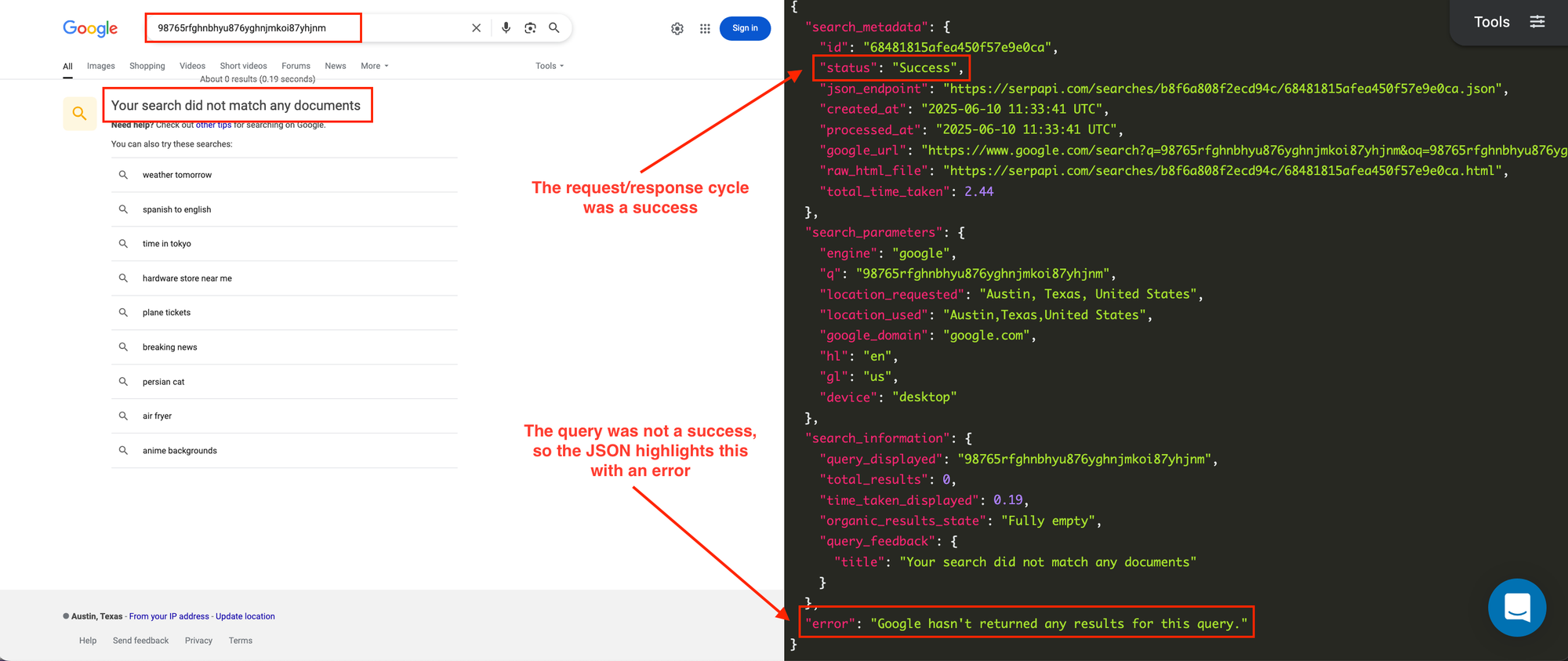

If you were to perform the same search via SerpApi, you would see something like the following:

In this case, the search was carried out successfully (there was a successful request/response cycle). However, the query came back empty because Google (unsurprisingly) did not have any results that matched the result of me running my finger across the keyboard a bunch of times.

Since the part you need SerpApi for was carried out, a search credit is debited - even though the JSON response contains an error message (which is included to help our users easily find which of the queries they sent were unsuccessful).
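The billing rule described in this section can be summed up in a few lines. This is a sketch of the rule as stated here, not SerpApi's actual billing logic; `is_billable` and its two flags are hypothetical names.

```python
def is_billable(request_completed: bool, served_from_cache: bool) -> bool:
    """Return True if a search would use a credit under the rule above.

    request_completed: the request/response cycle finished without an
        error or failed request (an empty result set still counts).
    served_from_cache: the response came from cache instead of a fresh search.
    """
    return request_completed and not served_from_cache


print(is_billable(True, False))   # True: completed search, even with zero results
print(is_billable(True, True))    # False: cached search
print(is_billable(False, False))  # False: error or failed request
```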

Conclusion

Hopefully you now feel more confident in your ability to estimate how many search credits you will need for a specific action (or at least know you have a method for doing so).

I also hope you feel more empowered with the use of the filter parameter to get (slightly) more voluminous searches.

As always, if you have any questions about how to make the most of our APIs, please get in touch with our Customer Success team either via the chat window on our site, or by sending us an e-mail at contact@serpapi.com, and we'll be delighted to help.

See you next time!