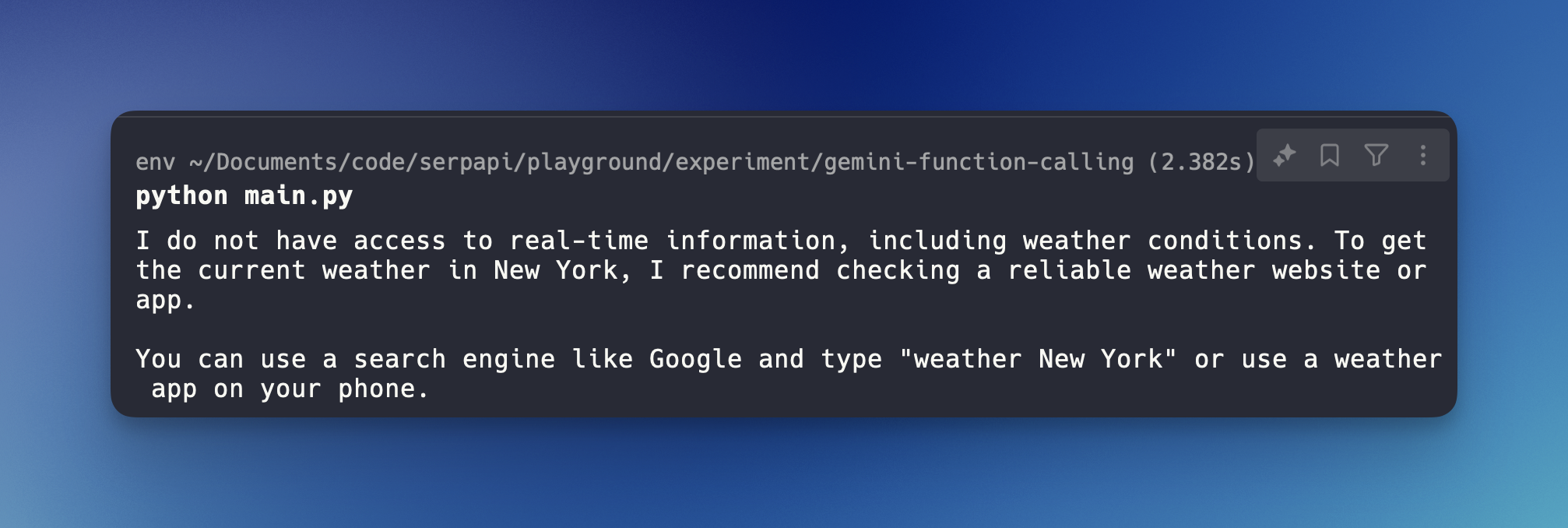

All AI models, including Gemini by Google, have a knowledge cutoff: a date after which nothing was included in their training data. This means they can't provide updates on events or developments after their last training update. This limitation is crucial to keep in mind when seeking information about recent events.

But don't worry! If you want to use the Gemini API but still need real-time data from the internet, you can use a feature called "Function Calling." With it, your application can call a custom function or an external API based on what the model suggests.

What is function calling?

Here is what Google says about function-calling:

Custom functions can be defined and provided to Gemini models using the Function Calling feature. The models do not directly invoke these functions, but instead generate structured data output that specifies the function name and suggested arguments. This output lets you write applications that take the structured output and call external APIs, and the resulting API output can then be incorporated into a further model prompt, allowing for more comprehensive query responses. - Gemini API Docs

Using function calling, we want to achieve 2 things:

- Parse specific data from the user's prompt, that is, turn human language into structured data.

- Call a custom function and pass the data we received as arguments.
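The two goals above can be sketched without calling any API. In this minimal sketch, the model_output dictionary is a hand-written stand-in for the structured data a model might return for a prompt like "I scored 0.7, did I pass?". Its shape and the is_passing function are illustrative assumptions, not the actual Gemini response format.

```python
# Hypothetical custom function that the application owns.
def is_passing(score):
    return score > 0.5

# Hand-written stand-in for the model's structured output (assumed shape).
# The model only *suggests* a function name and arguments.
model_output = {"name": "is_passing", "args": {"score": 0.7}}

# Goal 1: the human-language prompt has become structured data.
function_name = model_output["name"]
arguments = model_output["args"]

# Goal 2: our own code calls the function with those arguments.
passed = None
if function_name == "is_passing":
    passed = is_passing(**arguments)

print("passed:", passed)
```

The key point is that the model never runs is_passing itself; the application decides whether and how to call it.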


Basic function calling sample in Gemini API using Python

Here is a step-by-step tutorial on how to use the Gemini API with function calling.

Source code is available on GitHub: https://github.com/hilmanski/gemini-api-python-function-calling-sample

Step 1: Project Setup

Create a new Python environment for your project.

```bash
python -m venv env
source env/bin/activate
```

Install the Gemini API Python package.

```bash
pip install google-generativeai
```

Step 2: Get and export the Gemini API Key

Get your API Key in Google AI Studio and then export it.

```bash
export GEMINI_API_KEY=YOUR_API_KEY_HERE
```

Step 3: Code setup

Create a new Python file. You can name it whatever you want, for example main.py. Import the genai package and configure it with the API Key.

```python
import os
import google.generativeai as genai

genai.configure(api_key=os.getenv('GEMINI_API_KEY'))
```
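Because os.getenv returns None when a variable is not exported, a missing key may only surface later as a less obvious error. The sketch below shows one way to fail early with a clear message; require_env is a hypothetical helper name, not part of the Gemini package.

```python
import os

def require_env(name):
    """Return the value of environment variable `name`, or raise a clear error."""
    value = os.getenv(name)
    if not value:
        raise RuntimeError(f"{name} is not set; export it before running the script")
    return value
```

With this helper in place, the configuration line could read genai.configure(api_key=require_env('GEMINI_API_KEY')).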

Step 4: Declare a custom function

Let's create a new custom function and a schema to share with Gemini later.

To use function calling in Gemini, we need a function declaration: a schema that describes the actual function.

```python
# continue at main.py file ...

# Sample custom function
def score_checker(score):
    if score > 0.5:
        return "Student passed the test"
    else:
        return "Student failed the test"

# function declaration
score_checker_declaration = {
    'name': "score_checker",
    'description': "Check if a score is a pass or fail",
    'parameters': {
        "type": "object",
        "properties": {
            "score": {
                "type": "number",
                "description": "The score to check"
            }
        },
        "required": [
            "score"
        ]
    },
}
```

In this example, I have a simple function called score_checker that returns a text depending on the score. We will call this function.

You can also see a function declaration where we describe the function and its properties. We will share this with Gemini so Gemini can be aware of any available "tools" it has.
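Since the declaration already lists the expected parameters, it can also be used to sanity-check the arguments the model suggests before calling the real function. The following is a minimal sketch under that assumption; validate_args is a hypothetical helper, and it only understands the two schema types used in this tutorial ("number" and "string").

```python
# A compact copy of the declaration from this step, so the sketch runs on its own.
demo_declaration = {
    "name": "score_checker",
    "description": "Check if a score is a pass or fail",
    "parameters": {
        "type": "object",
        "properties": {
            "score": {"type": "number", "description": "The score to check"}
        },
        "required": ["score"],
    },
}

def validate_args(declaration, args):
    """Return a list of problems found in `args`; an empty list means OK."""
    schema = declaration["parameters"]
    problems = []
    # Every required parameter must be present.
    for name in schema.get("required", []):
        if name not in args:
            problems.append(f"missing required argument: {name}")
    # Only the schema types used in this tutorial are mapped here.
    python_types = {"number": (int, float), "string": str}
    for name, value in args.items():
        spec = schema["properties"].get(name)
        if spec is None:
            problems.append(f"unexpected argument: {name}")
            continue
        expected = python_types.get(spec["type"])
        if expected and not isinstance(value, expected):
            problems.append(f"{name} should be of type {spec['type']}")
    return problems

print(validate_args(demo_declaration, {"score": 0.7}))
print(validate_args(demo_declaration, {"score": "high"}))
```

An empty list means the suggested arguments match the declaration; anything else is a reason to skip the call or ask the user to rephrase.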

Step 5: Test the AI

Now for the important part: running the Gemini API!

```python
prompt = "I saw my test score was 0.7. Did I pass?"

model = genai.GenerativeModel('gemini-1.5-flash')
response = model.generate_content(
    prompt,
    tools=[{
        'function_declarations': [score_checker_declaration],
    }],
)
```

Explanation:

- Prepare a prompt.

- Set up a new model.

- Call the generate_content method, attaching the prompt and the custom function declaration.

Our goal is to make sure Gemini can parse the score from the natural language we provide in the prompt.

We can get the arguments and the name of the function Gemini wants us to call like this:

```python
function_call = response.candidates[0].content.parts[0].function_call
args = function_call.args
function_name = function_call.name
```

Assume we get the correct arguments and the correct function name. Let's call the actual function:

```python
if function_name == 'score_checker':
    result = score_checker(args['score'])
```

Now we have the result from the score_checker function.
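The lookup above assumes the model chose to call a function. It may instead answer with plain text, for example when the prompt has nothing to do with the declared function. The sketch below scans the response parts defensively. To stay runnable without an API key, it uses SimpleNamespace stand-ins that mimic only the attributes accessed here; find_function_call and fake_response are hypothetical helpers, and the stand-ins are not the real SDK types.

```python
from types import SimpleNamespace

def find_function_call(response):
    """Return (name, args) for the first function call in the response, or None."""
    for candidate in response.candidates:
        for part in candidate.content.parts:
            call = getattr(part, "function_call", None)
            # A text-only part has no function call, or one with an empty name.
            if call is not None and call.name:
                return call.name, dict(call.args)
    return None

# Stand-in objects that mimic only the attributes used above.
def fake_response(parts):
    content = SimpleNamespace(parts=parts)
    return SimpleNamespace(candidates=[SimpleNamespace(content=content)])

with_call = fake_response([
    SimpleNamespace(
        function_call=SimpleNamespace(name="score_checker", args={"score": 0.7})
    )
])
text_only = fake_response([SimpleNamespace(text="You passed!")])

print(find_function_call(with_call))
print(find_function_call(text_only))
```

When the helper returns None, the application can fall back to showing the model's text answer instead of calling score_checker.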

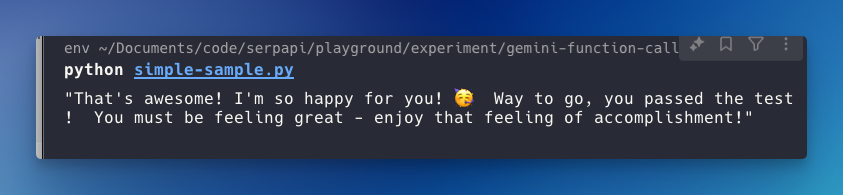

Step 6: Respond in a natural language

We can return the result as it is, but we can also have the AI phrase it in natural language. To do this, we'll call the generate_content method again with the result.

```python
response = model.generate_content(
    "Based on this information `" + result + "` respond to the student in a friendly manner.",
)
print(response.text)
```

Instead of a static text such as "Student passed the test" or "Student failed the test", we can get a more dynamic, conversational reply.
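If the follow-up prompt is needed in more than one place, it can be built by a small helper. This is only a sketch: build_reply_prompt and its tone parameter are hypothetical, and with the default tone it produces the same string as the concatenation used in this step.

```python
def build_reply_prompt(result, tone="friendly"):
    """Compose the follow-up prompt from a function result."""
    return (
        f"Based on this information `{result}` "
        f"respond to the student in a {tone} manner."
    )

print(build_reply_prompt("Student passed the test"))
```

The returned string can be passed to generate_content in place of the inline concatenation.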

Get answers from the internet in Gemini API

We've looked at the basics. Let's replace the static function with calling an external API, which enables our AI to grab knowledge from the internet.

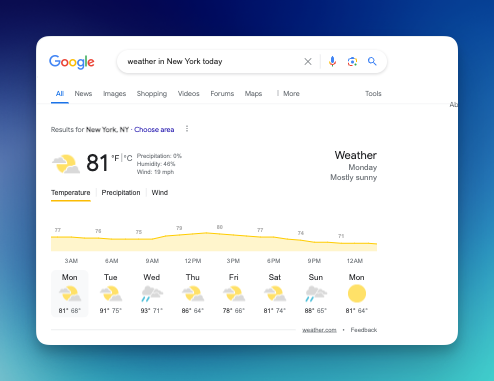

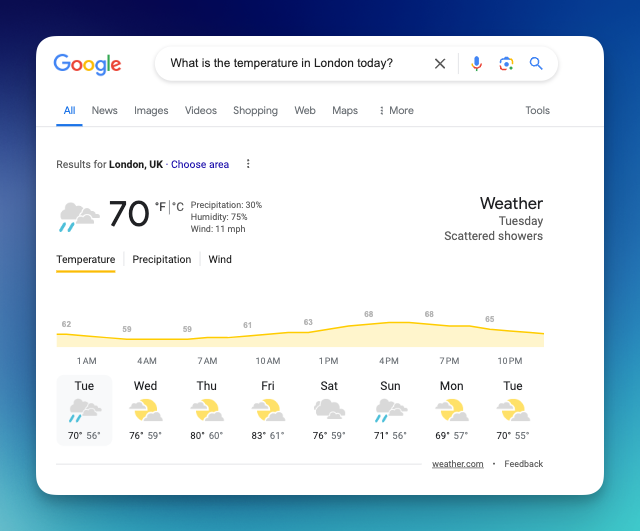

In this example, I will use the Google direct answer box API from SerpApi. This API allows us to grab any real-time data provided by Google SERP. For example, when asking about the weather, Google provides this answer in the answer box:

So, the idea is that we grab this data programmatically and pass the answer to the AI model. But before that, we need to take the user's question and turn it into a query to search on Google via SerpApi.

Let's dive in!

Note: the first three setup steps are the same as in the basic sample.

Step 1: Project Setup

Create a new Python environment for your project.

```bash
python -m venv env
source env/bin/activate
```

Install the Gemini API Python package.

```bash
pip install google-generativeai
```

Step 2: Get and export the Gemini API Key

Get your API Key in Google AI Studio and then export it.

```bash
export GEMINI_API_KEY=YOUR_API_KEY_HERE
```

Step 3: Code setup

Create a new Python file. You can name it whatever you want, for example main.py. Import the genai package and configure it with the API Key.

```python
import os
import google.generativeai as genai

genai.configure(api_key=os.getenv('GEMINI_API_KEY'))
```

Step 4: Install SerpApi and get your SerpApi API key

Make sure to register at serpapi.com. Grab your API key from the dashboard and export it like before.

```bash
export SERPAPI_API_KEY=your_api_key
```

Install the SerpApi Python package.

```bash
pip install google-search-results
```

Step 5: Declare a custom function

Let's declare the function and the function schema we want Gemini to use.

To use function calling in Gemini, we need a function declaration: a schema that describes the actual function.

```python
# Continue at main.py file
from serpapi import GoogleSearch

def get_answer_box(query):
    print("Parsed query: ", query)

    search = GoogleSearch({
        "q": query,
        "api_key": os.getenv('SERPAPI_API_KEY')
    })
    result = search.get_dict()

    if 'answer_box' not in result:
        return "No answer box found"

    return result['answer_box']

# function declaration
get_answer_box_declaration = {
    'name': "get_answer_box",
    'description': "Get the answer box result for real-time data from a search query",
    'parameters': {
        "type": "object",
        "properties": {
            "query": {
                "type": "string",
                "description": "The query to search for"
            }
        },
        "required": [
            "query"
        ]
    },
}
```

Explanation:

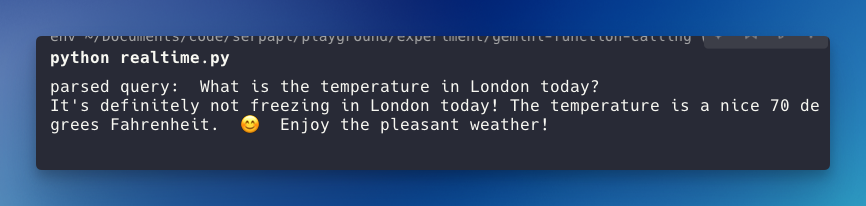

- I'm printing the query in the get_answer_box so we can see the parsed query returned by the AI.
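The get_answer_box function above handles a missing answer box, but not a failed request such as one made with an invalid API key. The sketch below separates the "pick the answer box" step into a pure function so it can be tested without network access. It assumes that failures are reported under a top-level "error" key in the result dictionary; that key, the helper name extract_answer_box, and the sample dictionaries are assumptions for illustration.

```python
def extract_answer_box(result):
    """Pick the answer box out of an already-fetched search result dictionary."""
    # Assumption: a failed request is reported under a top-level "error" key.
    if "error" in result:
        return "Search failed: " + str(result["error"])
    if "answer_box" not in result:
        return "No answer box found"
    return result["answer_box"]

# Made-up sample results, used only to exercise the three branches.
print(extract_answer_box({"error": "Invalid API key"}))
print(extract_answer_box({"organic_results": []}))
print(extract_answer_box({"answer_box": {"temperature": "5"}}))
```

Inside get_answer_box, the final lines could then be replaced by return extract_answer_box(search.get_dict()).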

Step 6: Call the AI

It's time to call the AI with the function calling feature. Note that the first call to the AI only parses the function name and arguments to use; it does not provide the answer.

```python
prompt = "It's freezing!, what is the temperature in London today? do you know it?"

model = genai.GenerativeModel('gemini-1.5-flash')
response = model.generate_content(
    prompt,
    tools=[{
        'function_declarations': [get_answer_box_declaration],
    }],
)
```

We can get the arguments (in this case, the query) and the name of the function to call.

```python
function_call = response.candidates[0].content.parts[0].function_call
args = function_call.args
function_name = function_call.name
```

Get the answer from SerpApi by calling the actual get_answer_box function with the query as a parameter.

```python
if function_name == 'get_answer_box':
    result = get_answer_box(args['query'])
```

Step 7: Respond in human natural language

Now that we have the data, let's pass it on to the AI and return a human response.

```python
import json  # move this import to the top of the file

# Turn the API response into a string.
# We're limiting the characters since the response can be very long.
data_from_api = json.dumps(result)[:500]

# Call the AI model to generate natural language
response = model.generate_content(
    """
    Based on this information: `""" + data_from_api + """`
    and this question: `""" + prompt + """`
    respond to the user in a friendly manner.
    """,
)
print(response.text)
```

I shared the original prompt from the user for more context.
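Slicing the serialized JSON at 500 characters can cut the data in the middle of a value and may drop the field that actually holds the answer. One alternative is to keep only a few selected fields before serializing. The sketch below assumes you know which keys matter for your kind of query; compact_answer_box is a hypothetical helper, and the keys and sample payload are made up for illustration, since answer boxes differ from query to query.

```python
import json

def compact_answer_box(answer_box, keys, max_chars=500):
    """Serialize only the selected keys that are present, capped at max_chars."""
    if not isinstance(answer_box, dict):
        # get_answer_box returns a plain string when no answer box is found.
        return str(answer_box)[:max_chars]
    selected = {key: answer_box[key] for key in keys if key in answer_box}
    # Fall back to the whole payload if none of the requested keys exist.
    return json.dumps(selected or answer_box)[:max_chars]

# Made-up sample payload; real answer boxes may use different keys.
sample = {
    "type": "weather_result",
    "temperature": "5",
    "unit": "Celsius",
    "thumbnail": "https://example.com/icon.png",
}
print(compact_answer_box(sample, ["temperature", "unit"]))
```

The cap is still applied as a safety net, but the selected fields are far less likely to be truncated.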

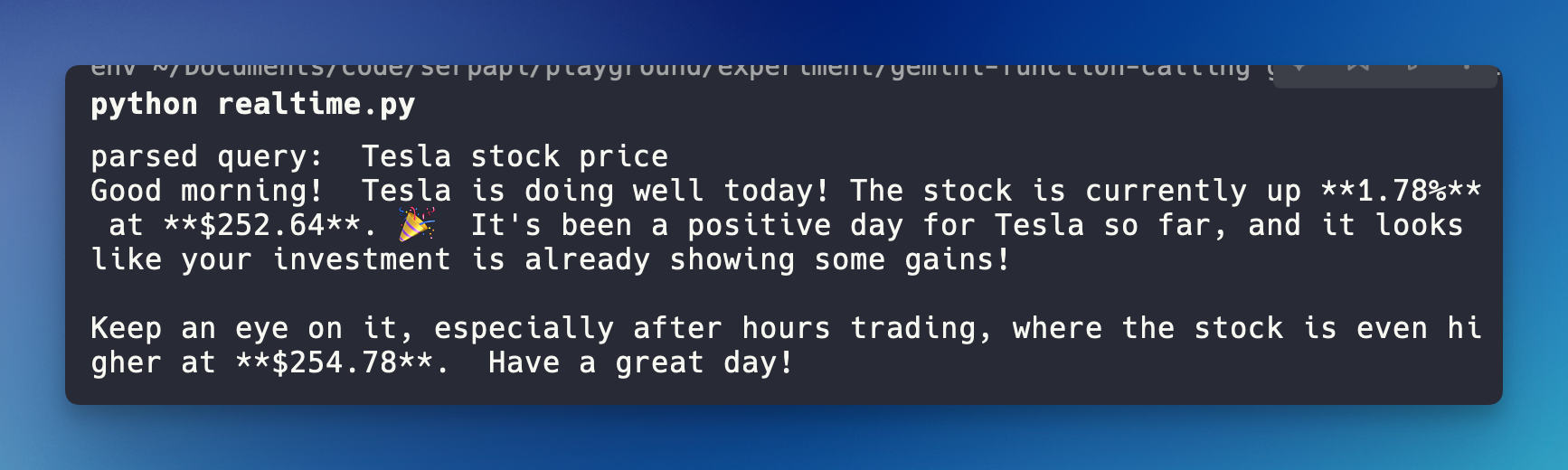

Run the program with python realtime.py (use your own file name if it differs, for example main.py). Here is the result I got:

As you can see, the AI takes the user's prompt into account (even contradicting the user's statement) and provides the current temperature that we got from the Google answer box.

Let's try a different prompt and ask about a stock price.

```python
# prompt = "It's freezing!, what is the temperature in London today? do you know it?" # comment out
prompt = "Morning! how is Tesla stock doing today? I just bought some shares yesterday."
```

Result:

That's it; feel free to take a look at the final source code https://github.com/hilmanski/gemini-api-python-function-calling-sample

As you can see, using a Web Search API like SerpApi will enable your AI model to access real-time data from the internet.

References:

- Intro function calling in Gemini API

- SerpApi Google answer box API

- Codelabs Gemini API